This is a quick investigation of the 1.6611Hz comb in h(t), which might be related to the OM2 heater (alogs 71108 and 71801).

During Tuesday maintenance, I measured the OM2 heater driver at the driver chassis (https://dcc.ligo.org/LIGO-D2000211) using a breakout board. Both the Beckhoff-side cable and the vacuum-side cable were attached to the chassis, and the driver was heating OM2 (H1:AWC-OM2_TSAMS_DRV_VSET=21.448W). How the chassis, the Beckhoff module and the in-vac OM2 are connected is shown in https://dcc.ligo.org/LIGO-E2100049.

The voltages I looked at were:

1. Across the positive and negative pins of the Beckhoff drive output that comes into the driver (pin 6-19 on the back DB25).

2. Between the Beckhoff positive drive and the driver ground (pin 6-13 on the back DB25).

3. Between the Beckhoff negative drive and the driver ground (pin 19-13 on the back DB25).

4. Across the driver positive and negative outputs (pin 1-14 on the front DB25).

5. Between the driver positive output and the driver ground (pin 14-13 on the front DB25).

6. Between the driver negative output and the driver ground (pin 1-13 on the front DB25).

I used an SR785 (single BNC input, floating mode, AC coupled) for all of the above, and additionally used a scope to look at 2. and 3.

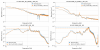

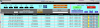

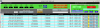

1., 4., 5. and 6. didn't look suspicious at all at low frequency, but 2. and 3. showed a forest of peaks consisting of ~1.66Hz and its odd-order harmonics (see the first picture attached). The 1.66Hz figure was derived from the 11th harmonic (18.25Hz/11), not from the fundamental peak, as the FFT resolution was not fine enough for that. (FWIW, the peak frequencies read off the SR785 are 1.625, 5.0, 8.3125, 11.625, 14.9375 and 18.25Hz.) Note that the amplitude is not huge (see the y-axis scale).
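Dividing the highest peak by its order is one way to get the fundamental; a least-squares fit of all six readings to f_n = n·f0 (odd n only) uses every peak at once. The sketch below is a minimal illustration of that arithmetic, assuming the six readings are harmonics 1, 3, ..., 11. The 1/16Hz grid check is only an observation about the numbers, not a recorded SR785 setting.

```python
import numpy as np

# Peak frequencies (Hz) read off the SR785 for measurements 2. and 3.
peaks_hz = np.array([1.625, 5.0, 8.3125, 11.625, 14.9375, 18.25])
# Assumed harmonic orders: odd multiples 1, 3, ..., 11 of the fundamental.
orders = np.arange(1, 2 * len(peaks_hz), 2)

# Estimate used in the text: highest peak divided by its harmonic order.
f0_single = peaks_hz[-1] / orders[-1]

# Least-squares fit of peaks_hz = orders * f0 (a line through the origin).
f0_fit = np.dot(orders, peaks_hz) / np.dot(orders, orders)

# Every reading is an integer multiple of 1/16 Hz, consistent with a coarse
# frequency grid on the analyzer (an inference, not a documented setting).
on_grid = bool(np.all(np.mod(peaks_hz, 0.0625) == 0))

print(f"{f0_single:.4f} {f0_fit:.4f} {on_grid}")  # prints: 1.6591 1.6600 True
```

Both estimates land near 1.66Hz, in line with the 1.6611Hz comb seen in h(t), while the fundamental reading itself (1.625Hz) is off by about one grid step.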

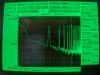

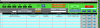

For 2. and 3., looking at a wider frequency range made it apparent that the forest extended to much higher frequency (at least ~100Hz before it starts to get smaller), and that something was glitching at O(1Hz) (attached video). No, the glitch is not the SR785 auto-adjusting its front-end gain; it's in the signal.

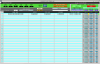

Each glitch was pretty fast (2nd picture: see t ≈ -24ms from the center, where there was a glitch roughly 2ms wide), and it's not clear if it's related to the comb. I could not see the 1.66Hz periodicity on the scope but, as I already wrote, the comb is not huge in amplitude.
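Whether the ~2ms glitches are related to the comb is not settled by these measurements. As a purely illustrative toy model (the sample rate, rectangular pulse shape and half-period sign flip are all assumptions, not measured), the sketch below compares a train of ~2ms pulses at ~1.66Hz with and without alternating polarity. Pulses that narrow give harmonics that stay strong past 100Hz. A half-wave-symmetric waveform, x(t + T/2) = -x(t), such as the alternating-sign train here or a square wave, has only odd harmonics, while one same-sign pulse per period has all of them.

```python
import numpy as np

# Toy-model parameters (assumptions for illustration, not measured values).
FS = 4096        # sample rate, Hz
PERIOD = 2468    # samples per period, so f0 = FS / PERIOD ~ 1.66 Hz
WIDTH = 8        # pulse width in samples, ~2 ms at 4096 Hz
N_PERIODS = 32   # an integer number of periods puts each harmonic on an FFT bin


def glitch_train(alternating: bool) -> np.ndarray:
    """Rectangular ~2 ms pulses repeating every PERIOD samples.

    alternating=False: one positive pulse per period.
    alternating=True: a positive pulse, then a negative one half a period later.
    """
    one_period = np.zeros(PERIOD)
    one_period[:WIDTH] = 1.0
    if alternating:
        half = PERIOD // 2
        one_period[half:half + WIDTH] = -1.0
    return np.tile(one_period, N_PERIODS)


def harmonic_amplitudes(x: np.ndarray, n_max: int) -> np.ndarray:
    """Normalised |FFT| at harmonics 1..n_max of f0 = FS / PERIOD."""
    spectrum = np.abs(np.fft.rfft(x)) / len(x)
    return spectrum[N_PERIODS * np.arange(1, n_max + 1)]


f0 = FS / PERIOD
single = harmonic_amplitudes(glitch_train(alternating=False), 61)
paired = harmonic_amplitudes(glitch_train(alternating=True), 61)

print(f"f0 = {f0:.4f} Hz, harmonic 61 at {61 * f0:.1f} Hz")
# One pulse per period: every harmonic is present.
print("single, 2nd/1st harmonic:", single[1] / single[0])
# Alternating pulses: even harmonics cancel, odd ones survive.
print("paired, 2nd/1st harmonic:", paired[1] / paired[0])
# With ~2 ms pulses, the odd harmonics are still strong near 100 Hz.
print("paired, 61st/1st harmonic:", paired[60] / paired[0])
```

This only shows what kind of signal could produce an odd-harmonic forest reaching ~100Hz; it does not show that the observed glitches repeat at 1.66Hz or alternate in sign.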

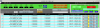

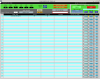

Anyway, all of this suggests that the comb is between the Beckhoff ground and the driver ground (i.e. it's common mode for the Beckhoff positive and negative outputs). The differential receiver inside the driver seems to reject it, so we're not pounding the OM2 heater element with this comb (3rd picture, which corresponds to measurement 4.).
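The common-mode argument rests on measurement 1. being the difference of measurements 2. and 3.: V(6-19) = V(6-13) - V(19-13). A minimal synthetic sketch, assuming a symmetric ±2.5V drive split and an identical small odd-harmonic comb on both legs (all numbers hypothetical), shows the comb vanishing from the difference and remaining in the common-mode average. Doing this with real data would need both legs recorded at the same time on two channels, which the single-input SR785 measurements were not.

```python
import numpy as np

# Hypothetical numbers for illustration only.
FS = 1024.0      # sample rate, Hz
DURATION = 8.0   # s
F0 = 1.66        # comb fundamental, Hz
DRIVE = 5.0      # differential drive, V

t = np.arange(int(FS * DURATION)) / FS

# Same comb (odd harmonics 1..11 of F0) on both legs, i.e. common mode.
comb = sum(1e-3 / n * np.sin(2 * np.pi * n * F0 * t) for n in range(1, 12, 2))

v_pos = +DRIVE / 2 + comb  # like measurement 2.: pin 6 vs pin 13 (driver ground)
v_neg = -DRIVE / 2 + comb  # like measurement 3.: pin 19 vs pin 13

v_diff = v_pos - v_neg          # like measurement 1.: pin 6 vs pin 19
v_cm = 0.5 * (v_pos + v_neg)    # common-mode component

print("comb left in differential:", np.max(np.abs(v_diff - DRIVE)))
print("comb in common mode:", np.max(np.abs(v_cm)))
```

An ideal differential receiver does exactly this subtraction; a real one rejects common mode only as well as its common-mode rejection ratio allows.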

I cannot say at this point if this is the cause of the comb in h(t), but it certainly looks as if Beckhoff is somehow involved.

I haven't repeated this with zero drive voltage from Beckhoff, though I probably should have. If the comb is not present in 2. and 3. with zero drive voltage from Beckhoff (I doubt it, but it's still possible), we could use an adjustable power supply as an alternative.

The heater driver chassis is mounted in the same rack as the DCPD interface. Maybe we could relocate the driver, just in case the Beckhoff ground is somehow coupling into the other chassis in the same rack.

It would also be useful to know if anything happened in the Beckhoff world at the same time the comb appeared in h(t), apart from our starting to heat OM2.