WP12709 Replace EX Dolphin IX S600 Switch

Jonathan, Erik, EJ, Dave:

The Dolphin IX switch at EX was damaged by the 06apr2025 power glitch. It continued to function as a switch, but its network interface stopped working. This meant we couldn't fence a particular EX front end from the Dolphin fabric by disabling its switch port via the network interface; instead we were using the IPC_PAUSE function of the IOP models. Also, because the RCG needs to talk over the network to a switch on startup, Erik configured the EX front ends to control an unused port on the EY switch.

This morning Erik replaced the broken h1rfmfecex0 with a good spare. The temporary workaround of having the EX front ends control a port on the EY switch was removed.

Before this work the EX SWWD was bypassed on h1iopseiex and h1cdsrfm was powered down.

During the startup of the new switch, the IOP models for SUS and ISC were time-glitched, putting them into a DACKILL state. All models on h1susex and h1iscex were restarted to recover from this.

Several minutes later, h1susex spontaneously crashed and required a reboot. Everything has been stable from this point onwards.

WP12687 Add STANDDOWN EPICS channels to DAQ

Dave:

I added a new H1EPICS_STANDDOWN.ini to the DAQ; it was installed as part of today's DAQ restart.

WP12719 Add two FCES Ion Pumps and One Gauge To Vacuum Controls

Gerardo, Janos, Patrick, Dave

Patrick modified the h0vacly Beckhoff to read out two new FCES Ion Pumps and a new Gauge.

The new H0EPICS_VACLY.ini was added to the DAQ, requiring an EDC+DAQ restart.

WP12689 Add SUS SR3/PR3 Fast Channels To DAQ

Jeff, Oli, Brian, Edgard, Dave:

New h1sussr3 and h1suspr3 models (HLTS suspensions) were installed this morning. Each model added two 512Hz fast channels to the DAQ. Renaming of subsystem parts resulted in the renaming of many fast and slow DAQ channels. A summary of the changes:

In sus/common/models three files were changed (svn version numbers shown):

HLTS_MASTER_W_EST.mdl production=r31259 new=r32426

SIXOSEM_T_STAGE_MASTER_W_EST.mdl production=r31287 new=r32426

ESTIMATOR_PARTS.mdl production=r31241 new=r32426

HLTS_MASTER_W_EST.mdl:

Only change is to the DAQ_Channels list: added two channels, M1_ADD_[P,Y]_TOTAL.

SIXOSEM_T_STAGE_MASTER_W_EST.mdl:

At top level, change the names of the two ESTIMATOR_HXTS_M1_ONLY blocks:

PIT -> EST_P

YAW -> EST_Y

Inside the ADD block:

Add two testpoints P_TOTAL, Y_TOTAL (referenced by HLTS mdl)

ESTIMATOR_PARTS.mdl:

Rename block EST -> FUSION

Rename filtermodule DAMP_EST -> DAMP_FUSION

Rename epicspart DAMP_SIGMON -> OUT_DRIVEMON

Rename testpoint DAMP_SIG -> OUT_DRIVE

DAQ_Channels list changed according to the above renames.

DAQ Changes:

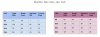

This results in a large number of DAQ changes for SR3 and PR3. For each model:

+496 slow chans, -496 slow chans (rename of 496 channels).

+64 fast chans, -62 fast chans (add 2 chans, rename 62 chans).
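The per-model bookkeeping above can be checked with a few lines of arithmetic. This is a minimal sketch, assuming a rename shows up in the DAQ as one removal plus one addition (which is how the +/- figures read); the constant and function names are illustrative only and not part of any DAQ tool.

```python
# Per-model DAQ channel changes for h1sussr3 and h1suspr3, as reported above.
# Assumption: a renamed channel counts as one removal plus one addition.
SLOW_RENAMED = 496  # slow channels renamed per model
FAST_RENAMED = 62   # fast channels renamed per model
FAST_NEW = 2        # new 512Hz fast channels per model (M1_ADD_[P,Y]_TOTAL)

def per_model_delta():
    """Return (added, removed) channel counts for one model, slow and fast."""
    slow = (SLOW_RENAMED, SLOW_RENAMED)
    fast = (FAST_RENAMED + FAST_NEW, FAST_RENAMED)
    return {"slow": slow, "fast": fast}

models = ["h1sussr3", "h1suspr3"]
delta = per_model_delta()

# Net change in channel count, summed over both models.
net_slow = sum(delta["slow"][0] - delta["slow"][1] for _ in models)
net_fast = sum(delta["fast"][0] - delta["fast"][1] for _ in models)

print(delta)               # {'slow': (496, 496), 'fast': (64, 62)}
print(net_slow, net_fast)  # 0 4
```

So the slow channel count is unchanged, and the fast channel count grows by two per model (four in total across SR3 and PR3).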

DAQ Restart

Jonathan, Dave:

The DAQ was restarted for several changes:

New SR3 and PR3 INI files, with fast and slow channel renames and the addition of 512Hz fast channels.

New H0EPICS_VACLY.ini, adding Ion Pumps and Gauge to EDC.

New H1EPICS_STANDDOWN.ini, adding IFO standdown channels to EDC.

This was a full EDC+DAQ restart. The procedure was:

stop TW0 and TW1, then restart EDC

restart DAQ 0-leg

restart DAQ 1-leg

As usual, GDS1 needed a second restart. Unusually, FW1 spontaneously restarted itself after having run for 55 minutes, which is an uncommonly late restart.

Jonathan tested new FW2 code which sets the run number in one place and propagates it to the various frame types.

These aftershocks just keep coming!

Had a decent attempt at DRMI and PRMI before this latest one rolled through, but it just wouldn't catch. After another initial alignment, where I had to touch SRM again to get SRY to lock, we're ready for another lock attempt. The ground is still moving a bit though, so I'll try to be patient.

DRMI has locked a few times, but we can't seem to make it much further than that. While the ground motion seems to have finally gone away, the FSS isn't locking. It looks like the TPD voltage is lower than we want, but maybe that's just because it's unlocked?

After futzing with it for 40min, it eventually locked after I gave the autolocker a break for ~10min. I actually forgot to turn it back on while I was digging through alogs, but that did the trick!

Another seismon warning of an incoming aftershock.

DRMI just locked again! It looks like it's progressing well now, so I'll set H1_MANAGER back up.

Re: FSS issues, Ryan S. and I have been having a chat about this. From what we can tell, the FSS was oscillating pretty wildly during this period; see the attached trends that Ryan put together of different FSS signals during this time. We do not know what caused this oscillation. The FSS autolocker was bouncing between states 2 and 3 (left center plot), an indication of the loop oscillating, which is also seen in the PC_MON (upper center plot) and FAST_MON (center center plot) signals. The autolocker was also doing its own gain changing during this time (upper right plot), but this isn't enough to clear a really bad loop oscillation. The usual cure is to manually lower the FSS Fast and Common gains to the lowest slider value of -10 and wait for the oscillation to clear (it usually clears pretty quickly), then slowly raise them back to their normal values, usually in 1dB increments. There is an FSS guardian node that does this, but we have specifically stopped it from doing so during RefCav lock acquisition, as it tends to delay RefCav locking (the autolocker and the guardian node aren't very friendly with each other).

In the future, should this happen again, try manually lowering the FSS gain sliders (found on the FSS MEDM screen under the PSL tab on the Sitemap) to their minimum and wait a little bit. If this doesn't clear the oscillation, contact either myself or Ryan S. for further assistance.
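For reference, the manual gain recovery described above (drop to the -10 slider minimum, wait, then step back up in 1dB increments) amounts to the slider sequence below. This is a minimal illustrative sketch, not the FSS guardian node's code: the function name is hypothetical, it does not talk to EPICS or MEDM, and the nominal gain is a placeholder because the normal Fast and Common values are not given here.

```python
def fss_gain_recovery_steps(nominal_db, minimum_db=-10, step_db=1):
    """Return the slider settings (dB) for one FSS gain: start at the minimum,
    then step up by step_db until reaching nominal_db (hypothetical helper).
    The operator waits at the minimum for the oscillation to clear before
    stepping up; this function only lists the values, it does not wait."""
    if nominal_db < minimum_db:
        raise ValueError("nominal gain is below the slider minimum")
    steps = [minimum_db]
    value = minimum_db
    # Raise in fixed increments while the next step stays below nominal.
    while value + step_db < nominal_db:
        value += step_db
        steps.append(value)
    # Finish exactly on the nominal value (covers non-integer nominals).
    if steps[-1] != nominal_db:
        steps.append(nominal_db)
    return steps

# Hypothetical nominal value, for illustration only; use the real FSS setting.
print(fss_gain_recovery_steps(-5))  # [-10, -9, -8, -7, -6, -5]
```

The same sequence would apply separately to the Fast and the Common gain sliders.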