Adjusted curtains

The attached plot shows the typical sawtooth behavior of our timing system. The PPS signals compare the 1 PPS signal from our timing system against other GPS units and the atomic clock. All of these comparisons show the sawtooth, indicating that it is a feature of our timing system. The master GPS unit that feeds our timing system is a Trimble unit. The GPS_A_PPSOFFSET channel is an internal readback, read out over an RS232 link. It shows ~80 ns one-second jumps up and down, synchronized with the sawtooth. This might indicate that the sawtooth originates in the Trimble. The OCXERROR channel shows the 1 PPS comparison between the timing system and the Trimble unit; it is used as the error signal for the timing master OCXO. Units are ns, except for OCXERROR, which is in seconds. The -1450 ns offset is in the atomic clock.
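A minimal sketch of how one might flag the ~80 ns one-second jumps in a 1 Hz readback such as GPS_A_PPSOFFSET, so their times can be compared with the sawtooth. It assumes the readback is already loaded as a list of values in ns, one per second; the function name and the 40 ns threshold (half the observed jump size) are hypothetical choices, not part of the timing system software.

```python
from typing import List, Tuple


def find_jumps(offsets_ns: List[float], threshold_ns: float = 40.0) -> List[Tuple[int, float]]:
    """Return (index, step) for each sample-to-sample step larger than threshold_ns.

    offsets_ns is assumed to hold one sample per second (one per PPS), in ns,
    so index i is the second at which the jump shows up.
    """
    jumps = []
    for i in range(1, len(offsets_ns)):
        step = offsets_ns[i] - offsets_ns[i - 1]
        if abs(step) > threshold_ns:
            jumps.append((i, step))
    return jumps


if __name__ == "__main__":
    # Synthetic data: slow drift plus one +80 ns excursion lasting a single second.
    series = [0.5 * k for k in range(10)]
    series[4] += 80.0
    for index, step in find_jumps(series):
        print(f"second {index}: step {step:+.1f} ns")
```

A single-second excursion shows up as a pair of opposite-sign steps one sample apart; lining those indices up against the sawtooth reset times is one way to test the synchronization described above.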

TITLE: 08/28 Day Shift: 15:00-23:00 UTC (08:00-16:00 PST), all times posted in UTC
STATE of H1: Commissioning
INCOMING OPERATOR: None
SHIFT SUMMARY: Jeff K. ran charge measurements. Sheila fixed a bug from the SUS model change that accounted for the additional low frequency noise seen yesterday and this morning.
LOG:
15:00 UTC Restarted nuc5.
15:04 UTC Restarted video2. IFO stuck at LOCKING_ARMS_GREEN upon arrival, ETMY needed alignment.
15:20 UTC Kyle to end X VEA.
15:29 UTC Lock loss from CARM_ON_TR.
15:31 UTC Restarted video4.
15:42 UTC Lock loss from DRMI_LOCKED.
15:48 UTC Set ISI config to WINDY_NO_BRSX.
15:49 UTC Lock loss from DRMI_ASC_OFFLOAD.
16:00 UTC Kyle back.
16:14 UTC NLN. Additional low frequency noise present.
16:37 UTC Damped PI modes 27 and 28.
17:13 UTC Karen to warehouse and then mid Y.
17:32 UTC Jeff K. tuning MICH feedforward.
17:38 UTC Lock loss.
17:38 UTC Set ISI config back to WINDY. Leaving in DOWN to let Jeff K. run charge measurements.
17:51 UTC Hugh and Jim to HAM6.
17:59 UTC Hugh and Jim back. Hugh to LVEA. TJ to optics lab. Amber leading tour through control room.
18:27 UTC Hugh back.
18:44 UTC Karen leaving mid Y.
18:48 UTC Sheila restarting ITMY model: SUS and ISI WDs tripped. Charge measurements done.
18:53 UTC Daniel to squeezer bay.
18:58 UTC Relocking, DAQ restart.
19:10 UTC Daniel done, TJ done. Starting an initial alignment.
19:34 UTC Phone call: Hanford monthly alert system test.
19:46 UTC Initial alignment done.
20:26 UTC TJ to optics lab.
20:48 UTC Lock loss.
21:19 UTC Bubba and visitor to LVEA.
21:20 UTC Jeff B. to end stations and mechanical room (not VEAs) to check dust monitor pumps.
21:25 UTC NLN. Jeff K. starting BSC ISI charge measurements.
21:28 UTC Bubba back.
21:36 UTC Jeff K. done with charge measurements.
21:40 UTC Bubba to H2 electronics building.
21:57 UTC Mike and Fred taking visitor into LVEA.
21:59 UTC Corey taking visitor to overpass.
22:08 UTC Jeff B. done.
22:17 UTC Lock loss (Jeff K. driving HAMs); HAM2 ISI, IM1, IM2 and IM3 tripped.
22:45 UTC Mike, Fred and visitor back.
22:53 UTC Timing system error, SYS-TIMING_X_PPS_A_ERROR_FLAG: error code briefly at 1024 (PPS 1 OOR).

The following units have been balance calibrated as per LIGO-T1600116-v1:

#132740069233007, #132740069233002 and #132140069233006

The unit ending in 007 had been in use on the optic in the LSB Bonding lab and was found to be out of spec as defined in the procedure listed above. I was able to adjust it into an acceptable balance per the procedure.

#132740069233004 failed to meet the tolerances as stated in the above procedure.

There is another unit in the Bonding Lab garbing area that has no regulator. I didn't get the number off that one.

The data for these units may be found here

J. Kissel First regular charge measurement after O2; last regular charge measurement before the Test Mass Discharge System (TMDS) is used tomorrow on ETMX. Trends are consistent with everything that has been true since the July 6th 2017 EQ (I've added by-hand, eye-ball trend lines to guide the eye). The reason I've been wishy-washy on claiming whether there was a jump in effective bias voltage: some quadrants don't show a change after July 6th, others do, and it depends on the suspension. We should really take the time to convert these results into a geometric display of the optic face #between02and03squadgoals. I won't display the relative actuation strength plots any more, since we don't really care about them when we're not in an observation run and they don't show much more information than the effective bias voltage plots. If someone still wants to see them, just post a comment to this log with your request.

Jonathan, Dave:

We have disabled the remote LHO CDS login permit system; Yubikey holders can now log into LHO CDS without having to get the operator to open a permit. The access system is running in monitor-only mode, and remote logins are displayed on the CDS Overview MEDM.

Sheila, Dave:

Sheila corrected and restarted the h1susitmy model. The change required a DAQ restart, which was done.

2017_08_28 11:53 h1susitmy

2017_08_28 12:00 h1broadcast0

2017_08_28 12:00 h1dc0

2017_08_28 12:02 h1fw0

2017_08_28 12:02 h1fw1

2017_08_28 12:02 h1fw2

2017_08_28 12:02 h1nds0

2017_08_28 12:02 h1nds1

2017_08_28 12:02 h1tw1

The problem was a typo -- the ITMX FF control signals were going to both ITMX and ITMY. This is likely the cause of our poor ~20-200 Hz performance overnight. Now fixed and on our way back up through initial alignment.

Nothing to report other than the normal, weekly current adjustments and the marginally twitchy chiller plots.

Concur with Ed, all looks normal.

Based on some concern about various clipping possibilities at End Y, Travis S. removed irises on the EY Rx side on Aug 15 (see WP 7111; this says EX but was corrected to say EY in the paper book). When Sudarshan evaluated calibration data after this (alog 38235), he found that the Rx calibration values had returned to the "non-clipping" values from before the end of May.

I wanted to confirm that this was true over time, not just on the day of calibration, so I pulled up the last 6 months on Dataviewer; see results in the attached photo. It seems the Rx PD does flatten out over the 2 weeks since iris removal.

Rick, Travis, and I are currently under the assumption that this indicates clipping at the iris due to small apertures being aligned at high incidence angles. However, this is far from conclusive and will need to be monitored for several more weeks or months. This also does not provide any clues as to the cause of the monotonic drop in output voltage since June.

First I got the IFO to DC Readout so that IR is resonant in both arms, then opened the ALS shutters and misaligned PZT2 on each arm according to ALOG 35979.

The reference images were taken at 04:13:00 UTC (21:13 PST) on 2017-08-28. Then power up occurred at 04:21:50 UTC and reached nominal power at 04:24:30 UTC.

I've shuttered the ALS at 05:42:00 UTC and reset the PZT loops to their nominal bias values.

On Friday Thomas and I ran excitations on all 4 ESDs in order to measure the 4 coefficients in Equation 2 of G1600699, which gives the force applied by the ESDs:

F = α(V_b-V_s)^2 + β(V_b+V_s) + β_2(V_b-V_s) + γ(V_b+V_s)^2

The first 2 excitations for each suspension were on the bias path with no signal voltage so that the linear response is:

F = [β+β_2+2(α+γ)V_b] δV_b

The bias path was excited twice, first with the normal bias of 380 V on and second with no bias. Then the script excited the signal path which gives a linear response of:

F = [β-β_2+2(α+γ)V_b+2(α-γ)V_s] δV_s

This path is excited 3 times: once with no DC offset on any electrode, once with an offset of 7.6 V on the signal electrodes, and once with the bias at its normal value of 380 V.

The first 4 of these measurements allow us to make a complete measurement of all four coefficients, α, β, β_2 and γ. The fifth measurement is redundant and could be skipped; I used it as a sanity check. I am still worried that I have some signs wrong, which would explain why these results are confusing.
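The inversion implied by the four measurements can be sketched as below. It simply inverts the two linear-response expressions as written in this entry (bias path and signal path), using the quoted 380 V bias and 7.6 V signal offset; each measurement is assumed to yield the linear coefficient k in N/V. The class and function names are hypothetical, and the actual InLockChargeMeasurements scripts may do this differently (for example with a fit). If any sign in those expressions is revised, the inversion has to be redone with the corrected expressions.

```python
from dataclasses import dataclass

V_BIAS = 380.0    # normal bias voltage [V]
V_SIG_DC = 7.6    # DC offset on the signal electrodes [V]


@dataclass
class EsdCoefficients:
    alpha: float   # N/V^2
    beta: float    # N/V
    beta_2: float  # N/V
    gamma: float   # N/V^2

    @property
    def v_eff(self) -> float:
        """Effective bias voltage (beta - beta_2) / (2*(alpha - gamma)) [V]."""
        return (self.beta - self.beta_2) / (2.0 * (self.alpha - self.gamma))


def solve_coefficients(k_bias_on, k_bias_off, k_sig_zero, k_sig_offset):
    """Recover alpha, beta, beta_2, gamma from the first four measurements.

    k_bias_on:    bias path, bias at 380 V  -> beta + beta_2 + 2(alpha+gamma)V_b
    k_bias_off:   bias path, no bias        -> beta + beta_2
    k_sig_zero:   signal path, no offsets   -> beta - beta_2
    k_sig_offset: signal path, 7.6 V offset -> beta - beta_2 + 2(alpha-gamma)V_s
    """
    sum_beta = k_bias_off
    diff_beta = k_sig_zero
    sum_ag = (k_bias_on - k_bias_off) / (2.0 * V_BIAS)        # alpha + gamma
    diff_ag = (k_sig_offset - k_sig_zero) / (2.0 * V_SIG_DC)  # alpha - gamma
    return EsdCoefficients(
        alpha=0.5 * (sum_ag + diff_ag),
        beta=0.5 * (sum_beta + diff_beta),
        beta_2=0.5 * (sum_beta - diff_beta),
        gamma=0.5 * (sum_ag - diff_ag),
    )


def predicted_fifth(c: EsdCoefficients) -> float:
    """Signal-path response with the bias on: the redundant fifth measurement."""
    return c.beta - c.beta_2 + 2.0 * (c.alpha + c.gamma) * V_BIAS
```

Comparing predicted_fifth() with the measured fifth response is the sanity check described above.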

| | ETMX | ETMY | ITMX | ITMY |
|---|---|---|---|---|
| α (N/V^2) | 2.1e-10 | 2.0e-10 | 8e-11 | 5.5e-11 |
| β (N/V) | -9.9e-10 | 2.0e-8 | 1.0e-8 | 5.6e-9 |
| β_2 (N/V) | 2.8e-9 | 3.2e-8 | -4.2e-9 | -8.5e-9 |
| γ (N/V^2) | 1.5e-10 | 1.2e-10 | -4.0e-11 | -1.2e-11 |
| V_eff = (β-β_2)/(2(α-γ)) | -31 V | -75 V | 60 V | 105 V |

All of the scripts needed for taking the measurement and getting the coefficients are in userapps/sus/common/quad/scripts/InLockChargeMeasurements/

The table above is wrong because several minus signs (which differ between the ITM and ETM ESD drivers) were wrong. Here is a corrected table:

| | ETMX | ETMY | ITMX | ITMY |
|---|---|---|---|---|
| α (N/V^2) | 9.6e-11 | 8.7e-11 | 4.9e-11 | 5.8e-11 |
| β (N/V) | -1e-9 | 2e-8 | -1e-8 | -5.6e-9 |
| β_2 (N/V) | 2.8e-9 | 3.2e-8 | 4.2e-9 | 8.5e-9 |
| γ (N/V^2) | 2.6e-10 | 2.3e-10 | -9.2e-12 | -1.5e-11 |
| V_eff = (β-β_2)/(2(α-γ)) | 12 V | 38 V | -124 V | -97 V |

The values in this table still had some calibration errors. New log coming soon.
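As a quick arithmetic check, the V_eff row can be recomputed from the rounded coefficients posted in the corrected table. This only checks the formula against the posted numbers; it does not address the calibration errors mentioned above. The dictionary and function names are hypothetical.

```python
# Rounded coefficients as posted in the corrected table
# (alpha, gamma in N/V^2; beta, beta_2 in N/V).
CORRECTED = {
    "ETMX": dict(alpha=9.6e-11, beta=-1e-9, beta_2=2.8e-9, gamma=2.6e-10),
    "ETMY": dict(alpha=8.7e-11, beta=2e-8, beta_2=3.2e-8, gamma=2.3e-10),
    "ITMX": dict(alpha=4.9e-11, beta=-1e-8, beta_2=4.2e-9, gamma=-9.2e-12),
    "ITMY": dict(alpha=5.8e-11, beta=-5.6e-9, beta_2=8.5e-9, gamma=-1.5e-11),
}


def v_eff(alpha: float, beta: float, beta_2: float, gamma: float) -> float:
    """Effective bias voltage (beta - beta_2) / (2*(alpha - gamma)) in volts."""
    return (beta - beta_2) / (2.0 * (alpha - gamma))


if __name__ == "__main__":
    for optic, coeffs in CORRECTED.items():
        print(f"{optic}: V_eff = {v_eff(**coeffs):7.1f} V")
```

With these inputs ETMX, ITMX and ITMY come out at about 12 V, -122 V and -97 V, close to the tabulated values, while ETMY comes out near 42 V rather than 38 V. Since β for ETMY is posted to only one significant figure, the difference is plausibly rounding in the posted coefficients.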

1515-1915 hours local -> Kyle R. on site. At around 1800 hrs. local, as I was walking between the VPW and the OSB, I happened upon 15 unescorted people, all wearing matching T-shirts, taking a group photo by the BT section display outside the exterior OSB Control Room hallway door. They said that they were with "AldeBaran alph Tau" from the Czech Republic and were touring the Western USA, mostly scientific points of interest. They demonstrated a familiarity with LIGO and had already made it past the "ISIS" gate, so I gave them an impromptu tour. I escorted them into the Control Room (Sheila D. present), then on to the overpass. Afterwards, I walked them to their cars. They hoped to call on Monday and arrange for an "actual" tour. They also said that LHO was their last stop before flying out of Portland, OR, in two days' time.

WP7120 Sheila and Dave:

The h1lsc model was modified to add two new SHMEM IPC senders. The h1omc model was modified to add two new SHMEM receivers (for the new h1lsc channels) and two new PCIE (Dolphin) sender channels. Each of the SUS ITM models was modified to add one new PCIE receiver channel to receive from h1omc.

The models were compiled and installed; the modifications to H1.ipc are shown below.

The models were restarted in the order: h1lsc, h1omc, h1susitmx, h1susitmy.

Because IPC receivers had been added, their slow channels were added to the DAQ INI file, requiring a DAQ restart.

After the DAQ was restarted, I discovered two things:

1. I forgot to check the write status of h1tw1 before restarting. I have checked that h1tw1 was writing raw minute trends every 5 minutes.

2. The EDCU did not come back to a GREEN status. The reason is that a new Guardian node, ALS_DIFF_ETMY_ESD, had been created (and added to the Guardian DAQ INI file) but is not being run. We'll fix this tomorrow; for now the EDCU is RED and is missing these 19 Guardian channels. The disconnected channel list is shown below.

ALS_DIFF_ETMY_ESD Guardian DAQ channels (disconnected):

H1:GRD-ALS_DIFF_ETMY_ESD_VERSION

H1:GRD-ALS_DIFF_ETMY_ESD_EZCA

H1:GRD-ALS_DIFF_ETMY_ESD_OP

H1:GRD-ALS_DIFF_ETMY_ESD_MODE

H1:GRD-ALS_DIFF_ETMY_ESD_STATUS

H1:GRD-ALS_DIFF_ETMY_ESD_WORKER

H1:GRD-ALS_DIFF_ETMY_ESD_LOAD_STATUS

H1:GRD-ALS_DIFF_ETMY_ESD_ERROR

H1:GRD-ALS_DIFF_ETMY_ESD_CONNECT

H1:GRD-ALS_DIFF_ETMY_ESD_EXECTIME

H1:GRD-ALS_DIFF_ETMY_ESD_STALLED

H1:GRD-ALS_DIFF_ETMY_ESD_NOTIFICATION

H1:GRD-ALS_DIFF_ETMY_ESD_NOMINAL_N

H1:GRD-ALS_DIFF_ETMY_ESD_REQUEST_N

H1:GRD-ALS_DIFF_ETMY_ESD_STATE_N

H1:GRD-ALS_DIFF_ETMY_ESD_TARGET_N

H1:GRD-ALS_DIFF_ETMY_ESD_OK

H1:GRD-ALS_DIFF_ETMY_ESD_ARCHIVE_ID

H1:GRD-ALS_DIFF_ETMY_ESD_TIME_UP

H1.ipc additional channels:

[H1:LSC-OMC_ITMX]
ipcType=SHMEM
ipcRate=16384
ipcHost=h1lsc0
ipcModel=h1lsc
ipcNum=59
desc=Automatically generated by IPCx.pm on 2017_Aug_27_13:36:33

[H1:LSC-OMC_ITMY]
ipcType=SHMEM
ipcRate=16384
ipcHost=h1lsc0
ipcModel=h1lsc
ipcNum=60
desc=Automatically generated by IPCx.pm on 2017_Aug_27_13:36:33

[H1:OMC-ITMX_LOCK_L]
ipcType=PCIE
ipcRate=16384
ipcHost=h1lsc0
ipcModel=h1omc
ipcNum=385
desc=Automatically generated by IPCx.pm on 2017_Aug_27_16:09:43

[H1:OMC-ITMY_LOCK_L]
ipcType=PCIE
ipcRate=16384
ipcHost=h1lsc0
ipcModel=h1omc
ipcNum=386
desc=Automatically generated by IPCx.pm on 2017_Aug_27_16:09:43
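The H1.ipc entries are INI-style stanzas, so a quick way to review a change like this is to parse them and group the channels by model and IPC type. This is a minimal sketch using Python's standard configparser, assuming the stanza layout shown above; summarize_ipc is a hypothetical helper, not part of the RCG tools. In these four entries the ipcModel field matches the sending model (h1lsc for the SHMEM senders, h1omc for the PCIE senders).

```python
import configparser
from collections import defaultdict

# The four new entries from the H1.ipc excerpt above.
IPC_FRAGMENT = """\
[H1:LSC-OMC_ITMX]
ipcType=SHMEM
ipcRate=16384
ipcHost=h1lsc0
ipcModel=h1lsc
ipcNum=59
desc=Automatically generated by IPCx.pm on 2017_Aug_27_13:36:33

[H1:LSC-OMC_ITMY]
ipcType=SHMEM
ipcRate=16384
ipcHost=h1lsc0
ipcModel=h1lsc
ipcNum=60
desc=Automatically generated by IPCx.pm on 2017_Aug_27_13:36:33

[H1:OMC-ITMX_LOCK_L]
ipcType=PCIE
ipcRate=16384
ipcHost=h1lsc0
ipcModel=h1omc
ipcNum=385
desc=Automatically generated by IPCx.pm on 2017_Aug_27_16:09:43

[H1:OMC-ITMY_LOCK_L]
ipcType=PCIE
ipcRate=16384
ipcHost=h1lsc0
ipcModel=h1omc
ipcNum=386
desc=Automatically generated by IPCx.pm on 2017_Aug_27_16:09:43
"""


def summarize_ipc(text: str) -> dict:
    """Group (channel, ipcNum) pairs by (ipcModel, ipcType)."""
    # Only '=' separates keys from values, since desc values contain ':'.
    parser = configparser.ConfigParser(delimiters=("=",), interpolation=None)
    parser.optionxform = str  # keep key case as written (ipcType, ipcNum, ...)
    parser.read_string(text)
    groups = defaultdict(list)
    for channel in parser.sections():
        entry = parser[channel]
        key = (entry["ipcModel"], entry["ipcType"])
        groups[key].append((channel, int(entry["ipcNum"])))
    return dict(groups)


if __name__ == "__main__":
    for (model, ipc_type), channels in summarize_ipc(IPC_FRAGMENT).items():
        print(model, ipc_type, channels)
```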

Here is the start log for this afternoon's changes:

2017_08_27 16:16 h1lsc

2017_08_27 16:18 h1omc

2017_08_27 16:18 h1susitmx

2017_08_27 16:20 h1susitmy

2017_08_27 16:23 h1broadcast0

2017_08_27 16:23 h1dc0

2017_08_27 16:23 h1fw0

2017_08_27 16:23 h1fw1

2017_08_27 16:23 h1fw2

2017_08_27 16:23 h1nds0

2017_08_27 16:23 h1nds1

2017_08_27 16:23 h1tw1

These model restarts were intended to allow us to send the DARM signal to the ITMs. Previously, the LSC model had PCIE IPCs to the ITMs, while for the ETMs the LSC had shared memory IPCs to send the signals to the OMC model, where they are summed with the DARM signals and sent to the end stations using RFM IPCs.

Today Dave and I modified the models so that the ITM signals are routed more like the ETM signals: the LSC has SHMEM IPCs to send the signals to the OMC model, which then has PCIE IPCs to send them to the ITMs.

We were able to relock fine after this, but the LSC feedforward, which is routed through the new IPCs, is not well tuned. For MICH, we used to operate with a filter gain of -15.9; today I got some decent (not well tuned) MICH subtraction with a gain of +600. For SRCL we started to get a small amount of subtraction with a gain of around 22, while our nominal gain was -1.

I don't understand why this is happening, but will leave the IFO to Thomas Vo for some Hartmann tests. Hopefully we can look at what happened early in the morning.

I came in to lock the IFO for Adam's injections which are scheduled for 2 hours from now, (38380), but have had about 4 BS ISI ST1 CPS trips in the last hour.

Two of these might have happened while the BS was isolating stage 2 (I have not double checked) but at least one happened while we were just sitting in CHECK_IR (no feedback to the BS sus, ISI state should not have been changing). The screenshot attached is for that most recent trip in CHECK_IR

Edit: Made it to a fully locked interferometer and was engaging ASC when it happened again; again it was a glitch in the ST1 H3 CPS. Second screenshot.

Edit again: This seems very similar to the situation described in 37499 and 37522. The third screenshot shows the glitches getting worse over the last 1:40 minutes. Jim describes power cycling the satellite racks, which seemed to make the problem go away last time.

Another update: I power cycled the 3 top chassis in the CER rack SEI C5; these are labeled BSC ISI interface chassis and have cables going to the BSC2 CPSs, GS13s and L4Cs. The ISI has not tripped in about 20 minutes. I am relocking but paused to damp violin modes.

No glitches since ~22:30 UTC on 26 Aug. Made a note in FRS 8517.

Sheila plans to come in and lock the IFO this afternoon so that I can try to finish these detchar safety injections. I've scheduled 7 injections; the first one will begin at 16:06:22 PDT and the last at 16:51:22 PDT. Here is the update to the schedule:

1187824000 H1 INJECT_DETCHAR_ACTIVE 0 1.0 detchar/detchar_27July2017_PCAL_{ifo}.txt

1187824450 H1 INJECT_DETCHAR_ACTIVE 0 1.0 detchar/detchar_27July2017_PCAL_{ifo}.txt

1187824900 H1 INJECT_DETCHAR_ACTIVE 0 1.0 detchar/detchar_27July2017_PCAL_{ifo}.txt

1187825350 H1 INJECT_DETCHAR_ACTIVE 0 1.0 detchar/detchar_27July2017_PCAL_{ifo}.txt

1187825800 H1 INJECT_DETCHAR_ACTIVE 0 1.0 detchar/detchar_27July2017_PCAL_{ifo}.txt

1187826250 H1 INJECT_DETCHAR_ACTIVE 0 1.0 detchar/detchar_27July2017_PCAL_{ifo}.txt

1187826700 H1 INJECT_DETCHAR_ACTIVE 0 1.0 detchar/detchar_27July2017_PCAL_{ifo}.txt
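The schedule lines above are GPS start times spaced 450 s apart. A minimal sketch for generating them and converting GPS to wall-clock time, assuming the 18 s GPS-UTC offset that applies to 2017 dates and a fixed UTC-7 h offset for PDT in August; the helper names are hypothetical and this is not the injection system's own tooling.

```python
from datetime import datetime, timedelta, timezone

GPS_EPOCH = datetime(1980, 1, 6, tzinfo=timezone.utc)
LEAP_SECONDS = 18  # GPS - UTC offset valid from 2017-01-01 onward


def gps_to_utc(gps_seconds: float) -> datetime:
    """Convert GPS seconds to a UTC datetime (only valid while GPS-UTC = 18 s)."""
    return GPS_EPOCH + timedelta(seconds=gps_seconds - LEAP_SECONDS)


def schedule_lines(first_gps: int, n: int, spacing_s: int, waveform: str) -> list:
    """Build schedule lines in the format used above (hypothetical helper)."""
    return [
        f"{first_gps + i * spacing_s} H1 INJECT_DETCHAR_ACTIVE 0 1.0 {waveform}"
        for i in range(n)
    ]


if __name__ == "__main__":
    waveform = "detchar/detchar_27July2017_PCAL_{ifo}.txt"
    for line in schedule_lines(1187824000, 7, 450, waveform):
        gps = int(line.split()[0])
        local = gps_to_utc(gps) - timedelta(hours=7)  # PDT = UTC - 7 h
        print(line, "#", local.strftime("%H:%M:%S PDT"))
```

The first and last lines map to 16:06:22 and 16:51:22 PDT, matching the times quoted above.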

The IFO should be left out of observation mode. Also, I'm not sure if the PCALX 1500Hz line gets turned back on, but this also needs to be turned off. Simply typing the following on the command line will do the trick:

caput H1:CAL-PCALX_PCALOSC1_OSC_SINGAIN 0

caput H1:CAL-PCALX_PCALOSC1_OSC_COSGAIN 0

The interferometer is locked in nominal low noise and the PCAL X 1500 Hz line is off as of 22:55 UTC. I hope that the BS ISI will not trip during the injections (alog 38382).

The interferometer unlocked just before 1187825964, so I think that 4 or 5 of these injections would have completed before it unlocked. I don't know why it unlocked.

According to the Guardian log (see attached), the first 5 of these injections made it in. The fifth one ended at 1187825913 (about 50 s before the lock loss). This should be enough injections for now. Thank you Sheila for coming in on a Saturday afternoon and locking the IFO for us!

Short version: I made omega scans for the 5 sets of injections that were done above, and you can find them here: https://ldas-jobs.ligo-wa.caltech.edu/~jrsmith/omegaScans/O2_detchar_injections/ (note that after the first set, the remaining sets are in sub-directories named by the start time of each set).

Long version: The injection files are here: https://daqsvn.ligo-la.caltech.edu/svn/injection/hwinj/Details/detchar/. These are timeseries sampled at 16384 Hz, and they start at the injection times listed in the original entry above. The individual injections start 0.5 s after the times given in the alog and are separated by 3 s each. Some simple Matlab to plot the injection file (plot attached) and print a list of times is:

% Plot hardware injections
fnm = 'detchar_27July2017_PCAL_H1.txt';
data = load(fnm);                    % timeseries sampled at 16384 Hz
t = (0:length(data)-1)/16384;        % time vector [s]
figure;
plot(t,data)
xlabel('time [s]')
ylabel('amplitude [h]')
title(strrep(fnm,'_','\_'))
orient landscape
saveas(gcf,'injections.pdf')

% Print times of injections in the first set
t_start = 1187824000+0.5;            % first injection is 0.5 s after the scheduled time
times = t_start:3.0:t_start+106;     % injections are 3 s apart
fprintf('%.2f\n',times)

To submit the omega scans I used wdq-batch like so: (gwpysoft-2.7) [jrsmith@ldas-pcdev1 O2_detchar_injections]$ wdq-batch -i H1 times-1.txt, then submitted the resulting dag to Condor.
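A Python counterpart for building one times file per injection set is sketched below. It assumes wdq-batch reads one GPS time per line (the layout the Matlab snippet above is meant to print), that the five completed sets are the first five schedule entries, and that each set holds 36 injections (t_start:3.0:t_start+106 gives 36 times; 5 sets x 36 = 180, matching the total analysed below). The times-N.txt naming beyond times-1.txt is a hypothetical extension.

```python
# Scheduled GPS start times of the 5 sets that completed before the lock loss.
SET_STARTS = [1187824000, 1187824450, 1187824900, 1187825350, 1187825800]
OFFSET_S = 0.5    # first injection starts 0.5 s after the scheduled time
SPACING_S = 3.0   # injections are 3 s apart
N_PER_SET = 36    # matches t_start:3.0:t_start+106 in the Matlab above


def injection_times(set_start: float) -> list:
    """GPS times of the individual injections in one set."""
    t0 = set_start + OFFSET_S
    return [t0 + k * SPACING_S for k in range(N_PER_SET)]


def write_time_files(prefix: str = "times") -> list:
    """Write times-1.txt ... times-5.txt, one GPS time per line (hypothetical names)."""
    names = []
    for i, start in enumerate(SET_STARTS, 1):
        name = f"{prefix}-{i}.txt"
        with open(name, "w") as fh:
            fh.writelines(f"{t:.2f}\n" for t in injection_times(start))
        names.append(name)
    return names
```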

OmegaScan results are here: https://ldas-jobs.ligo-wa.caltech.edu/~jrsmith/omegaScans/O2_detchar_injections/

These will take some hours to run and we will then look at the results to see if there are any obvious unsafe channels. In addition we'll look statistically using hveto.

This is the H1 equivalent of the comment on the hveto safety analysis of L1's detchar injections on page 35675.

I ran hveto_safety on the injections mentioned above, looking for coincidences within a 0.1 s time window.

The results of the analysis using >6 SNR omicron triggers can be found here: https://ldas-jobs.ligo-wa.caltech.edu/~tabbott/HvetoSafety/H1/O2/safetyinjections/D20170826/results/H1-HVETO_omicron_omicron-1187823990-125/safety_6.html

The configuration for the analysis can be found here: https://ldas-jobs.ligo-wa.caltech.edu/~tabbott/HvetoSafety/H1/O2/safetyinjections/D20170826/results/H1-HVETO_omicron_omicron-1187823990-125/H1-HVETO_CONF-1187823990-125.txt
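The core of a safety check like this is a time coincidence between injection times and auxiliary-channel triggers. Below is a simplified stand-in for that step, not hveto_safety itself; it treats the 0.1 s window as plus or minus 0.1 s around each injection (an assumption) and the function names are hypothetical. hveto also assesses the statistical significance of the coincidences, which this sketch does not.

```python
from bisect import bisect_left


def coincident(injections, triggers, window=0.1):
    """Return the injection times with at least one trigger within +/- window seconds."""
    trig = sorted(triggers)
    hits = []
    for t in injections:
        i = bisect_left(trig, t - window)  # first trigger at or after t - window
        if i < len(trig) and trig[i] <= t + window:
            hits.append(t)
    return hits


def coincidence_fraction(injections, triggers, window=0.1):
    """Fraction of injections with a coincident trigger in one auxiliary channel."""
    if not injections:
        return 0.0
    return len(coincident(injections, triggers, window)) / len(injections)
```

A channel whose triggers coincide with far more injections than chance would predict is a candidate unsafe channel.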

I missed that there were 4 extra sets of injections, so I've redone the hveto safety analysis on the complete set of 180 injections.

TITLE: 08/25 Day Shift: 15:00-23:00 UTC (08:00-16:00 PST), all times posted in UTC
STATE of H1: Commissioning
INCOMING OPERATOR: None
SHIFT SUMMARY: Last shift of O2! No issues until Beckhoff SDF died during Adam's detchar safety injections. Jonathan has restarted h1build and it is currently running a file system check. Intention bit set to commissioning. Observatory mode set to commissioning. IFO at NLN.
LOG:
16:02 UTC Power cycled video2. MEDM screen updates were intermittent.
16:07 UTC Restarted the range integrand DMT that is displayed on video0. It had been running locally on video0 instead of through ssh on nuc6.
17:23 UTC Gave Adam M. remote access to cdsssh and h1hwinj1.
18:50 UTC GRB verbal alarm. Confirmed with LLO.
15:00 UTC End of O2! Set observatory mode to calibration. Changed INJ_TRANS guardian node to INJECT_SUCCESS.
22:43 UTC Adam M. starting detchar safety injections. Set intent bit to commissioning. HFD to mid X to check on RFAIR box.
~23:09 UTC Beckhoff SDF died. IFO_LOCK guardian node went into error (lost connection to SDF channels). Adam's injections interrupted. Jonathan investigating.
23:23 UTC Jonathan restarted h1build and it is running a file system check. Adam canceling remainder of injections. Transitioned observatory mode to commissioning.

15:00 UTC should be 22:00 UTC.

[Aidan]

I've been investigating the apparent increase in range that occurred this morning when CO2X was turned off. This would seem to indicate that either (a) the CO2 laser is somehow misaligned or deformed and is causing a very poor lens, or (b) the present level of lensing (SELF heating + CO2) is too much. It's worth noting that the requested (and delivered) levels of CO2X laser power haven't changed significantly over the last four or five months.

If we assume (b) and also that the better range in the past is partially due to a better thermal state, then the conclusion is that either the effective CO2 lens or the SELF heating lens has increased.

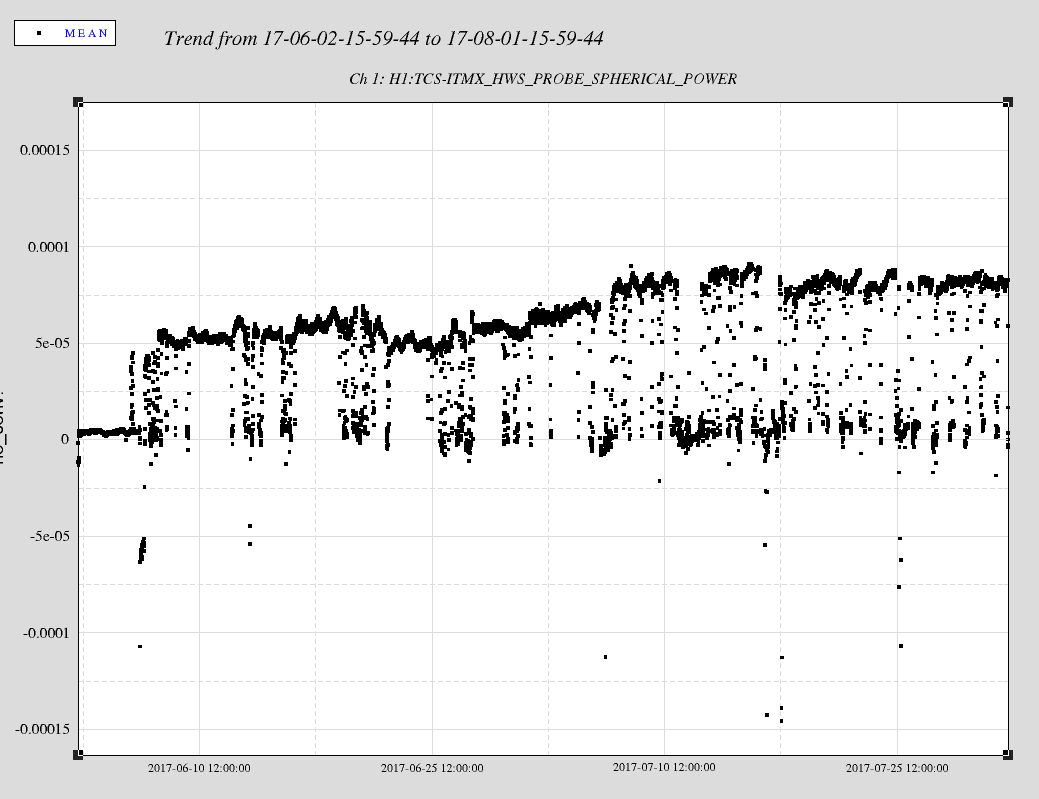

I've gone back and looked at the HWSX spherical power data (H1:TCS-ITMX_HWS_PROBE_SPHERICAL_POWER) from the two months around the earthquake. The relevant value here is the spherical power change per lock: the difference between the upper and lower levels that are obvious in the time series, which shows the transient lens from SELF heating and CO2 laser changes. There is an interesting period from 22 June to 7 July where this trends upwards by approximately 25 micro-diopters (about 50%). This indicates that the apparent lens change per lock seen by the HWS increased significantly.

Two factors also play into this. (1) the CO2 laser is being turned on and off during this time (to keep the thermal state warm when the IFO has lost lock). However there is no significant change in the CO2 power level behaviour over the corresponding time range. (2) we know there is already a point absorber on ITMX as well as uniform absorption. The spherical power value is fitted to the low spatial-frequency lens. The presence of a known high spatial frequency point in the wavefront complicates this. For example, it's possible that the HWS alignment may be drifting and the point absorber is contributing more to the total spherical power value. This obviously needs to be investigated further by looking at the stored gradient fields.

However, there does seem to be a real effect, as the range got much better (~15%) when the CO2 laser was turned off.

One thing to note is that if the IFO beam is moving across the surface of the optic (specifically, across the point absorber), then we would see a 50% increase in the amount of absorbed power if the IFO beam moved by dr = 7-10 mm. This is based on the estimate of the point absorber being at a radius (r_point) of about 36-44 mm from the center of the optic (see aLOG 35071 and aLOG 34868).

Specifically: dP_abs = exp(-2*[(r_point - dr)/w0]^2) - exp(-2*[(r_point)/w0]^2)

relative_change = dP_abs/exp(-2*[(r_point)/w0]^2)
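The relative change above can be evaluated numerically with a short sketch. The formula is the one given in this entry, for a Gaussian intensity profile I(r) proportional to exp(-2 r^2 / w0^2). The beam radius w0 is not stated here, so w0 = 53 mm (the nominal aLIGO beam radius at the ITMs) is an assumption, and the function name is hypothetical.

```python
import math


def relative_change(r_point_mm: float, dr_mm: float, w0_mm: float = 53.0) -> float:
    """(I(r_point - dr) - I(r_point)) / I(r_point) for I(r) ~ exp(-2 r^2 / w0^2).

    w0_mm = 53 mm is an assumed ITM beam radius, not a value from this entry.
    """
    before = math.exp(-2.0 * (r_point_mm / w0_mm) ** 2)
    after = math.exp(-2.0 * ((r_point_mm - dr_mm) / w0_mm) ** 2)
    return (after - before) / before


if __name__ == "__main__":
    for r_point, dr in [(36.0, 10.0), (40.0, 8.5), (44.0, 7.0)]:
        print(f"r_point = {r_point} mm, dr = {dr} mm: "
              f"+{100 * relative_change(r_point, dr):.0f}%")
```

Under that assumption, a 7 mm move at r_point = 44 mm gives about a 50% increase and a 10 mm move at r_point = 36 mm gives about 55%, consistent with the estimate above.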