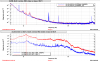

M 6.5 - 36km W of Yongle, China
2017-08-08 13:19:49 UTC, 33.217°N 103.843°E, 10.0 km depth

Lockloss at 14:05 UTC.

It seems the System Time for Seismon stopped updating at 1186191618 GPS (Aug 08 2017 01:40:00 UTC), yet its System Uptime and Keep Alive are still updating.

14:10 UTC ISI platforms started tripping. Transitioned ISI config to LARGE_EQ_NOBRSXY.

Tripped: ISI ITMY stage 2, ISI ETMY stage 2, ISI ITMY stage 1, ISI ITMX stage 1, ISI ITMX stage 2, ISI ETMY stage 1, SUS TMSY.

Leaving ISC_LOCK in DOWN. Starting maintenance early.

14:18 UTC Set observing mode to preventive maintenance.
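For reference, the GPS-to-UTC conversion quoted above can be checked with a few lines of Python. This is only a sketch: the function name `gps_to_utc` is made up for illustration, and it assumes the fixed 18 s GPS-UTC leap-second offset that has applied since 2017-01-01, so it is not valid for earlier dates.

```python
from datetime import datetime, timedelta, timezone

# GPS time counts seconds from 1980-01-06 00:00:00 UTC and ignores leap seconds.
GPS_EPOCH = datetime(1980, 1, 6, tzinfo=timezone.utc)

# Assumption: GPS has been 18 s ahead of UTC since 2017-01-01.
GPS_MINUS_UTC_SECONDS = 18


def gps_to_utc(gps_seconds, leap_seconds=GPS_MINUS_UTC_SECONDS):
    """Convert GPS seconds to a UTC datetime (hypothetical helper)."""
    return GPS_EPOCH + timedelta(seconds=gps_seconds - leap_seconds)


if __name__ == "__main__":
    # Last Seismon System Time value reported in this entry.
    print(gps_to_utc(1186191618).isoformat())
```

With that offset, 1186191618 GPS comes out as 2017-08-08 01:40:00 UTC, matching the value above.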

I don't quite know what happened with seismon. I updated the USGS client yesterday and restarted that code, but I didn't make any changes to the seismon code. I've restarted the seismon_run_info code that we are using and that seems to have fixed it. Maybe the seismon code crashed when I added geopy yesterday?
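Since the Uptime and Keep Alive values kept updating while System Time was frozen, a check on the age of System Time itself might catch this sooner. Below is a rough sketch of such a check, not something that exists in seismon: the function name `system_time_is_stale` and the 60 s threshold are assumptions, and the "now" value is simply the lockloss time (14:05 UTC) expressed in GPS seconds by adding 12 h 25 min to the last reported System Time.

```python
def system_time_is_stale(system_time_gps, now_gps, max_age_seconds=60):
    """Return True if the reported system time lags 'now' by more than the limit.

    Hypothetical helper: both arguments are GPS seconds, and the 60 s default
    threshold is an arbitrary choice for illustration.
    """
    return (now_gps - system_time_gps) > max_age_seconds


if __name__ == "__main__":
    last_system_time = 1186191618          # value where Seismon stopped updating
    lockloss_gps = last_system_time + 12 * 3600 + 25 * 60  # 14:05 UTC, derived
    print(system_time_is_stale(last_system_time, lockloss_gps))
```

At the time of the lockloss the System Time was about 12.4 hours old, so any threshold of this kind would have flagged it well before the earthquake arrived.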