[Jenne, Kiwamu, Vaishali with help from JimW and JeffK]

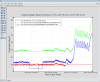

Continuing the locking effort from yesterday (36197), we managed to get the mode cleaner to lock. Here's a rundown of today's events:

1. We (Jenne and I) first aligned the MC2 REFL camera, because the beam was almost on the edge of the PD.

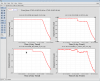

2. As this didn't fix the locking problem, we asked Kiwamu for help and looked at a number of parameters, such as filters, gain thresholds and ramp times. We also checked whether the suspensions were behaving correctly, and found that the MC2 M2 button had been turned off: it was not engaged at all. We probably should have checked the SDF differences first, but we know better now.

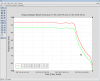

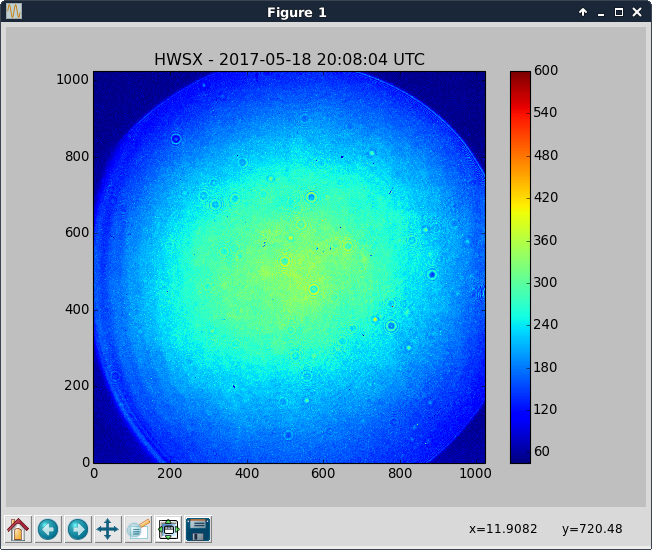

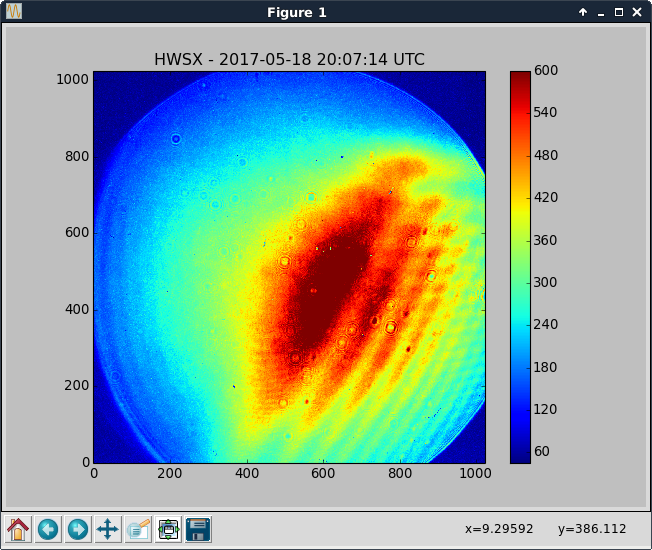

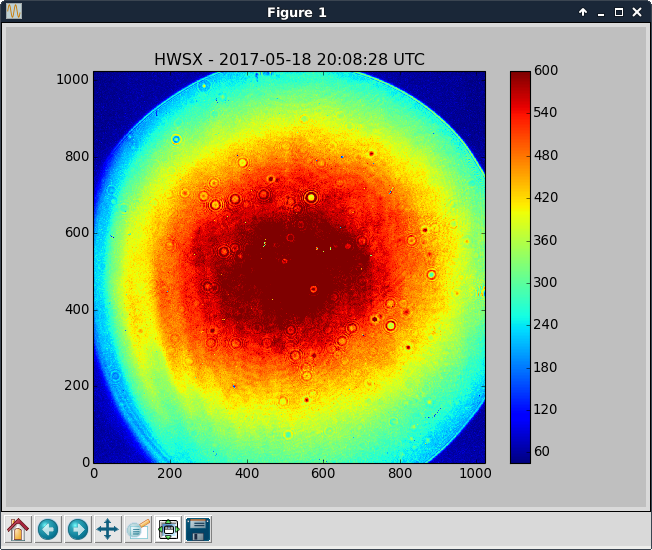

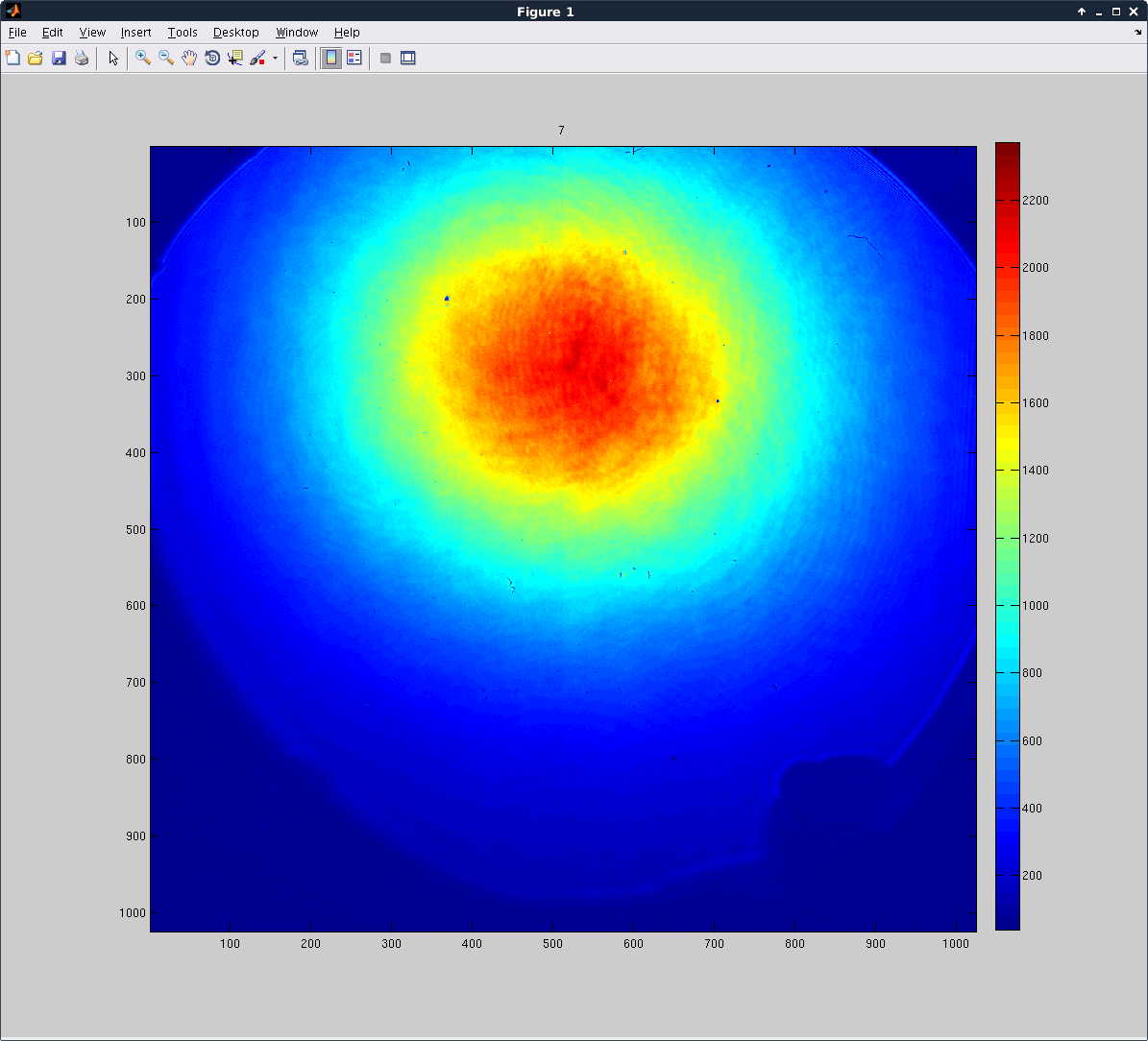

While we were aligning MC2 REFL, we noticed that MC2 Trans didn't look the way it used to. We tried to trace the beam and found that the camera was being illuminated by a ghost beam rather than the actual transmitted beam of the MC. Looking into the light pipe, we found a bright spot, and then found that the beam wasn't reaching the telescope at all. As we couldn't see anything on the viewer card, we turned the laser power up to 10 W and found the beam in the light pipe again, but only with the IR viewer.

Jenne then gently tapped the light pipe (the same pipe that had problems yesterday and was fixed) while I watched the bright spot. The spot didn't move at all, which leads us to believe that the beam might be hitting the edge of the table somewhere.

After we hypothesised out loud that this might be because the tables hadn't returned to their correct positions, JimW corrected us: the ISIs return to their positions on their own.

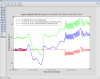

We then tried to change the axis of the mode cleaner to redirect the beam in the light pipe, but without much success.

We haven't yet solved the mystery of what happened to MC Trans, but we finished today's work with a mode cleaner that locks at both 2 W and 10 W.

Jenne will correct me in the comments if I have missed something or used the wrong names for mirrors!