After getting implausible values for the PI mode ring-ups earlier in the week (many orders of magnitude off from expected), I've refit the existing ring-ups, taken new ones, and gotten much more reasonable values. The earlier fits were off because I wasn't looking at long enough time stretches to get accurate ring-up data. Note that Mode 3 hasn't been unstable in many days, so I haven't been able to remeasure it.

| Mode # | Freq | Optic | tau | Q |
|---|---|---|---|---|
| 3 | 15606 Hz | ITMX | TBD | TBD |
| 26 | 15009 Hz | ETMY | 316 s | 15 M |
| 27 | 47495 Hz | ETMY | 92 s | 5 M |
| 28 | 47477 Hz | ETMY | 89 s | 5.2 M |
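For reference, here is a minimal sketch of how a ring-up time constant could be extracted from an amplitude time series. It is not the fit actually used for the table above. It assumes the mode amplitude grows as a pure exponential `a0 * exp(t / tau)` over the fitted stretch, and the function name `fit_ringup_tau`, the synthetic data, and the noise level are all made up for illustration.

```python
import numpy as np


def fit_ringup_tau(t, amplitude):
    """Fit amplitude ~ a0 * exp(t / tau) and return (tau, a0).

    Assumes a clean exponential ring-up (hypothetical model): the fit is a
    straight line through log(amplitude) versus time.
    """
    t = np.asarray(t, dtype=float)
    amplitude = np.asarray(amplitude, dtype=float)
    # log(amplitude) = log(a0) + t / tau, so the slope is 1 / tau
    slope, intercept = np.polyfit(t, np.log(amplitude), 1)
    return 1.0 / slope, np.exp(intercept)


# Synthetic ring-up for illustration only (not real detector data)
rng = np.random.default_rng(0)
true_tau = 316.0                      # seconds, chosen to resemble Mode 26
t = np.arange(0.0, 1200.0, 1.0)       # 20 minutes sampled at 1 Hz
noise = 1.0 + 0.02 * rng.standard_normal(t.size)  # 2% multiplicative noise
amp = 1e-3 * np.exp(t / true_tau) * noise

tau_fit, a0_fit = fit_ringup_tau(t, amp)
print(f"fitted tau = {tau_fit:.1f} s")
```

The fitted stretch here spans several time constants. A stretch much shorter than tau constrains the slope poorly, which is consistent with the earlier bad fits coming from too-short time windows.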

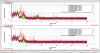

Mode 3 hasn't been unstable in many days, and Modes 27 & 28 are only unstable during the initial ~1-2 hours of the thermal transient. Attached are two 30-hour stretches of the damping output of the three modes during recent long locks (Mode 3 had no output, so I left it off), along with the simulated HOOM spacing, to give an idea of when the modes are ringing up enough to engage the damping loops. Left is a few days ago and right is the current lock. Note that Mode 26 looks continuously unstable during the 11/16 lock, but it could be that the damping is triggering below an actually unstable amplitude. Compare to the current lock, where we set the gain for Mode 26 to zero just after 19:30 (so there would be no damping output): the mode has remained stable and low since then with no need to damp.

The operators and I are currently turning off the damping gain and measuring ring-ups at different times during lock stretches to get gains at different stages of the thermal transient.

Current damping status of this 32 W lock, now > 25 hours long: no damping required after the first 2 hours. The attached plots again show the damping loop output over the past 26 hours, with HOOM spacing for reference.