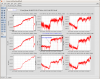

Here are some noise estimates for our current configuration (at 25 W). The PMC PZT noise was measured before Tuesday's fix; if I can get some time I will remeasure that tonight.

These curves are a combination of excess power measurements, gwinc curves for thermal and residual gas, and Evan Hall's noise budget code.
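As a rough illustration of how separate noise terms like these are usually combined into a total, here is a minimal sketch that adds amplitude spectral densities in quadrature. It assumes the terms are uncorrelated and evaluated in the same frequency bin and units. The function name and the numbers are made up for the example and are not taken from the noise budget code.

```python
import math

def quadrature_sum(*asds):
    """Combine uncorrelated noise terms (amplitude spectral densities
    in the same units, same frequency bin) into a total ASD."""
    return math.sqrt(sum(a * a for a in asds))

# Hypothetical values in one frequency bin, arbitrary units.
total = quadrature_sum(3.0, 4.0)
print(total)
```

If two terms are correlated, a quadrature sum misestimates the total, so this only applies to independent noise sources.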

Obvious things that are still missing are frequency noise and ESD DAC noise.

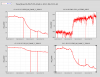

Looking at the noise budget above, we should be limited by shot noise around 100 Hz, so increasing the power should lower the shot noise and expose more of the underlying noise there. I decided to try 35 Watts to see what we can learn.
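For a sense of scale, here is a quick sketch of the expected change in shot noise. It assumes the shot-noise-limited sensitivity (in displacement-equivalent units) scales as one over the square root of the input power, and that nothing else changes with power (no thermal effects, same optical gain otherwise). The function name is just for illustration.

```python
import math

def shot_noise_scale(p_old_w, p_new_w):
    """Ratio of new to old shot-noise ASD, assuming ASD ~ 1/sqrt(P)."""
    return math.sqrt(p_old_w / p_new_w)

# Going from 25 W to 35 W input power.
scale = shot_noise_scale(25.0, 35.0)
print(f"shot noise ASD scales by {scale:.3f}")
```

Under these assumptions the shot noise drops by roughly 15%, so any noise sitting just below it around 100 Hz would only become modestly more visible.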

We locked at 50 Watts with similar settings 2 weeks ago without problems, but there were a few things to work out for locking at 35 Watts:

- PI has been OK; both TJ and Ed adjusted the phases for mode27.

- Some of our ASC tuning for 25 Watts doesn't work for 35 Watts:

- The change from POPX to REFL WFS didn't work at 35 Watts. For now I left the beam diverter open, which is probably hurting the noise around 100Hz.

- The hard loops are in a hybrid state right now that works and isn't too bad for noise. TJ and I put code in LOWNOISE_ASC that should do most of this; the only thing missing is that for DHARD_Y the guardian will still turn on the 40 Watt cutoff (FM1), which is not stable. JLP35 (FM8) is fine.

- The SRM loop is open, so I've touched the alignment by hand. I tried the signals we have been using at 25 Watts, but the result is not good.

- The sideband powers started to drop gradually over 30 minutes or so. The 45 MHz sideband powers were different by about a factor of 2 when I went into the LVEA to look at the OSA. Turning up the CO2X power brought the sideband buildups back to what they normally are, and improved the sideband imbalance slightly. We just took another step up in CO2X power (to 0.4 Watts), and TJ will check on the imbalances in a while. We can't find the ethernet adapter to download the data from the scope.

- The noise from 150 Hz up is more peaky at 35 Watts, confirming what we saw once before on Oct 28th. The noise around 100 Hz and below is not terribly different, so it is probably a useful exercise to take a look at a 35 Watt configuration (with SRCL and MICH FF tuned) and try to measure a noise budget here, especially for frequency noise.

- The low frequency noise being worse in the attached spectrum is probably not something to worry about; we should run a2l and tune feedforward before deciding if that noise is better or worse.