Tasked by Kiwamu and Stefan to turn random knobs in the hope of getting rid of the 100 Hz-1 kHz bump, I was able to gain 15 Mpc by adding a -0.05 offset to CSOFT pitch and yaw. I didn't get a chance to try every ASC knob because of the power glitch. We were at 75 Mpc before the IFO went down.

Here's a note on everything I tried (all times in PT):

3:40 Added/subtracted 0.02 counts to/from H1:LSC-MOD_RF45_AM_RFSET -- Nothing improved

3:46 Added +6 gain to PRC1 LSC -- A 27 Hz line became visible, but I later found that this line comes and goes, so this could well be a coincidence

3:52 Changed CO2X power to 1 W -- Quickly dropped the power back to nominal when I realized it would probably take a while to see the result

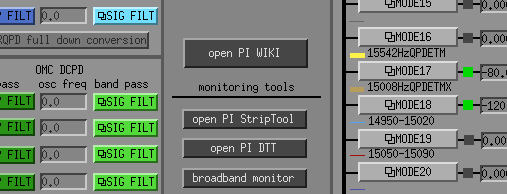

4:38-4:58 [In ASC land] Played with CSOFT pitch and yaw offsets -- Settled at -0.05 for both. This slightly improved the overall DARM spectrum but made 20-30 Hz worse. Gained 15 Mpc in range here (assuming the calibration isn't fooling me). Any more negative offset decreased AS90/POP90.

5:06 Added -10 offset to CHARD pitch -- Nothing improved

5:13 Added -0.07 offset to CSOFT pitch -- Nothing improved

5:14 Added -20 offset to CHARD yaw -- Nothing improved

5:17 Added offset to DHARD pitch -- Nothing improved

5:27 Added offset to DSOFT pitch -- Nothing improved

5:48 Added -0.05 offset to DSOFT yaw -- Increased AS90/POP90 but no obvious improvement in DARM

5:58 Added -25 offset to INP1_Y -- Improved AS90/POP90 but no apparent improvement in the DARM spectrum

6:08 Power glitch. Meh.

I also moved PR3 and changed something on SRC1 but didn't write down the details (it probably wasn't doing anything).

Before the power glitch I put everything back in place except for the CSOFT gain that worked. I turned off all the gain buttons I had turned on, except for CSOFT P and Y, but did not necessarily return the gain values to what they were. I have them all written down, though.

The analogue input voltages were never changed from the factory settings. The settings that were changed were the fault and warning settings for high and low temps. These should have remained after power cycling the chillers though.