Liyuan Zhang (CIT), PeterK, JasonO, RickS

LLO has had some pre-modecleaner (PMC) issues. S/N 08, their original unit, was removed due to a "glitchy" PZT, and the replacement unit, S/N 10, degraded quickly, apparently due to hydrocarbon contamination.

During the past week we have assembled a setup in the "Triples" lab (upstairs in the Staging building) to evaluate the performance of PMCs and, eventually, to repair them.

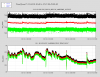

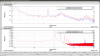

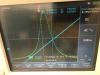

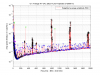

The setup is shown in the photographs above. Basically, we mode-matched a 2-W Innolight NPRO to the cavities, which had been removed from the tanks. We used a Pcal integrating-sphere-based power sensor to measure the incident, transmitted, reflected, and leakage power (through the two flat mirrors, M3 and M4). We have ordered an amplitude-modulating EOM for transfer-function measurements of the cavity bandwidth, but for these tests we just measured the FWHM of the Airy profile by sweeping the laser frequency.
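As a rough sketch of how a frequency-sweep FWHM turns into a loss number, the Python below uses standard Fabry-Perot relations: finesse F = FSR/FWHM, total round-trip loss ≈ 2π/F in the high-finesse limit, and cavity pole = FWHM/2 (the corner frequency an amplitude-modulation transfer function would show). The round-trip length, the mirror transmissions, and the assumption that the remaining loss is shared equally among the four mirrors are illustrative inputs, not values from these measurements, and this is not necessarily how the numbers in the attached .pdf were derived.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def fsr_from_round_trip_length(round_trip_m: float) -> float:
    """Free spectral range (Hz) of a ring cavity with the given round-trip length."""
    return C / round_trip_m


def finesse_from_sweep(fsr_hz: float, fwhm_hz: float) -> float:
    """Finesse from the FWHM of the Airy transmission peak: F = FSR / FWHM."""
    return fsr_hz / fwhm_hz


def cavity_pole_hz(fwhm_hz: float) -> float:
    """Cavity pole (half width at half maximum): FWHM / 2.

    This is the corner frequency expected in an amplitude-modulation
    transfer-function measurement of the cavity.
    """
    return fwhm_hz / 2.0


def total_round_trip_loss(finesse: float) -> float:
    """Total fractional round-trip power loss (mirror transmissions plus
    scatter/absorption), using the high-finesse approximation 2*pi / F."""
    return 2.0 * math.pi / finesse


def excess_loss_per_mirror(total_loss: float, transmissions: list[float],
                           n_mirrors: int = 4) -> float:
    """Subtract the known mirror transmissions (assumed inputs) from the total
    round-trip loss and share the remainder equally among n_mirrors
    (equal sharing is an assumption, not a measurement)."""
    return (total_loss - sum(transmissions)) / n_mirrors
```

In use, one would pass the measured FWHM together with the cavity's FSR (from its round-trip length) and the nominal coupler transmissions; any flat-mirror leakage that is not subtracted ends up counted as excess loss.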

Removing the PMC from the tank turned out to be pretty challenging because the PMC bodies were stuck to the Viton sheets at the bottom of the tanks. We devised a method for removing them that works pretty well: use the tank mounting blocks, the PMC body clamping bolts, and some Newport or Thorlabs "B2" bases to put upward pressure on the PMC. On the one cavity we tested, it released within a minute or two (see attached photo).

The measured performance of the "glitchy" PMC is broadly consistent with what Liyuan measured at Caltech: here we measured average losses of about 80 ppm per mirror. We don't yet have an uncertainty estimate for this setup.

The estimated loss for the "dirty" PMC is about 1400 ppm per mirror.

Measurement details are in the .pdf file, attached.

We plan to repeat these measurements and estimate uncertainties. However, they clearly indicate that the "dirty" cavity is much more lossy than the "glitchy" cavity: about 15% of the incident power is lost inside the "dirty" cavity, vs. about 1% for the "glitchy" cavity.
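The "lost inside the cavity" fraction follows from a power budget: whatever incident power does not appear in the reflected, transmitted, or leakage beams has been absorbed or scattered. The minimal sketch below shows that accounting and, assuming equal loss on every mirror and a known output-coupler transmission (the hypothetical parameter `t_out`), converts the lost power to a per-mirror loss through the circulating power P_circ = P_trans / T_out. It illustrates the bookkeeping only and is not necessarily the analysis used in the attached .pdf.

```python
def fractional_internal_loss(p_in: float, p_refl: float, p_trans: float,
                             p_leak: list[float]) -> float:
    """Fraction of incident power not found in the reflected, transmitted, or
    leakage beams, i.e. absorbed or scattered inside the cavity."""
    if p_in <= 0:
        raise ValueError("incident power must be positive")
    return (p_in - p_refl - p_trans - sum(p_leak)) / p_in


def loss_per_mirror_from_budget(p_lost: float, p_trans: float, t_out: float,
                                n_mirrors: int = 4) -> float:
    """Per-mirror loss, assuming equal loss on every mirror and a known
    output-coupler transmission t_out (hypothetical input).

    The circulating power is P_circ = P_trans / t_out, and each mirror removes
    loss * P_circ per round trip, so P_lost = n_mirrors * loss * P_circ.
    """
    p_circ = p_trans / t_out
    return p_lost / (n_mirrors * p_circ)
```

Both functions take powers in the same (arbitrary) units, so the Pcal sensor readings can be used directly; only ratios matter.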

Visual inspection of all four mirrors (from the outside) on both cavities did not reveal any noticeable contamination or damage.