The attached files show the current status of the front end code.

ER8 Day 11. No restarts reported

(All times UTC)

Summary:

Evan and Stefan were working on recentering the AS WFS when I arrived. When they were done, Evan cleared out his changes in the SDF, aside from some ASC diffs that resulted from the dark offset scripts. I accepted the ODC Master changes from alog 20915.

Guardian brought the IFO to full lock very quickly, Evan added some DCPD whitening, and it has stayed locked since.

Locking:

Intent bit set at 10:48 UTC, with hopes for a long lock.

HWS SLED IX is ON

Having the HWS SLED on should not affect the interferometer. I added this to the guardian because we don't want the SLED running indefinitely, but it is easy to turn it on and forget. If the warning message is confusing, we could remove it or move it to a less obtrusive place.

Presumably this check is coming from the DIAG_MAIN node. I think the guiding principle for that node should be that all of its diagnostic checks are actionable; in other words, someone should have to get out of their chair and deal with the issue to make the notification stop. If the plan is to leave the HWS SLED on, or if it is not critical to shut it off, then the check should be removed from DIAG_MAIN.

Maybe we can add a second DIAG node for diagnostics that are not immediately actionable, but my guess is that it will just be ignored, since by definition nothing there will be critical.

Stefan, Evan

We made another attempt at using 90 MHz to center WFS AS B.

This time we turned up the whitening gain to 45 dB, which gave a healthy number of counts on each quadrant. We then redid our dark offsets, which were significant at this whitening gain. [We actually redid the dark offsets for all the REFL and AS WFS signals using the script in the userapps repo.]

Then in DRMI, we phased each quadrant to put most of the power in I. At this point we saw that the centering signals provided by these 90 MHz quadrants (pitch and yaw) were slightly different from the dc centering signals for AS B, so we switched the centering over to these signals (a relative gain of −0.003 is required between these 90 MHz signals and the dc signals). Things seemed fine in DRMI (the sideband buildups all changed slightly, but it was hard to say whether they were better or worse), but when we tried the CARM reduction sequence, the new centering ran away on the DARM WFS step.

So instead, we went to full lock at 2 W with the old centering scheme and switched over again to the new 90 MHz AS B centering. Things again seemed fine.

We weren't quite sure what to do about the BS/SRM matrix elements. In the end we tried using AS B 36 I&Q only to control these loops (i.e., no mixture with AS A 36), and this was fine at 2 W, but we lost lock immediately when trying to power up.

C. Cahillane, D. Tuyenbayev

In a previous alog (alog 20946) I showed preliminary carpet plots of ER7 strain uncertainty based on changes in the calibration parameters |kappa_tst|, φ_kappa_tst, |kappa_pu|, φ_kappa_pu, kappa_C, and f_c. Here are some updated plots (Plots 1-6). The data is now low-noise and dewhitened, which gives much nicer, more accurate results.

I have been working to reproduce Darkhan's carpet plots from T1500422. The method he uses to find the error in Delta_L_ext is

$$\delta \Delta L_\mathrm{ext} = \frac{C_\mathrm{true} / (1 + G_\mathrm{true})}{C / (1 + G)}$$

where C_true and G_true are the quantities in which kappa_tst, kappa_C, and f_c are varied. The method I use to find the error in strain is

$$\delta\,\mathrm{strain} = \frac{(1/C_\mathrm{true})\, d_\mathrm{err} + A_\mathrm{true}\, d_\mathrm{ctrl}}{(1/C)\, d_\mathrm{err} + A\, d_\mathrm{ctrl}}$$

Plot 7 is my reproduction of Darkhan's method of computing the error from changes in kappa_tst. Plot 1 is my method of computing the error from changes in |kappa_tst|. They should be the same. They aren't.

We are searching for potential reasons behind the structure found in Darkhan's plot. Something odd may be going on at the UGF of the CLG, causing the low magnitude error near 65 Hz and the low phase error near 35 Hz. My plot, on the other hand, has no low-error region in phase (in fact it has a maximum near 35 Hz) and has a tilted low magnitude error region between 30 and 40 Hz. I am investigating this difference now.
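As a cross-check of the two ratio definitions above, here is a minimal Python sketch that evaluates both at a single frequency. It assumes C, G, A, d_err and d_ctrl are already available as complex numbers for each frequency bin; the function names are hypothetical, and this is not the strainUncertainty.m code.

```python
import cmath
import math


def delta_L_ext_ratio(C, G, C_true, G_true):
    """Ratio of C_true/(1 + G_true) to C/(1 + G) at one frequency (complex)."""
    return (C_true / (1 + G_true)) / (C / (1 + G))


def strain_ratio(d_err, d_ctrl, C, A, C_true, A_true):
    """Ratio of strain rebuilt with perturbed ('true') sensing C_true and
    actuation A_true to strain rebuilt with the nominal C and A.
    All inputs are complex values at a single frequency."""
    h_true = d_err / C_true + A_true * d_ctrl
    h_nominal = d_err / C + A * d_ctrl
    return h_true / h_nominal


def mag_phase_deg(ratio):
    """Split a complex ratio into (magnitude, phase in degrees)."""
    return abs(ratio), math.degrees(cmath.phase(ratio))


if __name__ == "__main__":
    # Unperturbed model: the ratio is 1 with zero phase.
    print(mag_phase_deg(strain_ratio(1 + 1j, 2 - 0.5j, 3, 0.5j, 3, 0.5j)))
    # Doubling C barely moves the ratio when the A*d_ctrl term dominates.
    print(mag_phase_deg(strain_ratio(1, 1000, 1, 1, 2, 1)))
```

In the second example the A*d_ctrl term is 1000 times the d_err/C term, so doubling C changes the ratio by only about 0.05%; this is one way a sensing-side parameter can appear to have no effect if the two terms are weighted incorrectly.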

23:00 (16:00) Darkhan and Travis at end Y running PCAL measurements. Leo running charging measurements for ETMX.

23:36 (16:36) Kiwamu to electronics room to measure transfer function of whitening filter

23:40 (16:40) Evan to CER to run measurement with network analyzer

23:41 (16:41) Darkhan and Travis done at end Y, moving to end X

00:09 (17:09) Nutsinee to TCS X to take picture of control box

00:15 (17:15) Nutsinee back

00:44 (17:44) Evan done, Leo done

01:18 (18:18) Travis and Darkhan done at end X

01:37 (18:37) Travis and Darkhan back

02:13 (19:13) Kiwamu and I start locking. Trouble locking on DRMI. Ran through initial alignment starting with CHECK_IR. Lost lock engaging ASC WFS in LOCK_DRMI; Kiwamu had to revert phase settings for AS 36. Lock loss on bounce mode.

03:17 (20:17) h1fw2 crashed and restarted

04:48 (21:48) Locked on Low Noise, Darkhan running calibration measurements

05:22 (22:22) Darkhan done, Stefan and Evan starting wiggling cables test

05:26 (22:26) Kiwamu to CER to clean up previous work. Kiwamu is back.

06:22 (23:22) h1fw2 crashed and restarted

07:00 (00:00) Handing off to TJ; Evan and Stefan are investigating RF

Other:

End Y dust alarms. Smelled a lot of smoke in the hallway; went up to the roof and didn't spot any fires.

J. Kissel, K. Kawabe

Similar to what is shown and briefly mentioned in LLO aLOG 18406, I've done a comprehensive transfer function study between the DAC and ADC DuoTone timing signals from the following IO chassis: h1lsc0 (which houses the ADC for the OMC DCPDs); h1susex and h1susey (which house the DACs for the ETM QUADs); h1iscex and h1iscey (which house the ADCs for the PCAL RXPDs); and h1oaf0 (which receives the DARM_ERR and DARM_CTRL channels from the OMC model over IPC).

Recall that these DuoTone signals are merely *checks* on the system that actually provides the timing signals for the DAC/ADC cards, which is the 1 PPS signal from the local timing fanout in the building (which in turn receives its 1 PPS from the GPS-synchronized timing master in the corner station).

The results indicate that, although there is an offset of a few tens of microseconds between these front ends, the offsets appear not to *differ* by more than a few hundred nanoseconds, and typically by only tens of nanoseconds. This is consistent with what Shivaraj found when comparing his l1iscex and l1iscex against l1oaf0. Recall that our requirement is that the timing uncertainty be no greater than 25 [us], so at least the DuoTone signals between IO chassis fall well below this. To confirm the actual front-end timing, we would then need to compare this DuoTone against the "digital world" 1 PPS, as Keita has done for the ADC and DAC DuoTone signals of all the chassis mentioned above in LHO aLOG 20962. His aLOG also indicates a ~100 [ns] difference between chassis, on top of the ~7 [us] offset. So far, so good in timing land.

Details:

For all measurements, I used the h1lsc0 ADC and DAC DuoTone signals as the reference (TF denominator) and the others as the response (TF numerator). Below are the phase relationships at 960 [Hz] (the results are the same, within the precision stated, for the 961 [Hz] line). In the column headers, xxxx stands for the chassis named in each row.

Phase [deg]:

| Chassis | xxxx ADC / LSC0 ADC | xxxx DAC / LSC0 ADC | xxxx ADC / LSC0 DAC | xxxx DAC / LSC0 DAC |
|---|---|---|---|---|
| h1lsc0 | n/a | -26.42 | +26.42 | n/a |
| h1susex | +5.14 | -21.27 | +26.45 | +5.14 |
| h1susey | +5.13 | -21.28 | +26.44 | +5.13 |
| h1iscex | +0.008 | -21.29 | +26.45 | +5.13 |
| h1iscey | +0.028 | -21.27 | +26.47 | +5.14 |
| h1oaf0 | -0.013 | -21.34 | +26.43 | +5.07 |

We can turn these into an equivalent delay (or advance) between the two front-end timing signals with

$$\tau\,[\mu\mathrm{s}] = 10^6 \cdot \frac{(\pi/180)\,\phi_\mathrm{deg}}{2\pi \cdot 961\,[\mathrm{Hz}]}$$

Delay [us]:

| Chassis | xxxx ADC / LSC0 ADC | xxxx DAC / LSC0 ADC | xxxx ADC / LSC0 DAC | xxxx DAC / LSC0 DAC |
|---|---|---|---|---|
| h1lsc0 | n/a | -76.4 | 76.4 | n/a |
| h1susex | 14.9 | -61.5 | 76.5 | 14.9 |
| h1susey | 14.8 | -61.6 | 76.5 | 14.8 |
| h1iscex | 0.023 | -61.6 | 76.5 | 14.8 |
| h1iscey | 0.081 | -61.5 | 76.6 | 14.9 |
| h1oaf0 | -0.038 | -61.7 | 76.5 | 14.7 |

Templates live in /ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/ER8/H1/Measurements/Timing/:

2015-08-27_H1LSC0_to_CALCS_DuoTone_TFs.xml

2015-08-27_H1LSC0_to_ISCEND_DuoTone_TFs.xml

2015-08-27_H1LSC0_to_SUSEND_DuoTone_TFs.xml

I attach an example of one of these.
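The phase-to-delay conversion used for the tables can be written as a one-line Python function. This is a minimal sketch; the function name and the choice of the ADC/LSC0 ADC column as input are illustrative, and the phases are copied from the phase table in this entry.

```python
import math


def phase_to_delay_us(phase_deg, freq_hz=961.0):
    """Convert a TF phase (degrees) at freq_hz into an equivalent time
    offset in microseconds: 1e6 * (pi/180) * phase / (2*pi*f)."""
    return 1e6 * math.radians(phase_deg) / (2 * math.pi * freq_hz)


# Phase (deg) of each chassis' ADC DuoTone relative to the h1lsc0 ADC DuoTone.
ADC_VS_LSC0_ADC = {
    "h1susex": 5.14,
    "h1susey": 5.13,
    "h1iscex": 0.008,
    "h1iscey": 0.028,
    "h1oaf0": -0.013,
}

for chassis, phase in ADC_VS_LSC0_ADC.items():
    print(f"{chassis}: {phase_to_delay_us(phase):+.3f} us")
```

At 961 Hz one degree of phase corresponds to about 2.89 us, which is why phases of a few hundredths of a degree translate into tens of nanoseconds.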

Sudarshan, Darkhan

Overview

Today we took PCALX to DARM and PCALY to DARM TF measurements.

These measurements will be used to assess time delays/advances between Pcal excitation/readout channels vs. DARM_IN1.

Details

Earlier today we took PCAL(X|Y) to DARM TF measurements for estimating the timing of the Pcal channels vs. DARM_IN1. We noticed that today's earlier measurements, "2015-08-27_PCALX2DARMTF_TIMING.xml" and "2015-08-27_PCALY2DARMTF_TIMING.xml", used log-spaced frequency vectors. Since we got another opportunity to measure these transfer functions later in the day, we repeated the earlier measurements, this time with a linearly spaced frequency vector.
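One common way to turn a transfer function like PCAL to DARM into a delay estimate is to fit the slope of its unwrapped phase against frequency; a linearly spaced frequency vector gives evenly weighted points across the band for such a fit. The sketch below is a plain least-squares fit under the assumption of a pure time delay plus a constant phase. It is not necessarily how the calibration group analyzes these measurements, and the function name is hypothetical.

```python
def fit_delay_s(freqs_hz, phases_deg):
    """Least-squares fit of phase_deg = phi0 - 360 * f * tau.

    Returns tau in seconds (positive = delay). Phases must already be
    unwrapped; a pure time delay plus a constant offset is assumed."""
    n = len(freqs_hz)
    if n < 2:
        raise ValueError("need at least two frequency points")
    mean_f = sum(freqs_hz) / n
    mean_p = sum(phases_deg) / n
    sxx = sum((f - mean_f) ** 2 for f in freqs_hz)
    sxy = sum((f - mean_f) * (p - mean_p) for f, p in zip(freqs_hz, phases_deg))
    slope_deg_per_hz = sxy / sxx
    return -slope_deg_per_hz / 360.0


# Synthetic check: a 61.5 us delay plus a 5 degree constant offset.
freqs = [10.0 * k for k in range(1, 51)]
phases = [5.0 - 360.0 * f * 61.5e-6 for f in freqs]
print(f"{fit_delay_s(freqs, phases) * 1e6:.2f} us")
```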

So the most recent measurements, taken with linearly spaced frequency vectors, are (note that they also include the earlier log-spaced measurement TFs and coherences as ref0 and ref1):

All 4 measurements have been uploaded to calibration SVN (r1161):

CalSVN/aligocalibration/trunk/Runs/ER8/H1/Measurements/PCAL/2015-08-27_PCALX2DARMTF_linspace.xml

CalSVN/aligocalibration/trunk/Runs/ER8/H1/Measurements/PCAL/2015-08-27_PCALX2DARMTF_TIMING.xml

CalSVN/aligocalibration/trunk/Runs/ER8/H1/Measurements/PCAL/2015-08-27_PCALY2DARMTF_linspace.xml

CalSVN/aligocalibration/trunk/Runs/ER8/H1/Measurements/PCAL/2015-08-27_PCALY2DARMTF_TIMING.xml

Charge measurements were done on both ETMs. It seems that ETMY changed the sign of its charging after the bias sign on ETMY was changed (see 20387), while the ETMX charging is the same (as is its bias sign). Now (since Aug 10) both the ETMX and ETMY biases are -9.5 V. Plots are attached.

Since we turn the ESD in X off during a lock stretch, could it be that ETMX has been used less since the start of ER8?

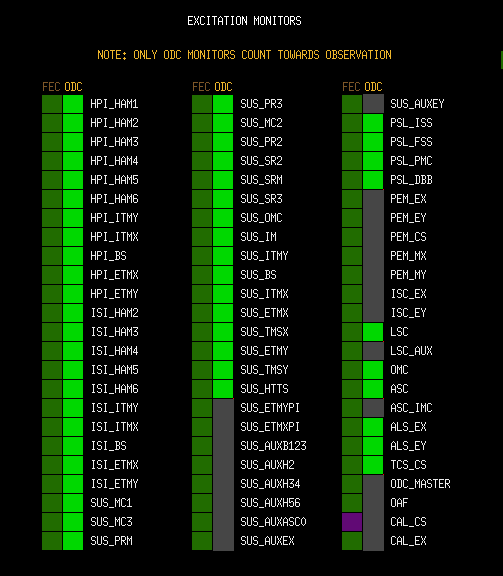

New EXCITATION_OVERVIEW screen has been created:

$USERAPPS/sys/common/medm/EXCITATION_OVERVIEW.adl

Hopefully this can help in tracking down excitations that could be preventing the system from entering READY:

The two columns show the front-end excitation monitors (FEC, left) and the ODC monitors (ODC, right).

NOTE: ONLY ODC monitors count towards OBSERVATION READY at the moment.

The FEC monitors are there for reference, and for completeness.

The EXCITATIONS box in the OBSERVATION_OVERVIEW screen now contains a link to this screen.

I updated this screen to remove the PEM_M* and ODC_MASTER checks, and added the missing CAL_EY.

Evan, Sheila

While the IFO was down for some calibration measurements, we made another attempt at phasing AS36.

We first redid the dark offsets; this was an important step. Then we locked the bright Michelson with 22 W of input power.

We steered the beam onto each quadrant of AS_A by maximizing the DC counts, then phased 36 to minimize Q. There were several things that made this seem much more promising than any of our previous attempts to phase these WFS:

Both of these things are firsts for these WFS as far as I'm aware, so this seemed like real progress.
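Phasing a demodulated WFS quadrant to minimize Q amounts to rotating the (I, Q) pair so that the signal lies along I. A minimal Python sketch of that rotation follows; the sign convention is one common choice and is an assumption here, so front-end phase values such as those recorded in this entry may differ from it in sign or by 180 degrees. Function names are hypothetical.

```python
import math


def rotate_iq(i, q, phase_deg):
    """Rotate a demodulated (I, Q) pair by phase_deg (one common convention)."""
    phi = math.radians(phase_deg)
    i_rot = i * math.cos(phi) + q * math.sin(phi)
    q_rot = -i * math.sin(phi) + q * math.cos(phi)
    return i_rot, q_rot


def phase_to_null_q(i, q):
    """Phase (degrees) that moves all of the (I, Q) signal into I, with I > 0,
    for the convention used in rotate_iq."""
    return math.degrees(math.atan2(q, i))


# Example: a signal split equally between I and Q needs a 45 degree rotation.
phase = phase_to_null_q(1.0, 1.0)
print(phase, rotate_iq(1.0, 1.0, phase))
```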

We started to do the same procedure for AS_B 36, but after we phased the first quadrant the phase jumped. Kiwamu was in the rack working on the calibration measurements of the DC PDs at this time and reported that he had plugged a signal into the patch panel. After this I looked at the A signals again, and they no longer made sense. I tried repeating the procedure above for A, and found that the phases needed to minimize Q with the light maximized on each quadrant had changed by -15, -10, 0, and -20 degrees for quadrants 1, 2, 3, and 4 respectively.

It seems like we need to make a thorough check of the HAM6 racks before we continue trying to make sense of AS WFS.

Just for the record the momentarily sane phasings were:

| segment | phase (degrees) |
|---|---|
| 1 | -155 |
| 2 | -175 |
| 3 | -165 |
| 4 | -158 |

Stefan and I did a wiggling test of the cables in the HAM6 rack while the interferometer was locked.

We watched AS90I, AS45Q, and all the quadrants of the AS WFS (36 and 45 MHz). The only thing we saw was a 5% fluctuation in AS90I in response to the 90 MHz LO cable being wiggled. [Although once the beam diverter is closed and the AS90 signal is attenuated, the response to wiggling is much stronger—something like 20% to 40% fluctuation.]

C. Cahillane

I have managed to use ER7 data to produce preliminary carpet plots of frequency vs. strain magnitude and phase based on the Uncertainty Estimation paper T1400586. The code that generates these plots may be found here: /ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/S7/Common/MatlabTools/strainUncertainty.m

Darkhan recently did a similar study looking at the error in magnitude and phase of Delta_L_ext when kappa_C, kappa_A, and f_c changed. My study currently looks at the error in magnitude and phase of strain when the magnitude and phase of kappa_tst and kappa_pu vary, as well as kappa_C and f_c. (Recall that in general kappa_tst and kappa_pu can be complex.)

Right now I believe there is a serious error in the code, because the plots of the optical gain (plot 5) and the cavity pole (plot 6) show absolutely no error in strain even when these values differ greatly from their expected values. The cavity pole varies by up to ±100 Hz, yet the strain does not change by more than 1%. The result is robust: I have calculated the strain using two independent methods and still get these odd results. These are my results right now, and this is why I call these plots preliminary.

I do believe the magnitude kappa_tst (plot 1), phase kappa_tst (plot 2), magnitude kappa_pu (plot 3), and phase kappa_pu (plot 4) plots look sensible. Any phase in kappa_tst is generally intolerable for high-frequency phase information, while phase in kappa_pu yields high error in low-frequency phase information. Since we do not expect any phase component at all in kappa_tst and kappa_pu, this makes sense. Also, the magnitudes of kappa_tst and kappa_pu must be tracked carefully at high and low frequency respectively: 10% errors in these magnitudes are enough to give more than 9% errors in strain magnitude.

Note that these results use ER7 data (GPS_start = 1116990382). I'll soon be able to get ER8 data when it is all available (go calibration week!)

I believe my "no uncertainty" issue with the optical gain and cavity pole may be related to this graph. The 1/C*d_err term in blue is completely overwhelmed by the A*d_ctrl term. My reconstruction of hMag may be improperly weighting these two factors. That is why the Actuation terms (kappa_tst and kappa_pu) have sensible errors, but the Sensing terms (kappa_C and f_c) don't have any effect whatsoever.

I have posted some less preliminary plots of the ER7 data. I have now dewhitened the data, which has properly scaled the gain such that the inverse sensing term is no longer overwhelmed by the actuation term. The spikes everywhere are due to a single-pass FFT I have taken. I am working on a proper FFT algorithm now.

I have created a DTT template that makes it easier to decide when it's okay to turn on more OMC DCPD whitening.

Evan wrote an alog some time ago about the new OMC DCPD whitening on/off guardian states (alog 20578), and Cheryl and Evan made some notes on when it's okay to go to these new states (alog 20787).

As of right now, the guardian will automatically turn on one stage of whitening, but we get better high-frequency noise performance if we add a second stage. However, if some mode (e.g. a violin mode) is rung up, then we can't add the second stage of whitening without being in danger of saturating the ADC. The new DTT template should help decide when it's okay to add the second stage.

The template is /ligo/home/ops/Templates/dtt/DCPD_saturation_check.xml (screenshot below). The template should be run after we have arrived at NOMINAL_LOW_NOISE for the main lock sequence. If the dashed RMS lines are below the green horizontal line, it's okay to add the second stage of whitening.

To engage the second stage of DCPD whitening:

Open the full list of guardian states for the OMC_LOCK guardian, and select "ADD_WHITENING". It will take a minute or two, and automatically return to the nominal "READY_FOR_HANDOFF" state.

Could you explain the math and logic a little more?

I would have thought that an RMS of 3000 cts is as high as we want to go. Increasing the RMS by a factor of 10 would make it so that it's always saturating, i.e. not OK. Or isn't this IN1 channel the real ADC input?

Yes, 3000 ct rms = 8500 ct pkpk = too many counts to add a second stage of whitening.

We run with about 10 mA dc on each DCPD, which shows up as 13000 ct or so of dc on the IN1 channels. That means we have something like 19000 ct of headroom before the ADCs saturate on the high side (+32 kct). Assuming the ac fuzz is symmetric about the mean, saturation will certainly occur if the ac is greater than 38000 ct pkpk with two stages of whitening, or 3800 ct pkpk with one stage of whitening.

That's why the criterion I've been using for turning on a second stage of whitening is to look at the IN1 channels and verify that the ac is less than 3000 ct pkpk, or 1000 ct rms, when only one stage of whitening is on. If we find the DCPDs saturating too often with two stages, we should be even more restrictive.
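The headroom argument above can be written out in a few lines of Python. This is a sketch under stated assumptions: that the second whitening stage multiplies the relevant ac content by about 10 (as the 38000 vs. 3800 ct numbers imply), that the positive rail is about +32000 ct, and that the rms-to-peak-to-peak factor of 2*sqrt(2) holds, which is exact only for a sinusoid. Real noise has larger excursions, so treat these thresholds as optimistic. Names and constants are illustrative.

```python
import math

ADC_POSITIVE_RAIL_CT = 32000  # "+32 kct" as quoted above (approximate)
SECOND_STAGE_GAIN = 10.0      # assumed ac gain of the extra whitening stage


def max_pkpk_ct(dc_ct, rail_ct=ADC_POSITIVE_RAIL_CT):
    """Largest peak-to-peak ac that fits between the dc level and the rail,
    assuming the ac is symmetric about the mean."""
    return 2.0 * (rail_ct - dc_ct)


def sine_rms_to_pkpk(rms_ct):
    """Peak-to-peak amplitude of a pure sinusoid with the given rms."""
    return 2.0 * math.sqrt(2.0) * rms_ct


def ok_to_add_second_stage(pkpk_one_stage_ct, dc_ct, gain=SECOND_STAGE_GAIN):
    """True if the ac measured with one stage, scaled up by the second
    stage's gain, would still fit inside the ADC headroom."""
    return pkpk_one_stage_ct * gain < max_pkpk_ct(dc_ct)


dc = 13000  # dc counts on IN1 for ~10 mA per DCPD, as quoted above
print(max_pkpk_ct(dc))                      # two-stage pkpk limit
print(max_pkpk_ct(dc) / SECOND_STAGE_GAIN)  # equivalent one-stage limit
print(round(sine_rms_to_pkpk(3000)))        # rms -> pkpk for a sine
print(ok_to_add_second_stage(3000, dc))     # the 3000 ct pkpk criterion
```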

Evan Stefan Daniel

The second EOM driver was installed in the CER using the 9 MHz control and readback channels. The first attached plot shows the DAQ readback signals. Both drivers show similar noise levels for the in-loop and out-of-loop sensors, and they are coherent with each other as well as with ASC-AS_C! The in-loop noise is clearly lower, which would indicate that the signal is suppressed to the sensor noise. The measured out-of-loop noise level is also a factor of 4 higher than in the shop setup.

The second plot shows the same traces, but this time the IFR is feeding the EOM driver in the CER. As expected, its out-of-loop noise level is now consistent with the measurements in the shop and no longer coherent with the unit in the PSL.

We were starting to suspect that we are looking at down-converted out-of-band noise...

Using a network analyzer, we took the following measurements:

The first four of these are shown in the attached plot [the OCXO has been multiplied by 5 in frequency for the sake of comparison]. The message is that the 45.5 MHz in the IFO distribution system has huge, broad wings out to 2 MHz away from the carrier. These are not seen on the IFR, the harmonic generator on the bench, or the 9.1 MHz in the distribution system.

Although the EOM driver still works to suppress some of the RFAM below 50 kHz, the broad wings still contribute significantly to the rms; most of it is accumulated above 200 kHz offset from the carrier. This is shown in the second attachment.
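The statement that most of the rms accumulates above 200 kHz offset comes from integrating the noise spectrum. Below is a minimal sketch of a cumulative rms integrated from the highest offset frequency downward. It assumes a one-sided amplitude spectral density sampled on ascending frequency bins and uses a simple rectangle rule; it is not the tool used to make the attached plot, and the function names are hypothetical.

```python
import math


def cumulative_rms_from_top(freqs, asd):
    """Cumulative rms integrated from the highest frequency down.

    freqs: ascending bin frequencies; asd: amplitude spectral density per bin.
    Entry k of the result is the rms contributed by bins k..end."""
    n = len(freqs)
    out = [0.0] * n
    acc = 0.0
    for k in range(n - 1, -1, -1):
        # Bin width: distance to the next bin (the last bin reuses the previous width).
        if k < n - 1:
            df = freqs[k + 1] - freqs[k]
        else:
            df = freqs[k] - freqs[k - 1] if n > 1 else 1.0
        acc += asd[k] ** 2 * df
        out[k] = math.sqrt(acc)
    return out


def fraction_of_ms_above(freqs, asd, f_cut):
    """Fraction of the total mean-square contributed by bins at or above f_cut."""
    cum = cumulative_rms_from_top(freqs, asd)
    total = cum[0] ** 2
    for f, r in zip(freqs, cum):
        if f >= f_cut:
            return r ** 2 / total
    return 0.0
```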

I looked again at some rf spectra in the CER.

These peaks appear on every output of the harmonic generator, even when it is not driving any distribution amplifiers (just a network analyzer).

These peaks also appear even when the harmonic generator is driven by +12 dBm of 9.1 MHz from an IFR (not from the OCXO + distribution amplifier).

This suggests we should focus on the harmonic generator or its power supply.

Patrick, Sheila, Jenne, Eric

For the first part of the test, we injected our fiducial CBC waveform (the same one used in ER7) and tried raising the LIMIT value on the hardware injection block to address the saturation problems observed in ER7. During ER7 the LIMIT was 200; we raised it to 400.

The first injection did not go through:

1124601535 1 1.000000 cbctest_1117582888_ intent bit off, injection canceled

Patrick, Sheila, and Jenne tried to turn on the intent bit, but there was some sort of problem, which will be alogged separately. As a temporary workaround, we turned off the tinj intent-bit check and injected again:

1124602724 1 1.000000 cbctest_1117582888_ successful

Patrick determined that the injection produced a maximum |amplitude| of 15 counts coming out of the injection block, which seemed to indicate that the original LIMIT value of 200 was sufficient. However, an alarm went off to indicate that there was saturation at ETMY. Thus, the saturation problem cannot be solved by tinkering with the INJ block in MEDM; rather, the problem is occurring downstream on the ETM actuators. We request that Jeff K, Adam M, et al. look into options for avoiding saturation at the ETMs.

Next we tried a blind injection using the new blind injection code. The blind injection code does not log injections in EPICS, so they are not automatically picked up in the segment database.

1124603111 1 1.000000 cbctest_1117582888_ successful

The blind injection was clearly visible. The ETM saturation warning went off again. The injection was logged correctly in the blind injection log, blindinj_H1.log:

current time = 1124603049...

Attempting: awgstream H1:CAL-INJ_BLIND_EXC 16384 /ligo/home/eric.thrane/O1/HardwareInjection/Details/Inspiral/H1/cbctest_1117582888_H1.out 1 1124603111

Injection successful.

All of these injections were carried out with scale factor = 1 (that's the 1.000000).
The injection file, described in a comment below, is a 1.4 on 1.4 BNS, optimal orientation, at D=45 Mpc. It is the same waveform used in previous ER tests.

It looks like the injection actually does hit the 400 count limit (plot 1). It saturates right at the end when the injection chirps up to high frequency. There's some kind of ringing as well (plot 2). From the spectrogram (plot 3) and the zoom (plot 4) this looks like a feature at just above 300 Hz. I thought it might be a notch for the PCal line, but that's 331.9 Hz. So someone will have to check the inverse actuation filter and see what's happening at that frequency. It's possible to see the overflow from the first injection in the ETMY L3 MASTER channel (plot 5). It happens at -131072 counts, and the injection is trying to push it past -200000. The blind injection caused an overflow as well, but since this channel is only recorded at 2048 Hz, it looks like it falls short of overflow (plot 6). There's a faster readback whose name escapes me at the moment. Unless the blind injection is made a factor of about 10 smaller, or rolled off at high frequency, it will be trivial to detect it by looking at the drive to the ETM.
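The point about the 2048 Hz channel can be illustrated with a toy example: a one-sample excursion past the DAC limit is easy to find in full-rate data but can vanish after downsampling. The sketch below assumes the 131072-count limit quoted above and uses naive sample-picking decimation by 8 (for example 16384 Hz to 2048 Hz); real DAQ downsampling applies an anti-aliasing filter, which also tends to shrink short peaks. Function names are hypothetical.

```python
DAC_LIMIT_CT = 131072  # overflow level seen on the ETMY L3 MASTER channel


def overflow_indices(samples, limit=DAC_LIMIT_CT):
    """Indices of samples whose magnitude reaches or exceeds the DAC limit."""
    return [i for i, x in enumerate(samples) if abs(x) >= limit]


def decimate_by_picking(samples, factor):
    """Keep every `factor`-th sample (no anti-aliasing filter)."""
    return samples[::factor]


# Toy full-rate drive: quiet except for one sample pushed past -200000 counts.
full_rate = [1000.0] * 16
full_rate[3] = -200000.0

print(overflow_indices(full_rate))                          # seen at full rate
print(overflow_indices(decimate_by_picking(full_rate, 8)))  # missed after decimation
```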

FYI, the injected waveform was fiducial waveform from ER7: https://alog.ligo-la.caltech.edu/aLOG/index.php?callRep=16125 It's a 1.4-1.4 BNS at 45 Mpc, optimal orientation.

There are a couple of things to watch out for when performing CBC hardware injections, based on iLIGO experience:

For the ER7 injection we used an SEOBNRv2 waveform that has a ringdown at the end, hoping that this turn-off would not trigger an impulse. However, for BNS masses, the turn-off and ringdown are pretty sharp. I've asked Chris to check that there are no "whooper" effects with the SEOBNRv2 waveform, but we haven't had a chance to do this yet. For a SpinTaylorT4 waveform (the other waveform CBC wants to inject), there will definitely be a step, so this needs to be checked and rolled off carefully.

One other comment on the test: what scaling in awgstream did you use? That waveform looks monstrously loud (eyeball SNR > 20). That's much louder than would be useful for a blind injection, but good for helping us find whooper effects.

Duncan, the scale factor is 1.

Just for completeness, because I didn't see it posted, here's an Omega scan of the injections in h(t). The first is the non-blind injection, the second is the blind injection. I think the glitch ten seconds after the blind injection is unrelated. I thought it might be a filter turning off or being reset, but it's not on a GPS second (it's at 1124603210.28). It does cause an overflow of the ETMY ESD DAC.

I verified that the blind injection was correctly recorded in the raw frame file.