Evan, Dan, Jeff

The SR3 M1 T2 actuator is...unwell.

We started to have trouble staying locked after power up about four hours ago. At first it looked like an ASC problem in the SRC, and since the AS36 loops are the scapegoat du jour, Evan started to retune the SRC1 loop. He observed a recurring transient in the beam spots at the AS port, and saw the same thing in the SR3 oplevs. We turned off the SR3 cage servo and the kicks kept on coming.

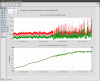

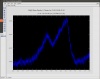

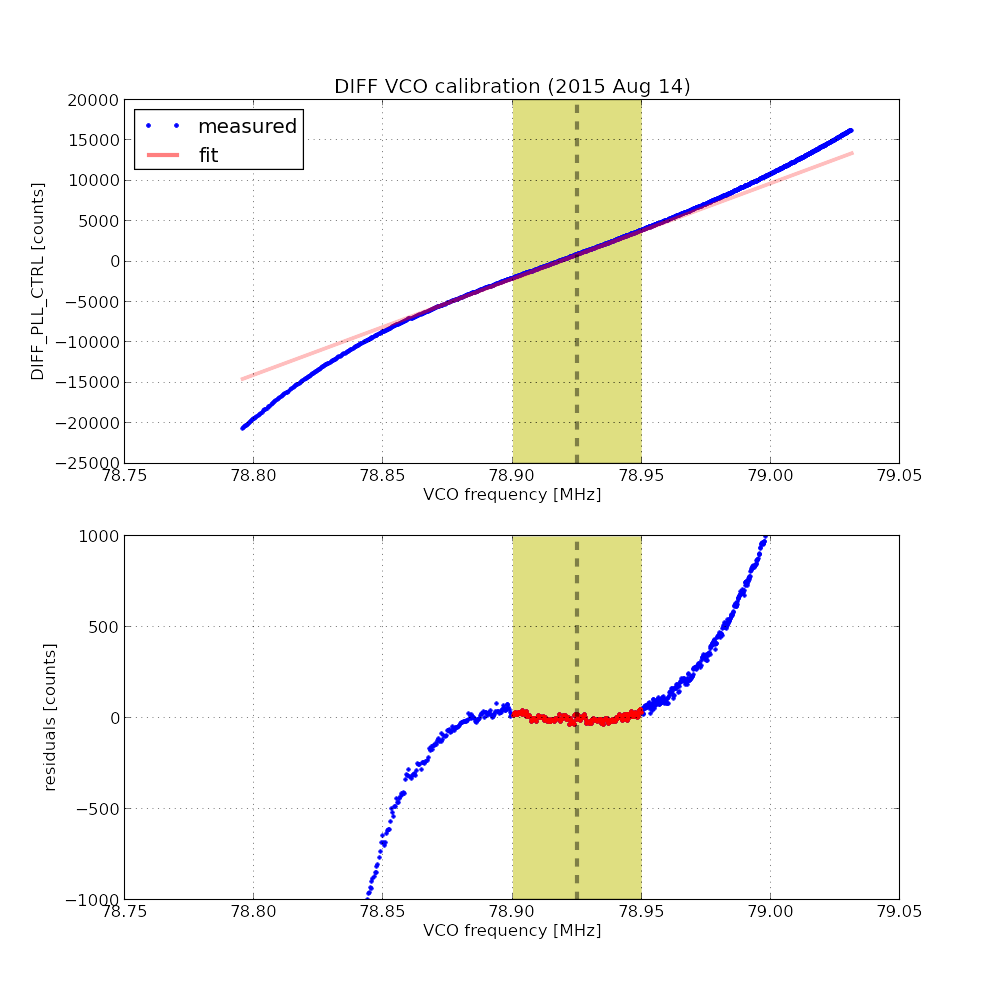

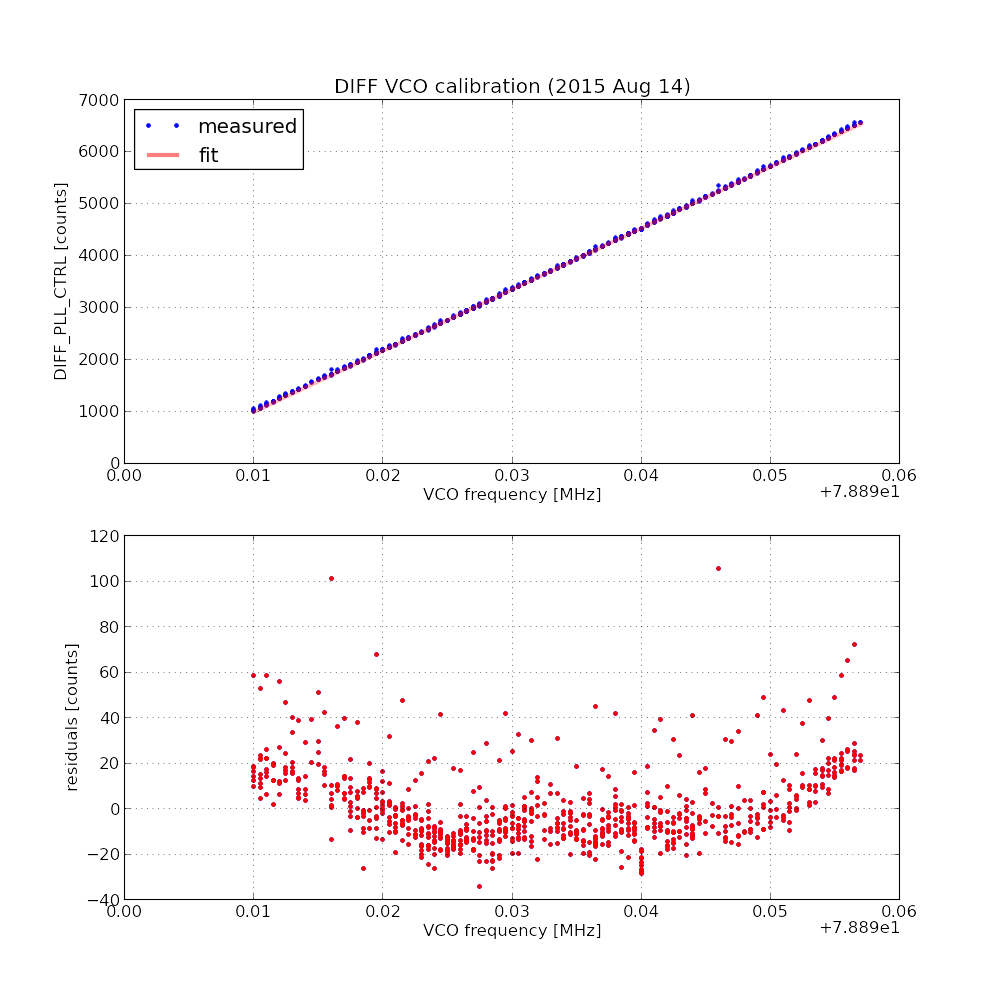

Eventually we looked at the SR3 M1 voltmons and found the first attached plot, which spans eight hours. The T2 OSEM and voltmon started to get ratty about three and a half hours ago. The noise is a series of slow transients, each with a rise of several seconds followed by a steep decay. See Fig. 2.

We're pretty convinced that it's an actuator problem, something between the DAC and the voltmon readback in the coil driver box. We have unlocked the IFO and turned off the SR3 top-stage damping and we still see the pattern of noise. We shall leave the pleasure of power-cycling the AI chassis and coil driver to others.

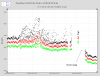

As an amuse-bouche for the maintenance day team, we also discovered that the SR3 M1 T1 voltmon is complete nonsense. The T1 voltmon time series is a collection of step functions and spikes, 100x larger than the other M1 voltmons.

The SR3 M1 damping has been turned back on.

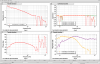

The glitches appear to come and go. They tend not to stay away for long, but they stay away long enough to slow down troubleshooting. In an effort to get things moving, I replaced the Triple Top driver S1001082 with S1001086 as a first attempt at fixing this problem. Due to a lot of activity (it is Tuesday), it was hard to see whether this fixed the problem. As Dave B.'s report notes, we also restarted the IOP to make sure a calibration occurred. We then saw what appeared to be glitches in the signals, but these turned out to be SEI trips. So again, this is not the easiest item to follow up on. Since the system settled down, I have not seen any excess noise on T2 for over an hour, but I will continue to monitor it.

Seems like we've been good for the past 2.5 hours or so.