J. Kissel

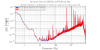

Using the DARM OLGTF model with which we've updated the CAL-CS and GDS low-latency calibrations, I've generated an inverse actuation function for the hardware injections. This is an update to the filter designed for the LHO mini-run (see LHO aLOG 18115). The updated filter has been loaded into FM2 of the H1:CAL-INJ_HARDWARE filter bank, as well as the H1:CAL-INJ_BLIND filter bank, but it is NOT YET TURNED ON. It will be turned on tomorrow during maintenance.

Recall that this filter should be assigned the same uncertainty as the DARM open loop gain transfer function (see LHO aLOG 18769): 50% in magnitude and 20 [deg] in phase, mostly because of the frequency-dependent discrepancy between the modeled and measured DARM open loop gain transfer function. Indeed, we are now quite certain that the model vs. measurement discrepancies / features seen at ~500, ~1000, and ~1500 [Hz] are inaccurately modeled violin modes of the ETMY QUAD. Further, we're confident that we can *remove* these features from future actuation functions by retuning the ETMY UIM / PUM / TST control authority, which we will do immediately following ER7. Er, well, immediately following the vent that immediately follows ER7. So we expect the uncertainty in future DARM OLGTF models, and therefore in the inverse actuation function, to be much smaller.

Details

-----------

I've used the following DARM OLGTF model,

/ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/PreER7/H1/Scripts/DARMOLGTFs/H1DARMOLGTFmodel_ER7.m

with the following parameter set

/ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/PreER7/H1/Scripts/DARMOLGTFs/H1DARMparams_1116990382.m

to produce a new Inverse Actuation Function for the HARDWARE Injection path, ultimately created by and plotted with

/ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/PreER7/H1/Scripts/DARMOLGTFs/CompareDARMOLGTFs.m

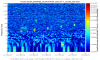

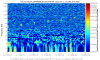

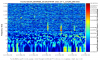

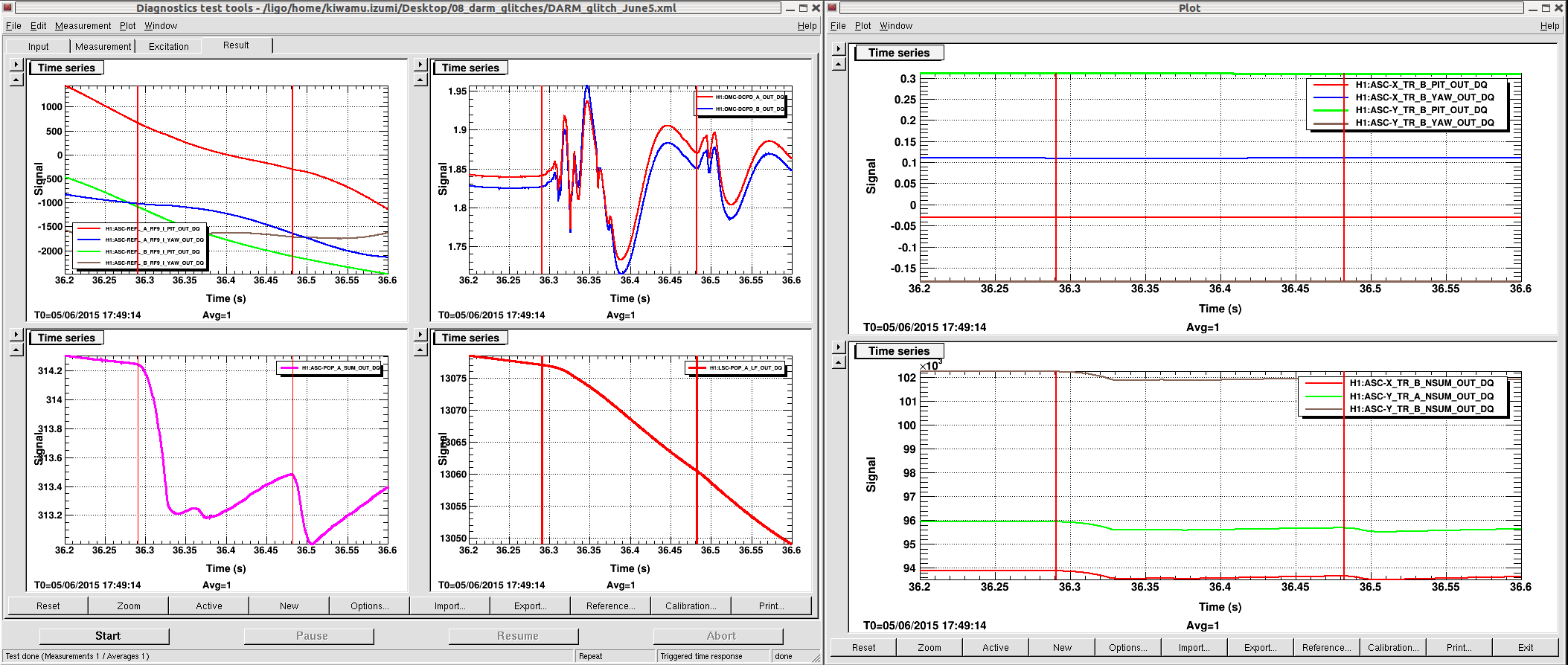

The attached figures are Bode plots of the design.

As expected, because of the updates to

(a) the overall actuation scale factor (in [m/ct]), which has been reduced, and

(b) the frequency dependence of the actuation function, to account for the new PUM / TST crossover of ETMY (which is, regrettably, very feature-rich),

the filter is significantly more complicated.

The poles and zeros are

toyModel.poles_Hz = [ pair(300,89.9) pair(300,89.9) pair(482,88.5) pair(1040,89.5) pair(3.2e3,0) pair(4e3,51) pair(6.5e3,60)];

toyModel.zeros_Hz = [0.01 0.01 pair(290,89) pair(310,88.9) pair(509,89.5) pair(1030,89.8) pair(3.2e3,70) 6.8e3];

The 300 [Hz] features are the notches for the beam splitter violin mode (so we don't excite it via DARM); the ~500 and ~1000 [Hz] features are for the actual ETMY violin modes (which now leak through the L3 to L3 transfer function because of the inadequate roll-off of the L2 to L3 transfer function); and the features at 2500, 3000, 3500, and 4000 [Hz] (which I did NOT bother to include in the inverse filter) are there to notch out further harmonics of the ETMY QUAD's violin modes. Finally, the > 4000 [Hz] poles roll off the filter before the Nyquist frequency.
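The `pair()` entries in the pole/zero lists correspond to the complex roots in the foton design string. The sketch below reproduces that mapping in Python. It assumes, inferred only by matching the numbers and not documented in this entry, that `pair(f0, angle)` denotes a complex-conjugate pair of magnitude `f0` [Hz] at `angle` [deg] from the real axis, written with foton's convention that a stable root has a positive real part. The helpers `pair`, `expand`, and `foton_root` are hypothetical stand-ins, not the CalSVN MATLAB code.

```python
import cmath
import math


def pair(f0_hz, angle_deg):
    """Hypothetical re-implementation of the toyModel pair() helper.

    Assumption: returns the conjugate pair f0 * exp(+/- i * angle), i.e. a
    root of magnitude f0 [Hz] whose angle is measured from the real axis.
    """
    root = cmath.rect(f0_hz, math.radians(angle_deg))
    return [root, root.conjugate()]


def expand(entries):
    """Flatten a mix of pair() lists and real roots into one list of roots."""
    roots = []
    for entry in entries:
        if isinstance(entry, list):
            roots.extend(entry)
        else:
            roots.append(complex(entry))
    return roots


def foton_root(r):
    """Format one root the way it appears in the foton zpk() string."""
    if abs(r.imag) < 1e-9:
        return f"{r.real:g}"
    sign = "+" if r.imag > 0 else "-"
    return f"{r.real:g}{sign}i*{abs(r.imag):g}"


poles_hz = expand([pair(300, 89.9), pair(300, 89.9), pair(482, 88.5),
                   pair(1040, 89.5), pair(3.2e3, 0), pair(4e3, 51),
                   pair(6.5e3, 60)])
zeros_hz = expand([0.01, 0.01, pair(290, 89), pair(310, 88.9),
                   pair(509, 89.5), pair(1030, 89.8), pair(3.2e3, 70), 6.8e3])

print("zeros:", ";".join(foton_root(z) for z in zeros_hz))
print("poles:", ";".join(foton_root(p) for p in poles_hz))
```

For example, `foton_root(zeros_hz[2])` gives `5.0612+i*289.956`, the first complex zero in the foton string, and the `pair(3.2e3, 0)` entry collapses to the two real poles at 3200 [Hz].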

The foton design string is

zpk([0.01;0.01;5.0612+i*289.956;5.0612-i*289.956;5.95121+i*309.943;5.95121-i*309.943;4.44181+i*508.981;4.44181-i*508.981;3.59537+i*1029.99;3.59537-i*1029.99;1094.46+i*3007.02;1094.46-i*3007.02;6800],[0.523599+i*300;0.523599-i*300;0.523599+i*300;0.523599-i*300;12.6173+i*481.835;12.6173-i*481.835;9.0756+i*1039.96;9.0756-i*1039.96;2517.28+i*3108.58;2517.28-i*3108.58;3250+i*5629.17;3250-i*5629.17;3200;3200],1,"n")gain(1.27879e+09)

where I've forced foton to use a gain of 1.14e17 [ct/m] at 100 [Hz], as shown in the plots, to avoid all the confusion about pole/zero normalization.
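Forcing a gain of 1.14e17 [ct/m] at 100 [Hz] amounts to evaluating the unity-DC-gain response at 100 [Hz] and choosing the overall gain so that the magnitude there equals the target. The Python sketch below illustrates that step. It assumes that foton's "n" plane normalizes each root to unit DC gain, with a root r [Hz] (positive real part for a stable root) contributing the factor (1 + j f / r). The function names and the toy root set are hypothetical; this is not the full H1 filter.

```python
def n_plane_response(f_hz, zeros_hz, poles_hz):
    """Unity-DC-gain response at f_hz (assumed foton "n" convention).

    Each zero r multiplies by (1 + j f / r); each pole divides by it.
    Complex roots must be supplied as conjugate pairs.
    """
    h = 1 + 0j
    for z in zeros_hz:
        h *= 1 + 1j * f_hz / z
    for p in poles_hz:
        h /= 1 + 1j * f_hz / p
    return h


def gain_for_target(target_mag, f_ref_hz, zeros_hz, poles_hz):
    """Overall gain k such that |k * H_n(f_ref)| equals target_mag."""
    return target_mag / abs(n_plane_response(f_ref_hz, zeros_hz, poles_hz))


# Toy root set (hypothetical): the two 0.01 Hz zeros and the two real
# 3200 Hz roll-off poles from the design, without the resonant features.
toy_zeros = [0.01, 0.01]
toy_poles = [3200.0, 3200.0]

k = gain_for_target(1.14e17, 100.0, toy_zeros, toy_poles)
print(f"k = {k:.6g}")
print(f"|H(100 Hz)| = {abs(k * n_plane_response(100.0, toy_zeros, toy_poles)):.6g} [ct/m]")
```

Pinning the magnitude at a stated frequency makes the filter's scale independent of how each tool normalizes its poles and zeros, which is the confusion the gain choice above is meant to avoid.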

"Incorrect" sounds too strong to me.

I would say it was incorrect only in the sense that the cavity pole frequency was uncertain, which is the case not only for the 24 W configuration but also for the 17 W configuration. Otherwise, we believe that the calibration remained valid in both GDS and CAL-CS at 24 W (though, remember, CAL-CS has not yet been fully updated to the equivalent of GDS, see alog 1880, hence the discrepancy between them). The OMC has a power-scaling functionality (alog 18470), so ideally the optical gain does not change as we change the PSL power. As for the cavity pole frequency, the Pcal lines should be able to tell us how stable it has been.

As reported in alog 18293, the optical gain seemed to have dropped by 4% in this particular lock according to the Pcal line at 540 Hz. Sudarshan is currently analyzing the Pcal trend, but it seems that the optical gain typically changes by 4-5% from one lock stretch to the next, probably due to a different OMC error gain (which is computed at every RF->OMC transition) and perhaps a different alignment somewhere. We compensated for it by increasing OMC-READOUT_ERR_GAIN by 4% at the beginning of this particular lock, and therefore we thought the calibration was good, assuming the cavity pole stayed at the same frequency, 355 Hz.

I suspect there is a lingering source of error in the gain of the OMC-DCPD --> DARM_IN1 path. This may be due to the initial gain-matching calculation between DCPD_SUM and RF-DARM, but it could also be due to a scaling error as we adjust the overall gain during the power-up step. We initially set the gain at a DARM offset of ~40 pm, but as we power up to 23 W we reduce the offset to ~15 pm. The current gain-scaling calculation that Kiwamu links to does not account for the small static DARM offset that we have observed (it's a fraction of a picometer, see here). I will post a note about this today; the overall effect should be very small, but it may account for the ~4% change that we have observed. (If this is the source of the gain error, it will be proportional to the DARM offset, and hence to the power level, since we change both at the same time.)
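The static-offset argument can be made concrete with a toy model. The sketch below assumes, and this is not established in this thread, that the DCPD optical gain scales linearly with the total DARM offset (commanded plus static), while the gain-scaling calculation uses only the ratio of the commanded offsets. The function name and the static-offset values are illustrative.

```python
def relative_gain_error(offset_low_pm, offset_high_pm, static_offset_pm):
    """Fractional gain error when a rescaling ignores a static DARM offset.

    Toy model (assumption): optical gain is proportional to the total offset
    (commanded + static), but the rescaling uses only the commanded ratio.
    """
    assumed_ratio = offset_low_pm / offset_high_pm
    true_ratio = (offset_low_pm + static_offset_pm) / (offset_high_pm + static_offset_pm)
    return true_ratio / assumed_ratio - 1.0


# Commanded offsets from the comment: ~40 pm before power-up, ~15 pm after.
for static_pm in (0.0, 0.25, 0.5, 1.0):
    err = relative_gain_error(15.0, 40.0, static_pm)
    print(f"static offset {static_pm:4.2f} pm -> gain error {100 * err:+.2f} %")
```

Under these assumptions, a hypothetical 0.5 pm static offset gives `relative_gain_error(15.0, 40.0, 0.5)` of about 0.021, i.e. roughly a 2% error, the same order as the ~4% change reported above.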