Sheila, Kiwamu, Koji, Evan

Summary

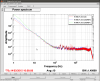

Today the goal was to get back to 34+ Mpc and put as many steps as possible into the Guardian.

Mostly we spent the day trying to get the ASC working again.

Details

ASC

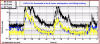

We spent a while puzzling over why the ASB36I→SRM ASC loops were not working in DRMI or in full lock.

Recall that in the new HAM6 centering scheme, ASB is not under active centering control. Over the past week, the beam on ASB has managed to stay near zero, but today it started to wander in the course of locking activities. So it is unsurprising that the ASB36I error signals were junk. (Miraculously, the ASB36Q→BS loops continued to work fine.)
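For reference, here is a minimal sketch of what "beam near zero" means for a quadrant sensor such as ASB: the normalized pitch and yaw centering signals are differences of quadrant powers divided by the total. The quadrant names (`ul`, `ur`, `ll`, `lr`), the sign conventions, and the tolerance are assumptions made for illustration; they are not taken from the actual front-end model.

```python
def qpd_centering(ul, ur, ll, lr):
    """Return normalized (pitch, yaw) beam position from four quadrant powers.

    Quadrant naming and sign convention are assumed for illustration:
    positive pitch = more light on the upper half,
    positive yaw   = more light on the left half.
    """
    total = ul + ur + ll + lr
    if total <= 0:
        raise ValueError("no light on the detector")
    pitch = ((ul + ur) - (ll + lr)) / total
    yaw = ((ul + ll) - (ur + lr)) / total
    return pitch, yaw


def is_centered(pitch, yaw, tol=0.1):
    """True if both normalized signals are within +/- tol (tol is a hypothetical threshold)."""
    return abs(pitch) <= tol and abs(yaw) <= tol
```

A beam that wanders off center moves these signals away from zero, and the demodulated 36 MHz signals derived from the same quadrants then become unreliable as alignment error signals.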

With DRMI locked, we tried moving the ASB picomotor to center the beam. This worked temporarily, but the beam would be miscentered after each relock, or after SRM was moved. Then we tried using ASA36I instead, since ASA is still actively centered. This worked for pitch, but the yaw error signal was not good.

Centering

In the end we reverted to the old HAM6 centering scheme, in which OM1 and OM2 are used to center onto ASA and ASB, and OM3 and OMC SUS are used to align onto the OMC QPDs (specifically, we took settings from 2015-03-14 08:00:00 UTC). After that, the ASC worked fine. This isn't a long-term solution, but it is expedient, and it suggests that the problem is a lack of centering on ASB.
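The old scheme's actuator-to-sensor assignment can be written down as a small lookup, shown here only as a sketch. The dictionary layout, the loop names, and the helper function are hypothetical; only the actuator and sensor names come from the description above.

```python
# Old HAM6 centering scheme, as described in this entry.
# The structure and loop names are illustrative, not the real configuration format.
OLD_SCHEME = {
    "AS WFS centering": {"actuators": ["OM1", "OM2"], "sensors": ["ASA", "ASB"]},
    "OMC alignment": {"actuators": ["OM3", "OMC SUS"], "sensors": ["OMC QPDs"]},
}


def actively_centered(scheme):
    """Return the set of sensors that some loop in the scheme is centering onto."""
    sensors = set()
    for loop in scheme.values():
        sensors.update(loop["sensors"])
    return sensors


# In the old scheme ASB is under active centering control.
print("ASB" in actively_centered(OLD_SCHEME))
```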

This "old" HAM6 centering scheme is more or less captured in Ham6CenteringStatusQuo.snap (attached in the zip file). The "new" HAM6 centering scheme is in Ham6CenteringNouveau.snap. There are also screenshots of both configurations.

Other

BS coil driver switching is now successfully implemented in the Guardian.

We made use of the remote EY ESD driver switching a few times. It is very convenient.