Overall: The main change we are seeing in the LSC and ASC signals with the PR3 and SR3 estimators ON is the lowering of the 1 Hz resonance in ASC, which is known to be a bit of a problem peak.

We've been wanting quiet times within a single lock with the SR3 and PR3 estimators in various ON/OFF configurations, so that we can get a better look at possible differences in the ISC signals: the difference we are looking for, if any, is very small, and the frequency response changes from lock to lock. We were finally able to get some time on November 24th. I made sure to take two sets of ALL ON times, one at the beginning and one at the end, and similarly two sets of ALL OFF times, one at the beginning and one at the end, to get a better idea of which changes between the two are really due to the estimator configuration.

Times:

SR3, PR3 Est ALL ON (one set before & one set after other sets)

start: 1448060052

end: 1448060452

start: 1448063252

end: 1448063616

SR3, PR3 Est ALL OFF (one set before & one set after other sets)

start: 1448060469

end: 1448060977

start: 1448063631

end: 1448063846

SR3, PR3 Est JUST L

start: 1448060995

end: 1448061621

SR3, PR3 Est JUST P

start: 1448061643

end: 1448062049

SR3, PR3 Est JUST Y

start: 1448062067

end: 1448062407

SR3, PR3 Est LP

start: 1448062846

end: 1448063239

SR3, PR3 Est PY

start: 1448062423

end: 1448062828
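
For re-use, here is a small Python sketch that keeps the segments above in one place, computes their durations and checks their time order. The GPS times are copied from the list; the labels are just names chosen here, and fetching the actual data (for example with gwpy or NDS) is deliberately left out.

```python
# GPS start/end times of each SR3/PR3 estimator configuration (November 24th),
# copied from the list above. Keys are illustrative labels only.
SEGMENTS = {
    "ALL ON (1)": (1448060052, 1448060452),
    "ALL OFF (1)": (1448060469, 1448060977),
    "JUST L": (1448060995, 1448061621),
    "JUST P": (1448061643, 1448062049),
    "JUST Y": (1448062067, 1448062407),
    "PY": (1448062423, 1448062828),
    "LP": (1448062846, 1448063239),
    "ALL ON (2)": (1448063252, 1448063616),
    "ALL OFF (2)": (1448063631, 1448063846),
}


def durations(segments):
    """Return {label: duration in seconds} for each (start, end) pair."""
    return {label: end - start for label, (start, end) in segments.items()}


def chronological(segments):
    """Return the labels sorted by segment start time."""
    return sorted(segments, key=lambda label: segments[label][0])


def overlaps(segments):
    """Return pairs of time-consecutive segments that overlap."""
    order = chronological(segments)
    return [(a, b) for a, b in zip(order, order[1:])
            if segments[a][1] > segments[b][0]]


if __name__ == "__main__":
    for label in chronological(SEGMENTS):
        start, end = SEGMENTS[label]
        print(f"{label:12s} {start} - {end}  ({end - start} s)")
    print("overlapping segments:", overlaps(SEGMENTS) or "none")
```

Sorted by start time, the PY set was taken before the LP set, no two segments overlap, and the shortest one is the second ALL OFF set at 215 s.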

I used my script /ligo/svncommon/SusSVN/sus/trunk/Common/MatlabTools/estimator_ISC/estimator_isc_comparison.m to plot ASDs of the LSC (CTRL) and ASC (ERR) channels, as well as the LSC CAL channels and, where an ASC channel is made up of more than one diode (e.g. INP1 uses REFL A 45I and REFL B 45I), the individual ASC diode channels. I quickly realized that the differences between the single-dof (JUST L, JUST P, JUST Y) or two-dof (LP, PY) configurations and the OFF times are too small to say with any confidence whether there is a difference, so we're just going to compare all estimator dofs ON vs all OFF. The result plots can be found in /ligo/svncommon/SusSVN/sus/trunk/Common/MatlabTools/estimator_ISC/H1/Results/ as revision r12809. On the plots, Light Green 'ON' is adjacent in time to Dark Green 'OFF', and Light Blue 'ON' is adjacent in time to Dark Blue 'OFF', so comparing Light vs Dark Green and Light vs Dark Blue is the most useful way to confirm drops in noise when the estimators are turned on.
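
The Light vs Dark comparison can be made quantitative with ratios of ASDs. This is an independent minimal sketch, not a description of estimator_isc_comparison.m: it assumes the ON and OFF ASDs of one channel are already available on a common frequency vector, and it only calls a drop real if every time-adjacent ON/OFF pair agrees. The function names and thresholds are hypothetical.

```python
import numpy as np


def _band(freqs, f_lo, f_hi):
    """Boolean mask of frequency bins inside [f_lo, f_hi]."""
    freqs = np.asarray(freqs, dtype=float)
    mask = (freqs >= f_lo) & (freqs <= f_hi)
    if not mask.any():
        raise ValueError("no frequency bins between f_lo and f_hi")
    return mask


def band_ratio(freqs, asd_off, asd_on, f_lo, f_hi):
    """Median of ASD_OFF / ASD_ON over [f_lo, f_hi].

    A value above 1 means the noise is lower with the estimators ON."""
    mask = _band(freqs, f_lo, f_hi)
    ratio = np.asarray(asd_off, dtype=float)[mask] / np.asarray(asd_on, dtype=float)[mask]
    return float(np.median(ratio))


def peak_ratio(freqs, asd_off, asd_on, f_lo, f_hi):
    """Ratio of peak heights (OFF / ON) of a resonance inside [f_lo, f_hi]."""
    mask = _band(freqs, f_lo, f_hi)
    return float(np.max(np.asarray(asd_off, dtype=float)[mask])
                 / np.max(np.asarray(asd_on, dtype=float)[mask]))


def consistent_drop(freqs, pairs, f_lo, f_hi, min_ratio=1.0):
    """True only if every (asd_on, asd_off) pair taken adjacent in time
    (e.g. Light vs Dark Green, Light vs Dark Blue) shows a band ratio
    OFF/ON above min_ratio."""
    return all(band_ratio(freqs, off, on, f_lo, f_hi) > min_ratio
               for on, off in pairs)
```

For example, a 1 Hz reduction like the one reported for the AS diode channels would show up as `peak_ratio(freqs, asd_off, asd_on, 0.8, 1.2)` between about 1.5 and 2 for both pairs (the 0.8-1.2 Hz window is an arbitrary choice here).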

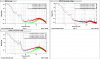

LSC

LSC, LSC-CAL (Length, Length Zoomed in)

- Don't show much improvement

- Maybe MICH at 1 Hz has improved a bit with the estimators ON

- PRCL shows a slight decrease in noise between 3.5-6 Hz with the estimators ON

ASC

PITCH

REFL Diodes ASC - INP1, CHARD Pitch (would be affected by PR3):

- Don't show much improvement

- INP1 P shows slightly lower noise between 2.5-3.5 Hz

  - Similar to what was seen in INP1 P OUT in August: 86640

- Very small decrease in the 1 Hz peak in CHARD

  - ASC-REFL_A_RF45_I_PIT sees a small decrease at 1 Hz, while ASC-REFL_B_RF45_I_PIT does not see any change

AS Diodes ASC - MICH, SRC1, SRC2, DHARD Pitch (would be affected by SR3):

- The 1 Hz resonance is reduced by a factor of 1.5-2 (except in SRC1)

POP Diodes ASC - PRC1, PRC2 Pitch (would be affected by PR3):

- PRC1 P sees a slight improvement in the 1 Hz resonance

YAW

REFL Diodes ASC - INP1, CHARD Yaw (would be affected by PR3):

- Don't show much improvement

- CHARD Y sees a slight decrease between 4-6 Hz with the estimators ON

AS Diodes ASC - MICH, SRC1, SRC2, DHARD Yaw (would be affected by SR3):

- Don't show much improvement

POP Diodes ASC - PRC1, PRC2 Yaw (would be affected by PR3):

- PRC2 Y sees a slight decrease between 4-6 Hz with the estimators ON

  - Similar to what was seen in PRC2 Y OUT in August: 86640

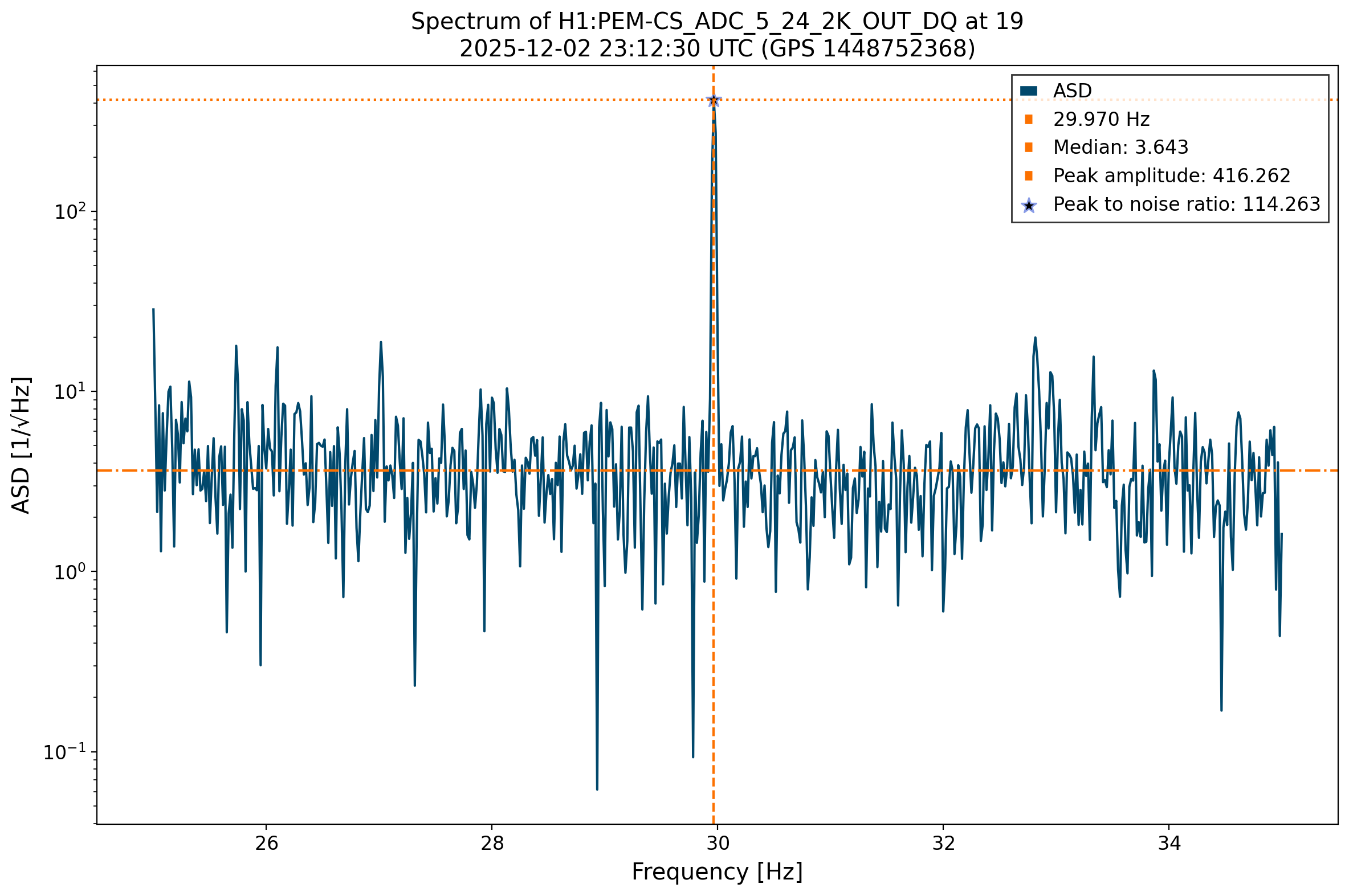

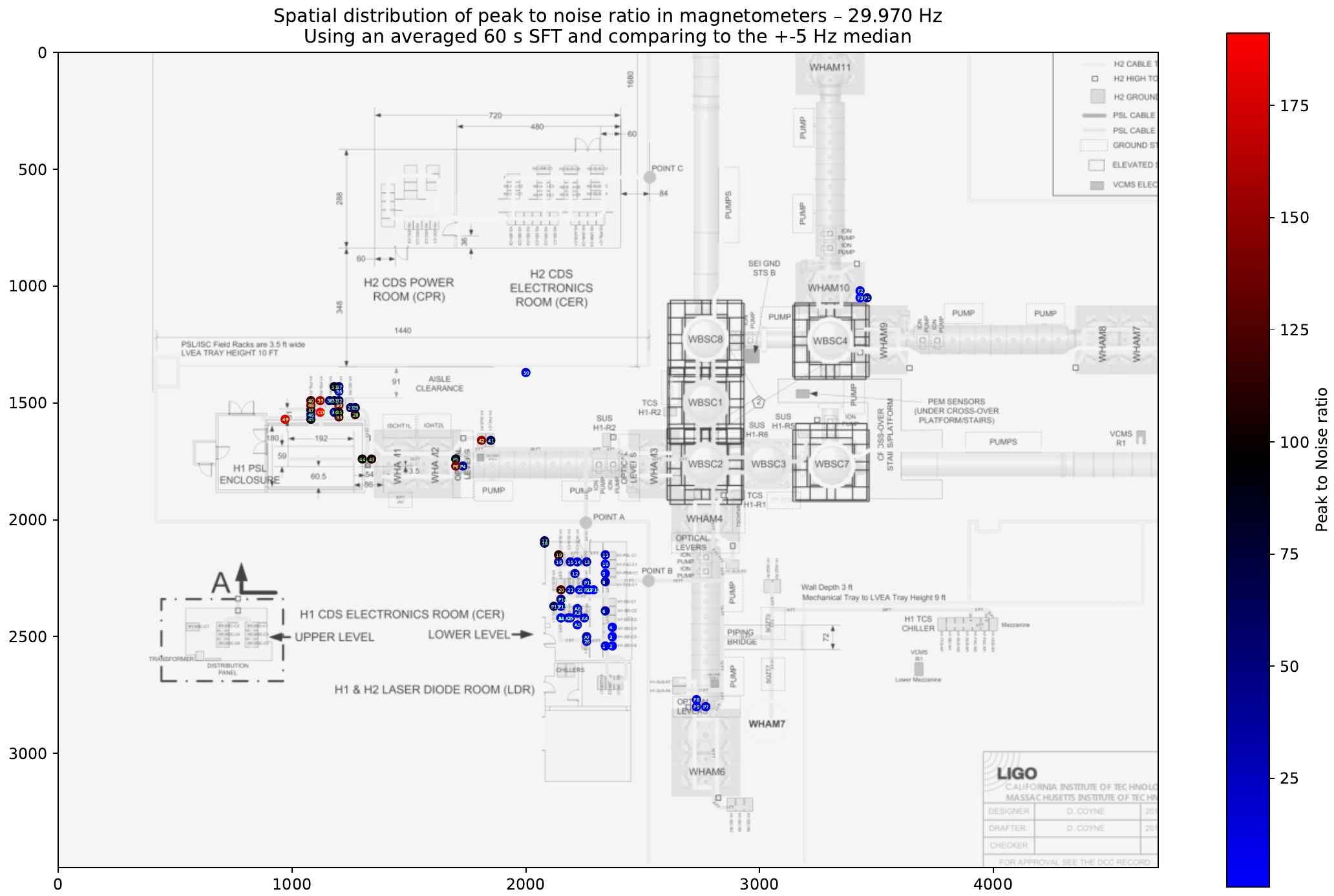

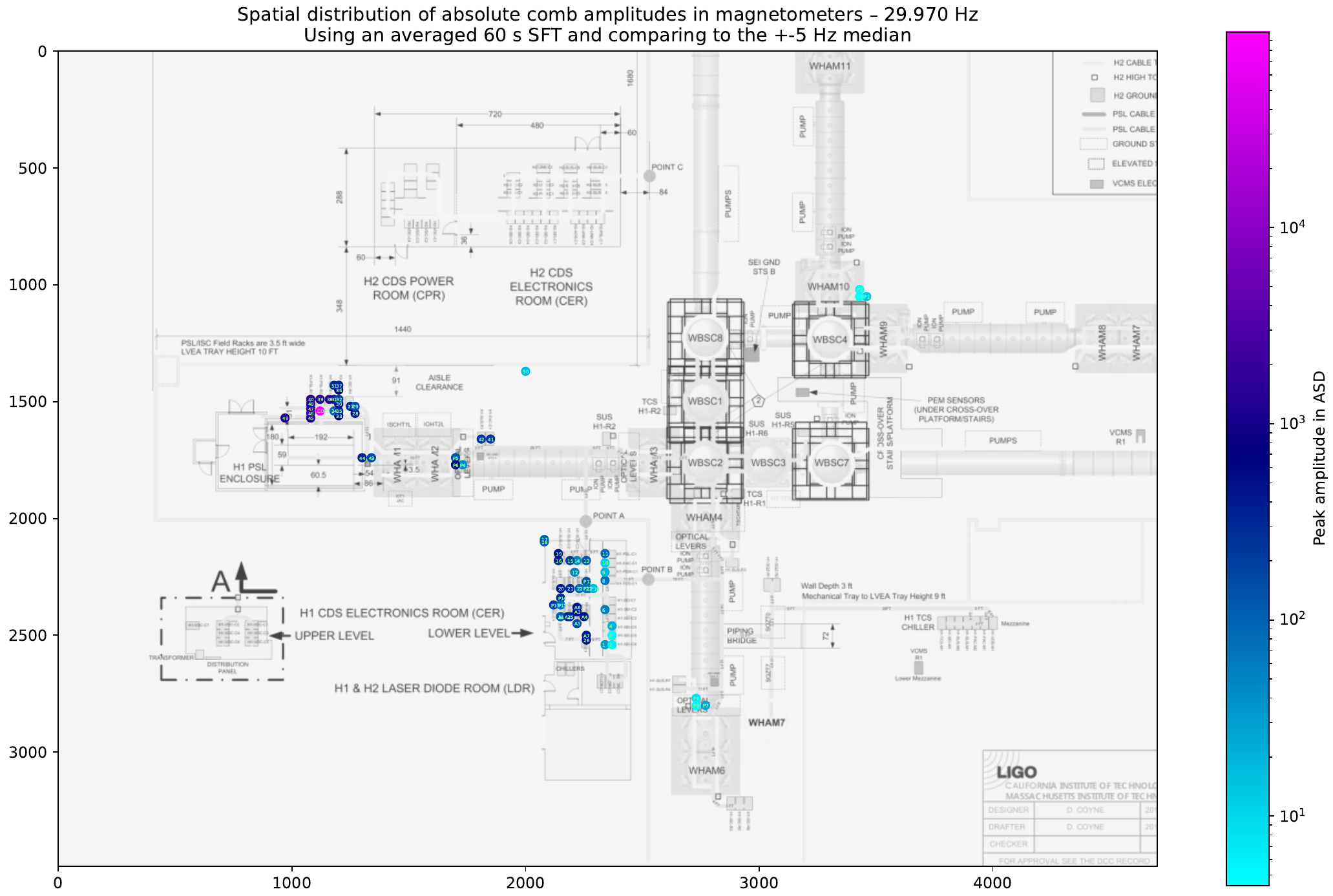

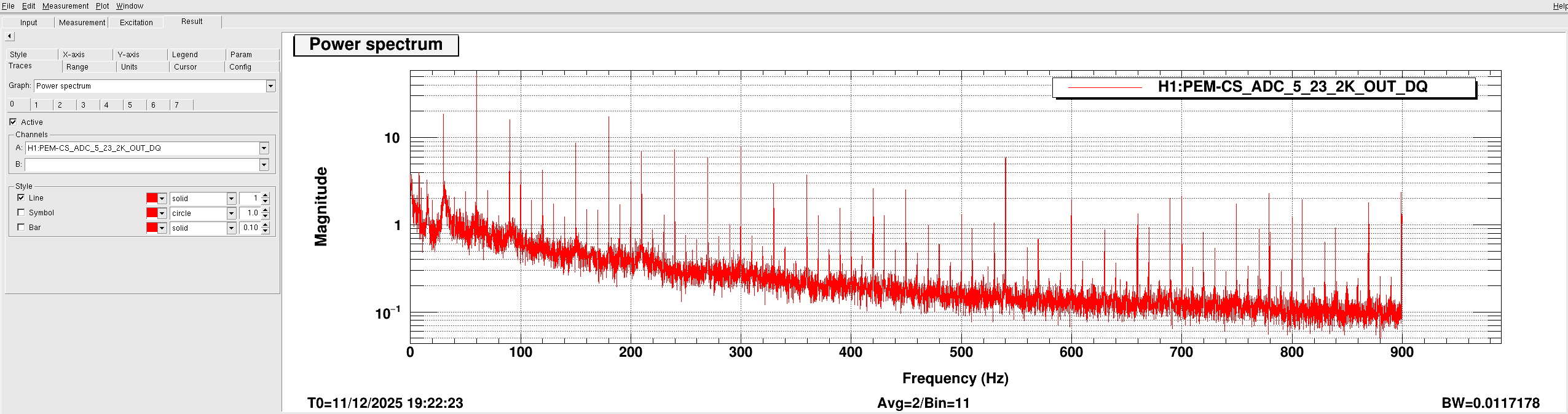

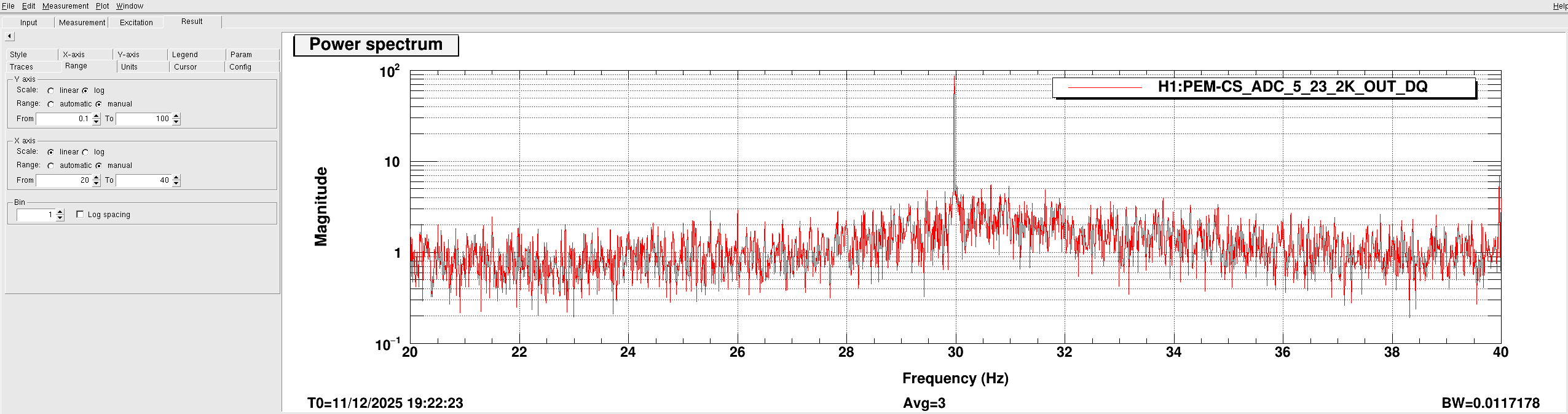

That is, we compute the ASD using 60 s FFTs and check its amplitude at the frequency of the first harmonic (the fundamental, at 29.9695 Hz) of the largest of the near-30 Hz combs. Then we compute the median of the ASD over the surrounding ±5 Hz, and save the ASD value at 29.9695 Hz as the "peak amplitude" and the ratio of the peak to the median as a sort of "SNR" or "peak to noise ratio".
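
The peak-to-noise calculation can be sketched in a few lines of NumPy. This is an illustrative re-implementation under assumptions, not the code actually used: Welch averaging with a Hann window and 50% overlap (the entry only says 60 s FFTs), the ASD bin nearest 29.9695 Hz taken as the peak, and the median over ±5 Hz as the noise estimate. Function names are hypothetical.

```python
import numpy as np


def asd_welch(x, fs, fftlength=60.0):
    """One-sided ASD by Welch averaging: Hann window, 50% overlap,
    mean removed per segment. Returns (freqs, asd), asd in units of x/sqrt(Hz)."""
    x = np.asarray(x, dtype=float)
    nperseg = int(round(fftlength * fs))
    if len(x) < nperseg:
        raise ValueError("need at least one full FFT length of data")
    window = np.hanning(nperseg)
    scale = 1.0 / (fs * np.sum(window ** 2))
    spectra = []
    for start in range(0, len(x) - nperseg + 1, nperseg // 2):
        seg = x[start:start + nperseg]
        spectra.append(np.abs(np.fft.rfft(window * (seg - seg.mean()))) ** 2)
    psd = np.mean(spectra, axis=0) * scale
    # Fold negative frequencies into the one-sided PSD; DC (and the
    # Nyquist bin, when nperseg is even) appear only once and are not doubled.
    if nperseg % 2 == 0:
        psd[1:-1] *= 2.0
    else:
        psd[1:] *= 2.0
    freqs = np.fft.rfftfreq(nperseg, d=1.0 / fs)
    return freqs, np.sqrt(psd)


def peak_to_noise(freqs, asd, f_peak=29.9695, half_width=5.0):
    """Return (peak amplitude, peak / median ASD within +-half_width Hz).

    The peak is the ASD at the bin nearest f_peak."""
    freqs = np.asarray(freqs, dtype=float)
    asd = np.asarray(asd, dtype=float)
    idx = int(np.argmin(np.abs(freqs - f_peak)))
    band = np.abs(freqs - f_peak) <= half_width
    peak = float(asd[idx])
    return peak, peak / float(np.median(asd[band]))
```

With 60 s FFTs the bin spacing is 1/60 Hz, so 29.9695 Hz falls between bins and the nearest one (about 29.967 Hz) is used; the Hann window keeps the resulting scalloping loss small.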

Note that we also checked the permanent magnetometer channels. However, to compare them with the rest, we multiplied the ASD of the magnetometers that Robert gave us by a hundred so that all of them were in units of tesla.

After saving the data for all the positions, we have produced the following two plots. The first one shows the peak to noise ratio of all positions we have checked around the LVEA and the electronics room:

Where the X and Y axes are simply the image pixels, and the color scale indicates the peak to noise ratio of the magnetometer at each position. The background LVEA has been taken from

Note that in this figure, the color scale is logarithmic. Looking at the peak amplitudes, one particular position in the H1-PSL-R2 rack has an amplitude around 2 orders of magnitude larger than the other positions. This position also had the largest peak to noise ratio.
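
Maps like these (image pixels as axes, a logarithmic color scale) could be reproduced with matplotlib along the following lines. This is a sketch only: the function name, marker styling and colormap are assumptions, and the positions and values passed in would come from the saved per-position data.

```python
import matplotlib
matplotlib.use("Agg")  # render off-screen; no display needed
import matplotlib.pyplot as plt
import numpy as np
from matplotlib.colors import LogNorm


def plot_position_map(background, x_px, y_px, values, label):
    """Scatter magnetometer positions (in image pixels) over a map image,
    coloring each point by `values` on a logarithmic color scale."""
    fig, ax = plt.subplots(figsize=(8, 6))
    ax.imshow(background)  # pixel coordinates, origin at the top-left
    sc = ax.scatter(x_px, y_px, c=values, norm=LogNorm(), cmap="viridis",
                    edgecolors="k", s=40)
    fig.colorbar(sc, ax=ax, label=label)
    ax.set_xlabel("x [pixels]")
    ax.set_ylabel("y [pixels]")
    return fig, sc
```

`background` would typically be loaded with `plt.imread(...)` from the LVEA map image. The `LogNorm` is what makes the color scale logarithmic, which matters here because one position sits orders of magnitude above the rest and would otherwise wash out the others.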

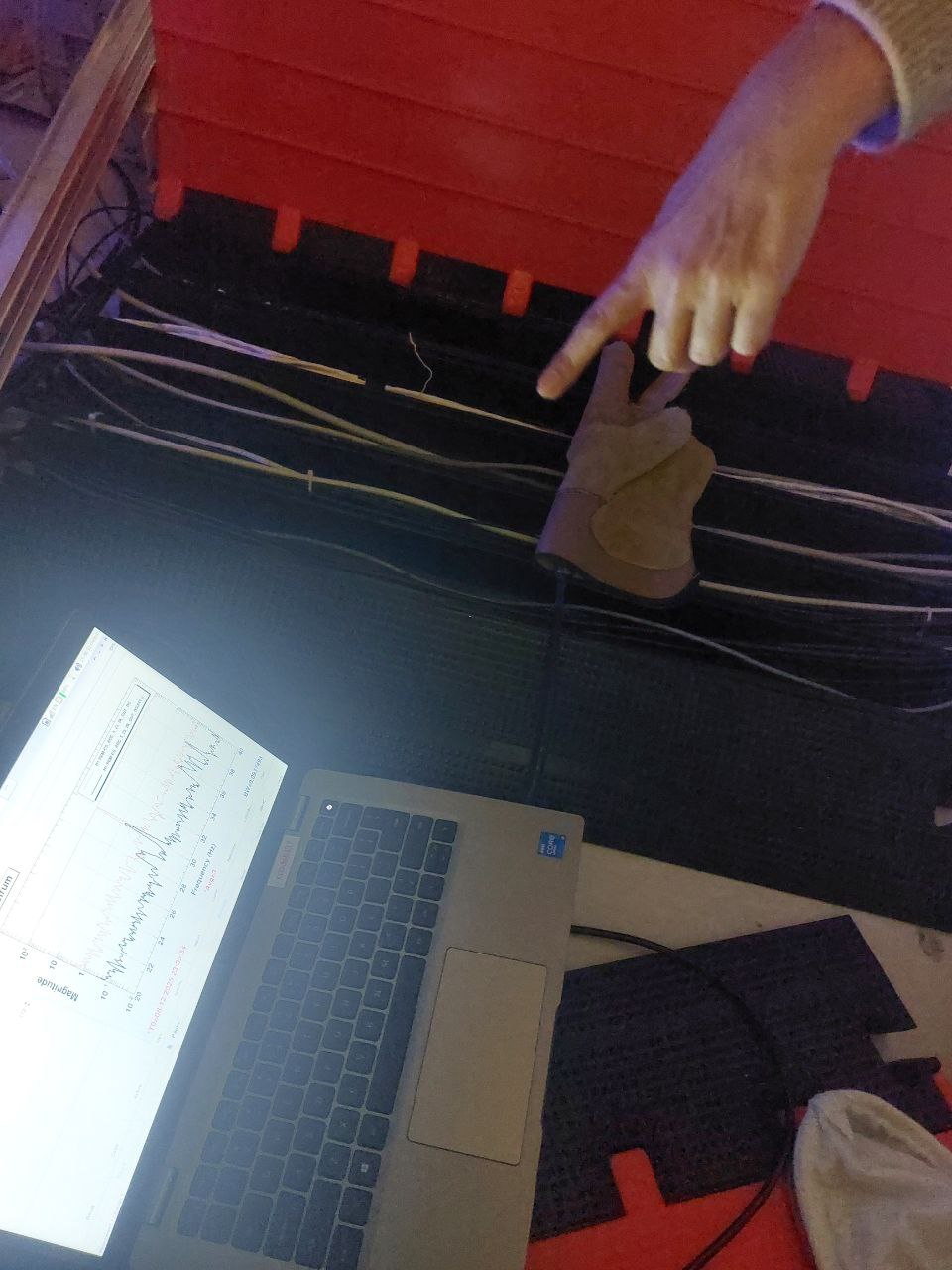

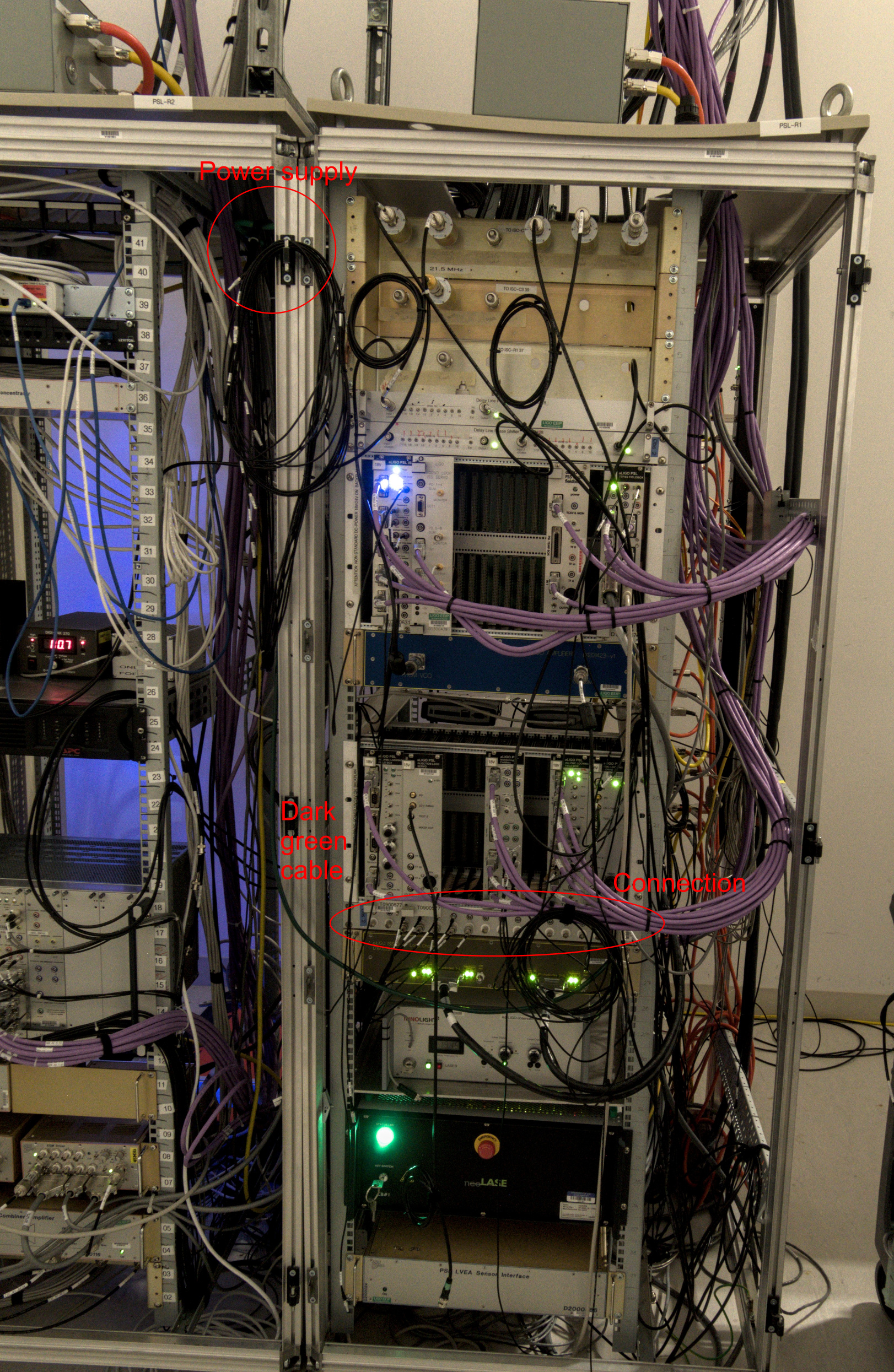

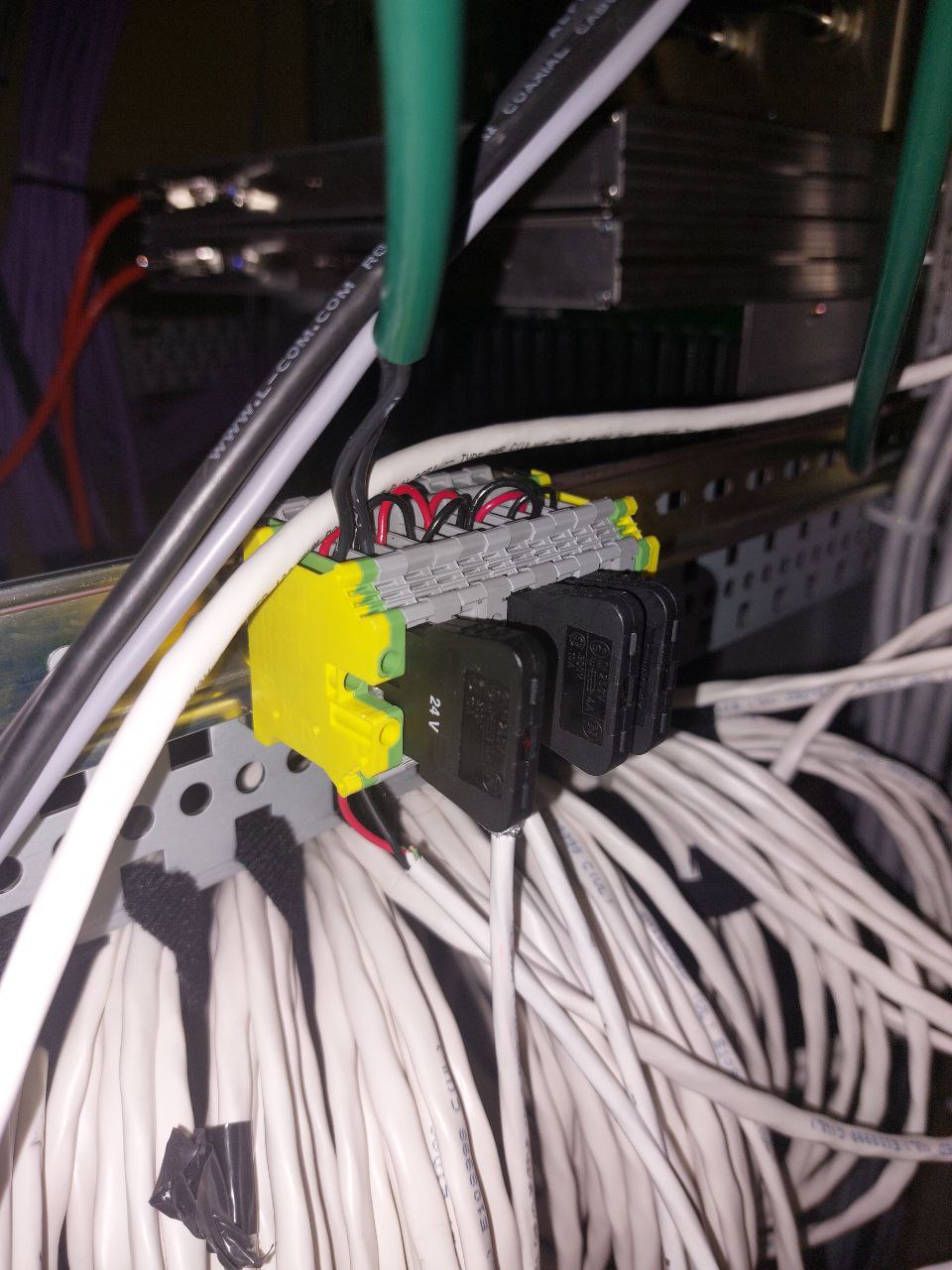

This position, which we tagged "Coil", places the magnetometer inside a coil of white cables behind the H1-PSL-R2 rack, as shown in this image:

We put the magnetometer there because we had also found the peak amplitude to be around 1 order of magnitude larger than at any other position when the magnetometer sat on top of one set of white cables. These cables run from inside the room towards the rack and then up to somewhere we are not yet sure of:

This image shows the magnetometer on top of the cables on the ground behind the H1-PSL-R2 rack; the peak appears highest on the white cables at the top of the image. The peak may be louder in the coil because the many turns of cable in a coil add up to a stronger magnetic field.
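
A rough textbook estimate is consistent with this idea, assuming the cables carry a net (not cancelled) current $I$ and the coil can be treated as $N$ circular turns of radius $R$: a single straight cable gives a field falling off with distance $r$, while at the centre of the coil the contributions of the $N$ turns add up.

$$
B_{\text{wire}}(r) = \frac{\mu_0 I}{2\pi r},
\qquad
B_{\text{coil}} = \frac{\mu_0 N I}{2R},
\qquad
\frac{B_{\text{coil}}}{B_{\text{wire}}(r)} = \frac{\pi N r}{R}.
$$

So for a magnetometer about one coil radius away from a single cable ($r \approx R$), the coil would read roughly $\pi N$ times more, all else being equal. If the cables carry equal and opposite supply and return currents, both fields largely cancel and this estimate overstates the effect.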

This is the current status of the hunt. These white cables might indicate that the source of these combs is the interlock system, which is different in L1 and H1 and has a chassis in the H1-PSL-R2 rack. However, we still need to trace exactly where these white cables go and try turning things on and off based on what we find, to see whether the combs disappear.

So these lines may be transmitted elsewhere through this power supply.

We connected a voltage divider to the same channel we were using for the magnetometer (H1:PEM-CS_ADC_5_23_2K_OUT_DQ):
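
A voltage divider scales the supply voltage down by R2/(R1 + R2) before it reaches the ADC, so the measured ASD has to be scaled back up to read the comb in supply-side volts. The resistor values used below are hypothetical (the entry does not give them), and the ADC counts-to-volts calibration is left out because it is not stated here. Since the scaling is linear, the peak to noise ratio is unchanged; only the peak amplitude needs correcting.

```python
def divider_gain(r_top, r_bottom):
    """Fraction of the input voltage that appears across r_bottom."""
    return r_bottom / (r_top + r_bottom)


def to_supply_volts(asd_measured, r_top, r_bottom):
    """Scale an ASD measured after the divider back to the supply side.

    Works element-wise on NumPy arrays as well as on plain floats."""
    return asd_measured / divider_gain(r_top, r_bottom)


# Hypothetical example: a 9 kOhm / 1 kOhm divider passes 1/10 of the voltage.
print(to_supply_volts(2.4, 9000.0, 1000.0))
```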

Two dark green cables come out of this power supply; the first one goes to the H1-PSL-R1 rack:

However, the comb did not appear as strong when we put the magnetometer beside the chassis that this cable leads to.

On the other hand, the comb does appear strong if we follow the other dark green cable, which goes to this object:

Jason told us this object may be related to the interlock system.

If we follow the white cables coming out of this object, they appear to go into the coil, where we saw that the comb was very strong.

We think it would be worth seeing what here can be turned off, and whether the comb then disappears.
