The C00 vs. C01 comparison pages have been updated and include all C01 data between 2016-11-30 0:00 and 2017-06-20 0:00.

As part of this analysis, I have also updated the PCAL-to-DARM ratio trends at 36.7, 331.9 and 1083.7 Hz; plots of both the magnitude and phase residual timeseries trends are attached. As a reminder, these trends are computed by demodulating 300-second segments at each PCAL line frequency, then averaging to get a magnitude and phase from each channel. In practice, I break each 300-second segment into overlapping 100-second chunks, apply a Kaiser window, and average over chunks to get a less noisy measurement. (It's basically Welch's method, except that I'm demodulating rather than computing a PSD.) Finally, I remove outliers caused by locklosses and loud transient glitches.
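
The demodulation scheme above can be sketched as follows. This is a minimal illustration, not the actual analysis code: the 50% chunk overlap, the Kaiser `beta`, the coherent (complex) averaging of chunk phasors, and the helper names `demod_line` and `line_ratio` are all assumptions, and the test signal uses a hypothetical sample rate and made-up amplitudes.

```python
import numpy as np
from scipy.signal.windows import kaiser


def demod_line(x, fs, f_line, chunk_s=100.0, overlap=0.5, beta=8.0):
    """Complex amplitude of a sinusoidal line at f_line in one segment x.

    Hypothetical helper: the segment is split into overlapping chunks, each
    chunk is Kaiser-windowed and demodulated against exp(-2*pi*i*f_line*t),
    and the chunk phasors are averaged (Welch-style, but demodulating
    instead of computing a PSD). overlap and beta are assumed values.
    """
    n_chunk = int(round(chunk_s * fs))
    step = int(round(n_chunk * (1.0 - overlap)))
    win = kaiser(n_chunk, beta)
    # Time is measured from the start of the whole segment, so every chunk
    # shares one phase reference and the phasors can be averaged coherently.
    t = np.arange(len(x)) / fs
    lo = np.exp(-2j * np.pi * f_line * t)  # local oscillator at the line
    phasors = []
    for start in range(0, len(x) - n_chunk + 1, step):
        seg = slice(start, start + n_chunk)
        # Factor 2 recovers A*exp(i*phi) for a real sinusoid A*cos(wt + phi).
        phasors.append(2.0 * np.sum(win * x[seg] * lo[seg]) / np.sum(win))
    return np.mean(phasors)


def line_ratio(num, den, fs, f_line):
    """Magnitude and phase (deg) of num/den at f_line, e.g. PCAL over strain."""
    r = demod_line(num, fs, f_line) / demod_line(den, fs, f_line)
    return np.abs(r), np.degrees(np.angle(r))


# Synthetic 300 s segment (hypothetical sample rate and amplitudes).
fs, f_line = 256.0, 36.7
t = np.arange(int(300 * fs)) / fs
rng = np.random.default_rng(0)
pcal = np.cos(2 * np.pi * f_line * t)
strain = 0.97 * np.cos(2 * np.pi * f_line * t - 0.05) + 0.01 * rng.standard_normal(t.size)
mag, phase_deg = line_ratio(pcal, strain, fs, f_line)
```

With these made-up inputs the ratio should come out close to 1/0.97 in magnitude and +0.05 rad in phase. Averaging complex phasors rather than separate magnitudes and phases is one design choice; it only works because all chunks share the same time origin.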

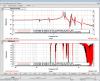

In each plot below, I show PCAL/strain trends for C00 (blue) and C01 (red) data. The aquamarine trend is C01 data corrected for the coupled-cavity pole frequency, f_cc, at the appropriate PCAL line frequency. Gray shaded regions mark the two breaks in O2: one during the holiday season and one for commissioning work. This analysis is identical to one done on Livingston data; see L1 aLog 35102.
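
To show why an f_cc correction matters most at the higher PCAL lines, here is a sketch that assumes a single-pole optical response C(f) ∝ 1/(1 + i f/f_cc). It ignores actuation and the full response function, and the two pole frequencies are hypothetical values chosen only for illustration, not H1 measurements. `fcc_factor` is a hypothetical helper name.

```python
import numpy as np


def fcc_factor(f, fcc_ref, fcc_now):
    """Factor by which a single-pole optical response changes when the
    coupled-cavity pole moves from fcc_ref to fcc_now (assumed model):
        C_now / C_ref = (1 + i f/fcc_ref) / (1 + i f/fcc_now)
    """
    f = np.asarray(f, dtype=float)
    return (1 + 1j * f / fcc_ref) / (1 + 1j * f / fcc_now)


pcal_lines = np.array([36.7, 331.9, 1083.7])  # Hz, PCAL lines from this entry
# Hypothetical pole frequencies, for illustration only.
factor = fcc_factor(pcal_lines, fcc_ref=360.0, fcc_now=350.0)
for f, k in zip(pcal_lines, factor):
    print(f"{f:7.1f} Hz: |factor| = {abs(k):.4f}, phase = {np.degrees(np.angle(k)):+.2f} deg")
```

Under these assumptions a ~3% shift in f_cc barely changes the response at 36.7 Hz but changes it by a couple of percent at 1083.7 Hz.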

You can see that in the bucket (331.9 Hz) we continue to see no systematic error, and only very small (~1%) statistical fluctuations once we correct for f_cc. At high frequency (1083.7 Hz) there's a slight systematic offset in magnitude and phase, but it's at the level of 2% or so, which is consistent with Craig's error budget. At low frequency (36.7 Hz), since we came back from the break on June 8, there has been a 3-4% systematic offset that the f_cc correction does not account for; my bet is that it's due to optical spring detuning. The offset also shows up in ASD ratio spectra; see the DetChar summary page from the first day after the break. I have it as an action item to look into this in the next few days, so stay tuned!
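
A detuned optical spring would plausibly show up only at the low-frequency line. As a rough check, here is a sketch that uses one common form of the optical-spring factor multiplying the sensing function, f^2 / (f^2 + f_s^2 - i f f_s/Q). Sign conventions vary, and the spring frequency and Q below are hypothetical values, not fitted to H1 data. `optical_spring` is a hypothetical helper name.

```python
import numpy as np


def optical_spring(f, f_s, q):
    """Optical-spring factor f^2 / (f^2 + f_s^2 - i f f_s / Q) that multiplies
    a single-pole sensing function (one common convention; signs vary)."""
    f = np.asarray(f, dtype=float)
    return f**2 / (f**2 + f_s**2 - 1j * f * f_s / q)


pcal_lines = np.array([36.7, 331.9, 1083.7])  # Hz, PCAL lines from this entry
# Hypothetical detuned-spring parameters, not fitted to H1 data.
s = optical_spring(pcal_lines, f_s=7.0, q=10.0)
frac_dev = np.abs(np.abs(s) - 1.0)  # fractional magnitude change at each line
for f, d in zip(pcal_lines, frac_dev):
    print(f"{f:7.1f} Hz: {100 * d:.3f}% magnitude change")
```

With these illustrative parameters the 36.7 Hz line shifts by a few percent while the 331.9 Hz and 1083.7 Hz lines are essentially unaffected. That pattern is consistent with, though not proof of, the spring explanation.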

I'd also forgotten to post about the OMCS data I took on 2017-07-25. The data live here:

/ligo/svncommon/SusSVN/sus/trunk/OMCS/H1/OMC/SAGM1/Data/
- 2017-07-25_1812_H1SUSOMC_M1_WhiteNoise_L_0p02to50Hz.xml
- 2017-07-25_1812_H1SUSOMC_M1_WhiteNoise_P_0p02to50Hz.xml
- 2017-07-25_1812_H1SUSOMC_M1_WhiteNoise_R_0p02to50Hz.xml
- 2017-07-25_1812_H1SUSOMC_M1_WhiteNoise_T_0p02to50Hz.xml
- 2017-07-25_1812_H1SUSOMC_M1_WhiteNoise_V_0p02to50Hz.xml
- 2017-07-25_1812_H1SUSOMC_M1_WhiteNoise_Y_0p02to50Hz.xml

Detailed plots are now attached. They show that the OMC is clear of rubbing: the data look as they have for the past few years, and the differences we do see between LHO and LLO are in the lower-stage pitch modes, which are arbitrarily influenced by the ISC electronics cabling running down the chain (as we see for the reaction masses on the QUADs).