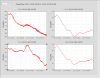

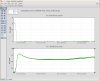

A few weeks ago, Hugh switched the end-station BSC Z blends to 45 mHz. We've looked at this a bit and it seems okay, so this morning I switched the corner-station (CS) BSCs to the 45 mHz blends in Z as well. The first attached plot shows the improvement in the Z direction on ITMX; the BS and ITMY were very similar, so I'm not posting them. The green lines are the ST1 T240 ASD (solid) and RMS (dashed) with the Quite_90 blend; brown is the T240 ASD (solid) and RMS (dashed) with the 45 mHz blends. Purple is the ground from when the 90 mHz blends were engaged; black is the ground with the 45 mHz blends. With the 90 mHz blends we were getting a factor of 3 to 5 suppression of the microseism; with the 45 mHz blends we get a factor of ~40. There is some increased motion in the 20-80 mHz region, but the RMS of the ISI is still lower.
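For anyone wanting to reproduce numbers like the dashed RMS traces or the "factor of ~40" from exported spectra, here is a minimal sketch. It assumes the RMS is accumulated from the highest frequency downward (a common convention for these plots, but an assumption here), uses trapezoidal integration of the ASD squared, and takes the suppression factor as the ground ASD divided by the ST1 ASD at each frequency bin. The function names are hypothetical and no real data is included.

```python
import math

def cumulative_rms(freqs, asd):
    """Cumulative RMS, integrated from the top frequency bin down.

    freqs: ascending frequencies in Hz (hypothetical exported grid)
    asd:   amplitude spectral density at each frequency, e.g. m/s/rtHz
    Entry i is the RMS of all content between freqs[i] and freqs[-1].
    """
    n = len(freqs)
    rms = [0.0] * n
    power = 0.0
    # Walk downward, adding the trapezoidal power of each interval
    for i in range(n - 2, -1, -1):
        df = freqs[i + 1] - freqs[i]
        power += 0.5 * (asd[i] ** 2 + asd[i + 1] ** 2) * df
        rms[i] = math.sqrt(power)
    return rms

def suppression(ground_asd, isi_asd):
    """Ground ASD over ISI ASD per bin; values above 1 mean isolation."""
    return [g / s for g, s in zip(ground_asd, isi_asd)]
```

Evaluating `suppression` at the bins around the microseism peak (roughly 0.1 to 0.3 Hz) would give the kind of ratio quoted above, assuming the ground and ST1 spectra share the same frequency grid and units.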

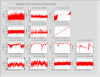

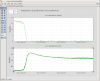

One possible problem with running the 45 mHz blends in Z is increased coupling to ST1 RZ. I've looked at the local ISI sensors, and this doesn't seem to be affecting us yet. In fact, the T240s (which are suspect because of Z/RZ coupling) and the CPSs both show less motion at the microseism with the 45 mHz blends (second plot: red and green are CPS and T240 RZ with the 90 mHz blends, blue and brown are CPS and T240 RZ with the 45 mHz blends). Additionally, the RZ drive doesn't seem to be significantly different, implying that we aren't contaminating RZ with increased Z drive (third plot: pink is with the 90 mHz blends, light blue is with the 45 mHz blends). After we relock today, I'll try to do a comparison with the ASC controls from last night.

This is the same configuration LLO runs on their BSCs. Arnaud made this change a while ago; Hugh and I just took our time catching up...