Ivey used the ISO calibration measurements I took earlier (85906) to calculate what the OSEMINF gains on SR3 should be (85907). The same script also calculates what the compensation gain in the DAMP filter bank should be.

The next step is to use OLG TFs to measure the DAMP filter bank gains needed to compensate for the change in OSEMINF gains, and then compare them with the calculated values to see how close they are.

I took two sets of OLG measurements for SR3:

- a set with the nominal OSEMINF gains:
    - T1: 1.478
    - T2: 0.942
    - T3: 0.952
    - LF: 1.302
    - RT: 1.087
    - SD: 1.290

- a set with the OSEMINF gains changed to the values in 85907:
    - T1: 3.213
    - T2: 1.517
    - T3: 1.494
    - LF: 1.733
    - RT: 1.494
    - SD: 1.793
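Each OSEMINF gain scales that OSEM's signal, so the ratio of nominal to new gain gives a quick look at how much each OSEM's contribution to the loop changed. These per-OSEM ratios are not the DOF compensation gains, since each DOF combines several OSEMs; the snippet below is only a sketch of the size of the change, using the gains listed above.

```python
# OSEMINF gains from the two measurement sets above.
nominal = {"T1": 1.478, "T2": 0.942, "T3": 0.952, "LF": 1.302, "RT": 1.087, "SD": 1.290}
new = {"T1": 3.213, "T2": 1.517, "T3": 1.494, "LF": 1.733, "RT": 1.494, "SD": 1.793}

# Ratio below 1 means the new gain amplifies that OSEM's signal more than before.
ratio = {osem: nominal[osem] / new[osem] for osem in nominal}

for osem, r in ratio.items():
    print(f"{osem}: nominal/new = {r:.3f}")
```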

Measurement settings:

- SR3 in HEALTH_CHECK, but with damping loops on
- SR3 damping gains nominal (all -0.5)
- HAM5 in ISOLATED

Nominal gain set:

/ligo/svncommon/SusSVN/sus/trunk/HLTS/H1/SR3/SAGM1/Data/2025-07-22_1700_H1SUSSR3_M1_WhiteNoise_{L,T,V,R,P,Y}_0p02to50Hz_OpenLoopGainTF.xml r12478

New gain set:

/ligo/svncommon/SusSVN/sus/trunk/HLTS/H1/SR3/SAGM1/Data/2025-07-22_1800_H1SUSSR3_M1_WhiteNoise_{L,T,V,R,P,Y}_0p02to50Hz_OpenLoopGainTF.xml r12478
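The {L,T,V,R,P,Y} in each path is shorthand for six files, one per DOF, committed at r12478. If you want to load them programmatically, a minimal sketch of expanding the pattern is below; the helper name olg_files is hypothetical.

```python
DATA_DIR = "/ligo/svncommon/SusSVN/sus/trunk/HLTS/H1/SR3/SAGM1/Data"
DOFS = ["L", "T", "V", "R", "P", "Y"]


def olg_files(timestamp):
    """Expand the {L,T,V,R,P,Y} pattern into one OLG TF path per DOF (hypothetical helper)."""
    return {
        dof: f"{DATA_DIR}/{timestamp}_H1SUSSR3_M1_WhiteNoise_{dof}_0p02to50Hz_OpenLoopGainTF.xml"
        for dof in DOFS
    }


nominal_files = olg_files("2025-07-22_1700")  # nominal OSEMINF gains
new_files = olg_files("2025-07-22_1800")      # OSEMINF gains from 85907
for dof in DOFS:
    print(nominal_files[dof])
```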

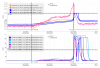

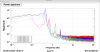

Once I had taken these measurements, I exported a txt file of the OLG for each DOF and ran one of my scripts, /ligo/svncommon/SusSVN/sus/trunk/HLTS/Common/MatlabTools/divide_traces_tfs.m. For each DOF, the script plots the OLGs measured with the two sets of OSEMINF gains so the traces can be compared, then divides one trace by the other and averages the ratio. That average is the compensation gain that goes in as a filter in the DAMP filter bank (plots). The compensation gains I got are below:

- L: 0.740
- T: 0.732
- V: 0.548
- R: 0.550
- P: 0.628
- Y: 0.757

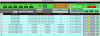
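The divide-and-average step can be sketched in Python. This is not divide_traces_tfs.m itself, and several details are assumptions: each exported txt file is taken to have three columns (frequency in Hz, real part, imaginary part of the OLG) on a shared frequency grid, the ratio is taken as nominal-gain OLG over new-gain OLG (which gives values below 1, like the gains above), and the optional averaging band and helper names are hypothetical.

```python
import numpy as np


def load_olg_txt(path):
    """Load one exported OLG trace.

    ASSUMPTION: three whitespace-separated columns (frequency in Hz,
    real part, imaginary part); the actual export format may differ.
    """
    freq, real, imag = np.loadtxt(path, usecols=(0, 1, 2), unpack=True)
    return freq, real + 1j * imag


def compensation_gain(freq, olg_nominal, olg_new, f_lo=None, f_hi=None):
    """Mean of |OLG_nominal| / |OLG_new| over the points between f_lo and f_hi.

    Both traces must share the same frequency grid. The band limits are
    hypothetical knobs; leave them as None to average over every point.
    """
    freq = np.asarray(freq, dtype=float)
    ratio = np.abs(np.asarray(olg_nominal)) / np.abs(np.asarray(olg_new))
    in_band = np.ones(freq.shape, dtype=bool)
    if f_lo is not None:
        in_band &= freq >= f_lo
    if f_hi is not None:
        in_band &= freq <= f_hi
    if not in_band.any():
        raise ValueError("no frequency points in the requested band")
    return float(np.mean(ratio[in_band]))


# Synthetic check, not SR3 data: scale a stand-in loop shape by 1/0.74 to
# mimic the loop gain going up after the OSEMINF change (0.02 to 50 Hz grid).
f = np.logspace(np.log10(0.02), np.log10(50), 200)
olg_nom = 1.0 / (1j * f)
olg_new = olg_nom / 0.74
gain = compensation_gain(f, olg_nom, olg_new, f_lo=0.1, f_hi=10.0)
print(f"compensation gain: {gain:.3f}")
```

With real data you would call load_olg_txt on the nominal and new exports for one DOF and pass the results to compensation_gain. Note that a plain mean over log-spaced points weights each decade equally.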

| DOF | DAMP compensation gain measured from OLG TFs | DAMP compensation gain calculated from ISO calibration measurements (85907) | Percent difference (%) |
|---|---|---|---|
| L | 0.740 | 0.740 | 0.0 |
| T | 0.732 | 0.719 | 1.8 |
| V | 0.548 | 0.545 | 0.5 |
| R | 0.550 | 0.545 | 0.9 |
| P | 0.628 | 0.629 | 0.2 |
| Y | 0.757 | 0.740 | 2.3 |
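The percent-difference definition used for the last column isn't stated. One definition that reproduces every entry from the rounded gains is the symmetric percent difference, |a - b| / ((a + b) / 2) x 100; treat this as an assumption rather than the formula actually used.

```python
def percent_difference(a, b):
    """Symmetric percent difference: |a - b| relative to the mean of a and b (assumed definition)."""
    return abs(a - b) / ((a + b) / 2) * 100


# Compensation gains from the table: OLG TF measurement vs ISO calibration calculation.
olg = {"L": 0.740, "T": 0.732, "V": 0.548, "R": 0.550, "P": 0.628, "Y": 0.757}
iso = {"L": 0.740, "T": 0.719, "V": 0.545, "R": 0.545, "P": 0.629, "Y": 0.740}

table = {dof: round(percent_difference(olg[dof], iso[dof]), 1) for dof in olg}
for dof, pct in table.items():
    print(f"{dof}: {pct:.1f} %")
```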

These are pretty similar to what my script found last time, before the satamp swap (85288), and they agree with the values from Ivey's script to within 2.3%.

Given how closely Ivey's script matches the measurements, in the future we may not need to run both sets of OLG transfer functions and can just use the values the script gives.

The M1 to M1 TFs were supposed to be regular TFs, so they are in a separate alog: 86202