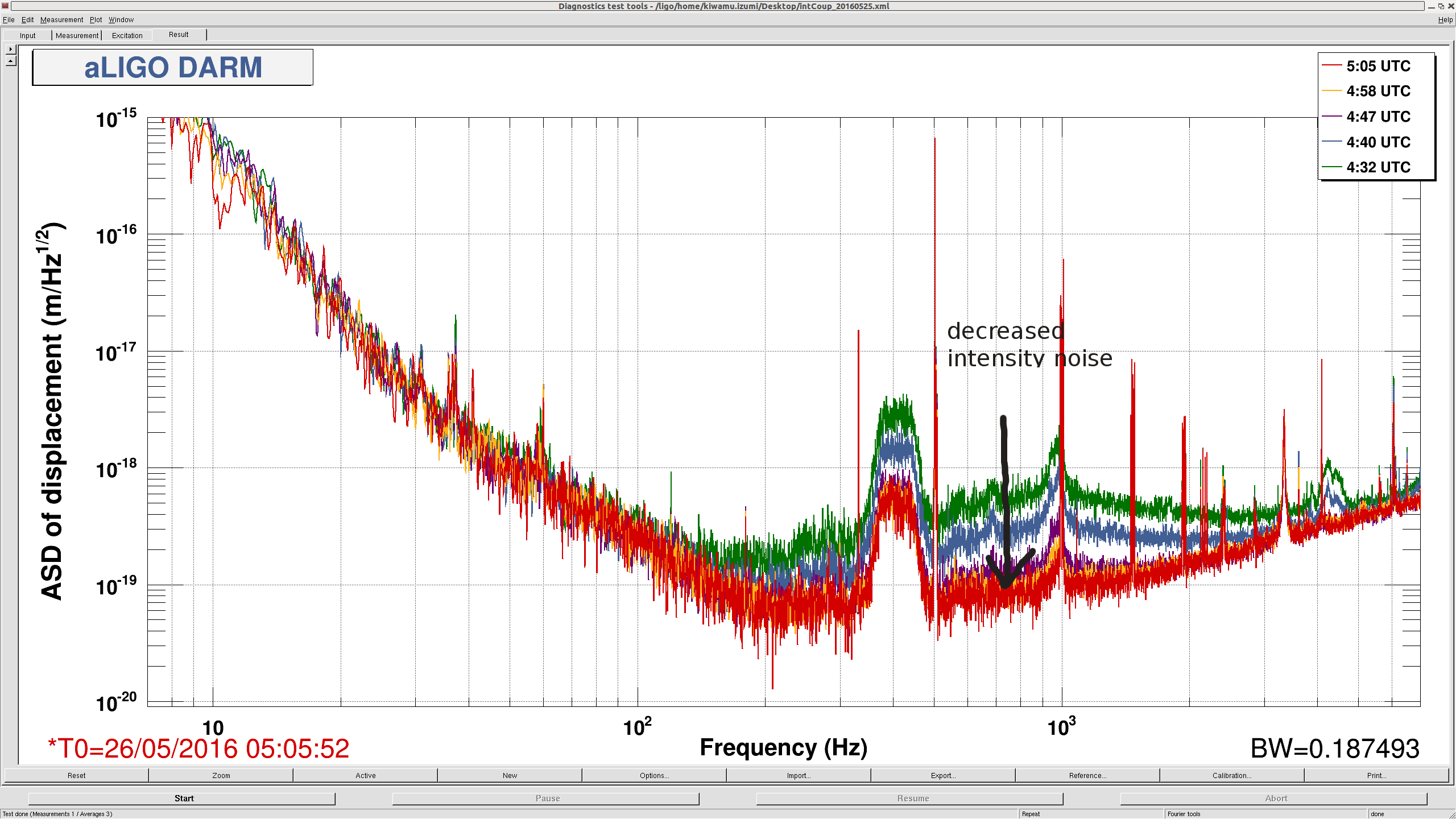

Last night, we ran a quick TCS test where we attempted to minimize the intensity noise coupling to the DCPDs by changing the CO2 differential heating.

It seems that the following CO2 setting gives a much better intensity noise coupling when the PSL power is 25 W:

This did not improve the recycling gain much; it seems to have increased by only 2%.

According to a past measurement at a lower PSL power of 2 W (alog 26264), a good differential CO2 power was found to be P_{co2x} - P_{co2y} = 270 mW (or probably less than 270 mW, because I did not explore lower differential powers).

This could be an indication that ITMY has a larger absorption at 1064 nm, such that the differential self-heating scales linearly with the PSL power. We should confirm this hypothesis using the HWS signals.

[The test]

No second or third loops engaged, DC readout, no SRC1 ASC loop.

- 3:34 UTC, full resonance with 2 W PSL
- 4:12 UTC, PSL --> 25 W
- 4:24 UTC, CO2Y 260 mW --> 0 mW
- 4:49 UTC, PSL --> 22 W for letting the 0.5 Hz instability go away
- 4:56 UTC, CO2X 480 mW --> 790 mW
- 5:12 UTC, lock loss
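For comparison with the 270 mW differential power quoted above for 2 W, the differential CO2 power at each stage of this test can be tabulated with a short script. This is only a bookkeeping sketch: it assumes that CO2X sat at 480 mW and CO2Y at 260 mW from the start of the test until the steps listed above (the values on the left of each arrow), and the variable and function names are made up for illustration.

```python
# CO2 powers in mW after each step of the test.
# Assumption: CO2X = 480 mW and CO2Y = 260 mW were unchanged from the
# start of the test until the steps at 4:24 and 4:56 UTC.
steps = [
    ("4:12 UTC", 480, 260),  # PSL raised to 25 W, CO2 not yet touched
    ("4:24 UTC", 480, 0),    # CO2Y 260 mW --> 0 mW
    ("4:56 UTC", 790, 0),    # CO2X 480 mW --> 790 mW
]


def differential_power(co2x_mw, co2y_mw):
    """Return P_co2x - P_co2y in mW."""
    return co2x_mw - co2y_mw


diffs = [(time, differential_power(x, y)) for time, x, y in steps]

for time, diff in diffs:
    print(f"{time}: P_co2x - P_co2y = {diff} mW")
```

Under these assumptions the differential power went from 220 mW to 480 mW at 4:24 UTC and then to 790 mW at 4:56 UTC.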

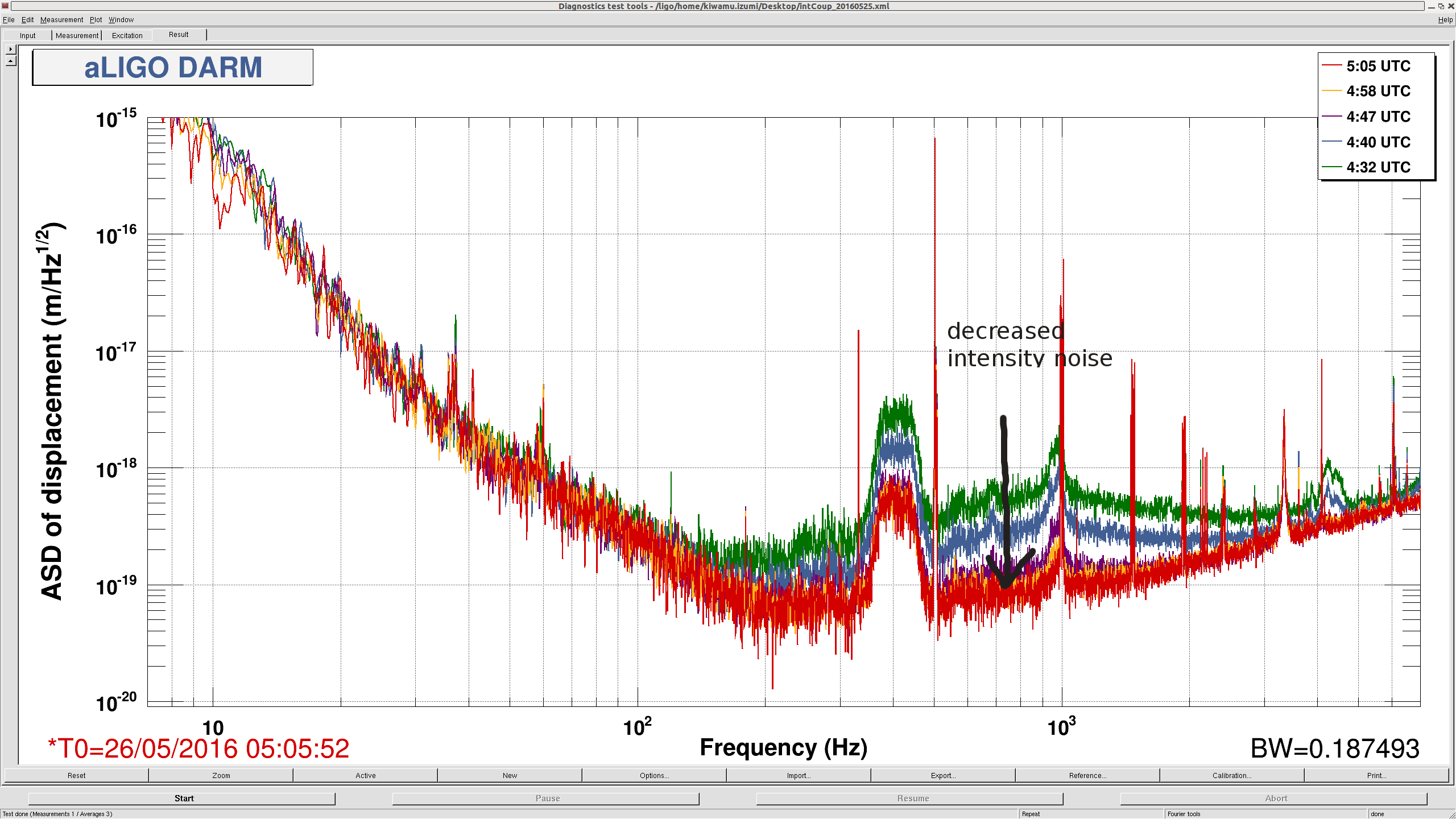

A drastic reduction of the intensity noise coupling was observed, mostly between 4:24 and approximately 5:00 UTC, indicating that reducing the CO2Y power helped improve the coupling. After 5:00 UTC, we did not see a significant further reduction. This may mean that we were already close to an optimum point where the coupling is minimized. The attached plot shows DARM spectra from various times during the test.

The broad peak at around 400 Hz is my intentional excitation of the first loop with band-passed Gaussian noise, injected in order to check the coupling to the DCPDs. As shown in the spectra, the reduction from the beginning to the end of the test is about a factor of 5. As reported in alog 27370, the broad noise from above 100 Hz up to several kHz is indeed intensity noise, and therefore we see the noise floor in this frequency band decreasing too.