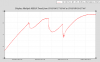

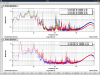

Here is another snapshot of Y-arm pressures over five days. We don't see a response in Y2 to changes in CP4 temperature, but the Y1 side trends closely with CP4. GV11 (nearer to the mid-station) has an outer o-ring leak on its gate, but we evacuated this annulus and valved it out. GV12 (the Y2 boundary valve) has both inner and outer o-ring leaks on its gate; it was also evacuated and valved out.
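One way to put a number on "trends closely" is a correlation coefficient between the temperature trend and each pressure trend. The sketch below is only an illustration: the function name `pearson` and all sample values are made up for the example and are not taken from the attached plots. It correlates log10 of pressure with temperature, on the assumption that thermally driven outgassing scales roughly exponentially with temperature.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("a constant series has no defined correlation")
    return sxy / math.sqrt(sxx * syy)

# Synthetic placeholder data (NOT real channel readings).
cp4_temp_c = [20.0, 25.0, 30.0, 35.0, 40.0]

# Hypothetical Y1-like series: pressure rises exponentially with temperature.
y1_torr = [1e-9 * 10 ** (0.02 * (t - 20.0)) for t in cp4_temp_c]

# Hypothetical Y2-like series: small wiggle unrelated to temperature.
y2_torr = [2.0e-9, 2.1e-9, 2.0e-9, 2.1e-9, 2.0e-9]

r_y1 = pearson(cp4_temp_c, [math.log10(p) for p in y1_torr])
r_y2 = pearson(cp4_temp_c, [math.log10(p) for p in y2_torr])
```

With real trend data, a value of `r_y1` near 1 alongside `r_y2` near 0 would match the qualitative picture described above. Sharp steps in pressure would show up as residuals that a smooth temperature fit does not explain.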

When BSC6 was replaced with a spool at mid-Y several years ago, GV11 was exposed to air, and the spool was not baked after installation (was it baked at the factory?). We therefore expect outgassing from these components during the CP4 bake, but the sharp cuffs in Y1 pressure are not what we would predict from temperature changes in steel.

The five-day trend of CP4 air temperature in the enclosure is also attached.

The replacement spool was baked at GNB but not after installation. The same is true for the MC tubes in the LVEA, which were part of the same procurement for aLIGO.