Ansel Neunzert, Pat Meyers

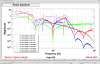

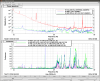

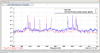

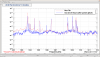

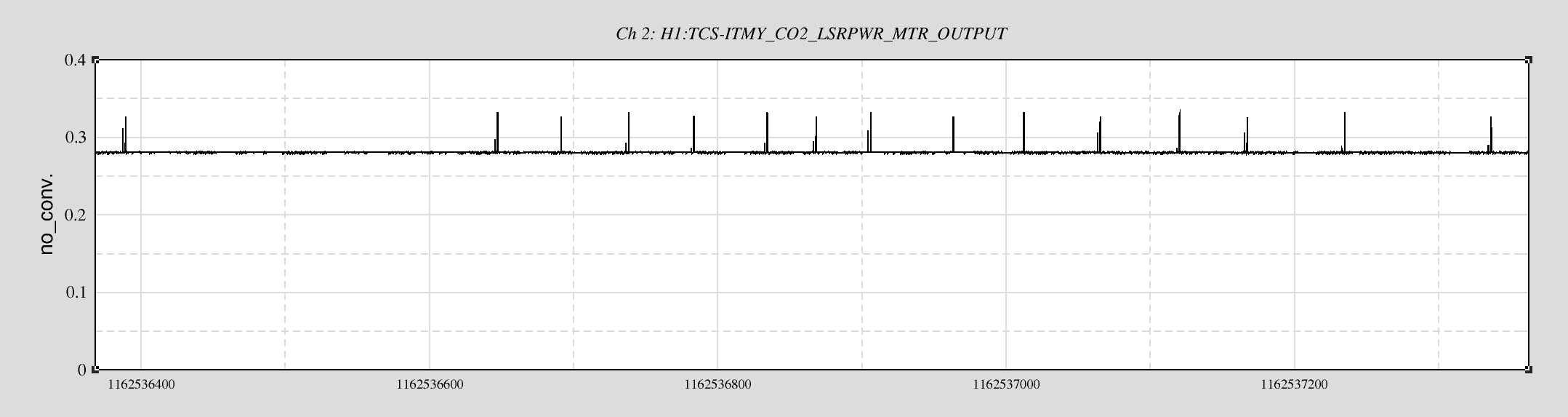

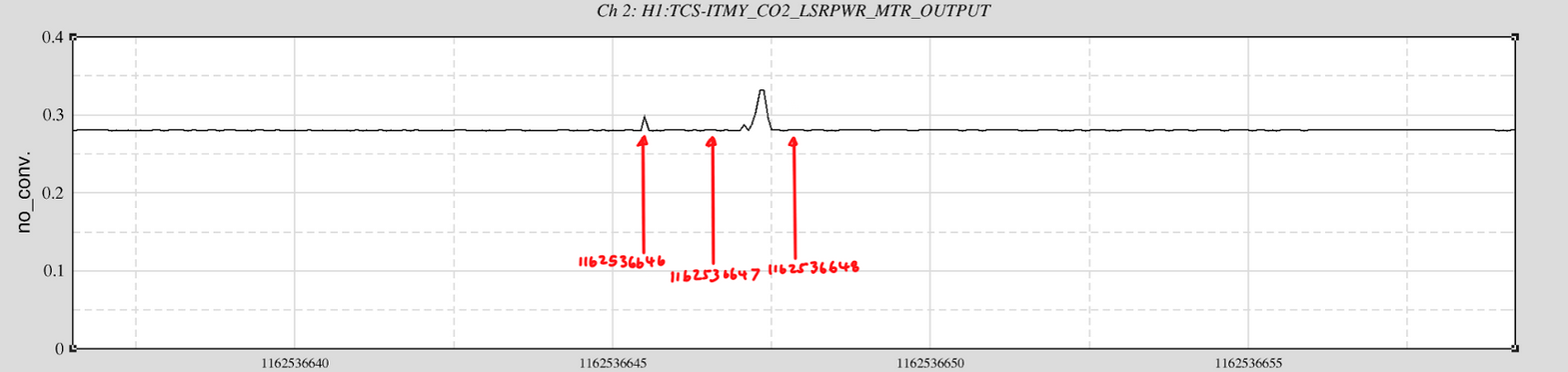

We see a strong 11 ± 0.0001 Hz comb in DARM during the lock on November 5th [first harmonic in plot: DARM_11_5_2016.png]. It is strongly coherent with quite a few corner station channels, including magnetometers, microphones, and radio receivers [see plot 2, for example].

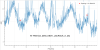

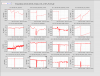

It looks like this comb shows up in the CS magnetometers around 01:00 - 02:00 UTC on Saturday, November 5th [see plots 3-6; red dots indicate n*11.0 Hz]. There are links to interactive plots below, as well.
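For anyone who wants to reproduce the red-dot markers, here is a minimal sketch of how the n*11.0 Hz tooth frequencies could be generated and how a measured line could be tested against the comb. The function names are hypothetical, and scaling the tolerance with harmonic number n is our assumption (an uncertainty of 0.0001 Hz on the spacing grows to n*0.0001 Hz at the nth tooth); this is not the code used to make the plots.

```python
def comb_harmonics(spacing_hz, f_max_hz):
    """Return the comb tooth frequencies n * spacing_hz (n = 1, 2, ...) up to f_max_hz."""
    teeth = []
    n = 1
    while n * spacing_hz <= f_max_hz:
        teeth.append(n * spacing_hz)
        n += 1
    return teeth


def nearest_tooth(freq_hz, spacing_hz):
    """Return (n, residual_hz) for the comb tooth closest to freq_hz."""
    n = max(1, round(freq_hz / spacing_hz))
    return n, freq_hz - n * spacing_hz


def is_on_comb(freq_hz, spacing_hz, spacing_tol_hz):
    """True if freq_hz lies within n * spacing_tol_hz of the nth tooth.

    Assumption: the allowed offset scales with harmonic number n,
    because an error in the spacing accumulates from tooth to tooth.
    """
    n, residual = nearest_tooth(freq_hz, spacing_hz)
    return abs(residual) <= n * spacing_tol_hz


if __name__ == "__main__":
    # Teeth that would be marked below 100 Hz for an 11.0 Hz comb.
    print(comb_harmonics(11.0, 100.0))
    # Is a line at 33.0002 Hz consistent with 11 +/- 0.0001 Hz spacing?
    print(is_on_comb(33.0002, 11.0, 0.0001))
```

A line that fails `is_on_comb` for every plausible spacing is more likely an unrelated narrow feature than a member of this comb.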

We don't think this is related to the PEM injections, which took place later in the day, but there was some HWS work around that time (although it sounds like that is an unlikely source).

There's no evidence of this comb in the lock from today (November 7th).

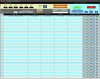

——————— stamp-pem results —————————

[Coherence * Number of averages is color, rows are channels, columns are 0.1 Hz frequency bins]

https://ldas-jobs.ligo-wa.caltech.edu/~meyers/stamppemtest/HTML/day/20161105/Physical%20Environment%20Monitoring:%20Magnetometers.html

https://ldas-jobs.ligo-wa.caltech.edu/~meyers/stamppemtest//HTML/day/20161105/Physical%20Environment%20Monitoring:%20Low%20frequency%20microphones.html

https://ldas-jobs.ligo-wa.caltech.edu/~meyers/stamppemtest//HTML/day/20161105/Physical%20Environment%20Monitoring:%20Radio%20frequency%20recievers.html
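As a rough illustration of the color scale on these pages, the sketch below computes coherence times the number of averages between two time series, using 10 s segments so that the frequency bins are 0.1 Hz wide. This is not the stamp-pem code; the function name, the Hann window, and the non-overlapping segmentation are assumptions made for the example. For independent Gaussian noise the expected coherence is about 1/N for N averages, so the product sits near 1 in quiet bins and approaches N where the two channels share a line.

```python
import numpy as np


def coherence_times_navg(x, y, fs, seg_sec=10.0):
    """Return (freqs, coherence * navg, navg) for two equal-length time series.

    Segments are non-overlapping and Hann windowed (an assumption of this
    sketch); seg_sec = 10 s gives 0.1 Hz frequency bins.
    """
    seg_len = int(round(seg_sec * fs))
    navg = len(x) // seg_len
    window = np.hanning(seg_len)

    pxx = np.zeros(seg_len // 2 + 1)
    pyy = np.zeros(seg_len // 2 + 1)
    pxy = np.zeros(seg_len // 2 + 1, dtype=complex)
    for k in range(navg):
        sl = slice(k * seg_len, (k + 1) * seg_len)
        fx = np.fft.rfft(window * x[sl])
        fy = np.fft.rfft(window * y[sl])
        pxx += np.abs(fx) ** 2          # auto-power of x, summed over segments
        pyy += np.abs(fy) ** 2          # auto-power of y, summed over segments
        pxy += fx * np.conj(fy)         # cross-power, summed over segments

    coherence = np.abs(pxy) ** 2 / (pxx * pyy)
    freqs = np.fft.rfftfreq(seg_len, d=1.0 / fs)
    return freqs, coherence * navg, navg


if __name__ == "__main__":
    # Synthetic example: two noisy channels sharing an 11 Hz line.
    rng = np.random.default_rng(0)
    fs = 64.0
    t = np.arange(int(200 * fs)) / fs
    line = np.sin(2 * np.pi * 11.0 * t)
    x = line + 0.1 * rng.standard_normal(t.size)
    y = 0.5 * line + 0.1 * rng.standard_normal(t.size)
    freqs, stat, navg = coherence_times_navg(x, y, fs)
    print(navg, freqs[np.argmax(stat)])
```

In the synthetic example the statistic peaks in the 11 Hz bin, which is the kind of bright column one would look for at each comb tooth in the channel-versus-frequency maps.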

————————— Interactive plots —————————————