J. Kissel

I'm in a rabbit hole, and digging my way out by shaving yaks. The take-away, if you find this aLOG TL;DR -- this is an expansion of the understanding of one part of the multi-layer problem described in LHO:72622.

I want to pick up where I left off in modeling the detector calibration's response to thermalization except using the response function, (1+G)/C, instead of just the sensing function, C (LHO:70150).

I need to do this for when

(a) we had thermalization lines ON during times of

(b) PSL input power at 75W (2023-04-14 to 2023-06-21) and

(c) PSL input power at 60W (2023-06-21 to now).

"Picking up where I left off" means using the response function as my metric of thermalization instead of the sensing function.

However, the measurement of the sensing function w.r.t. its model, C_meas / C_model, is made from the ratio of measured transfer functions (DARM_IN1/PCAL) * (DARMEXC/DARMIN2), where only the calibration of PCAL matters. The measurement of the response function w.r.t. its model, R_meas / R_model, on the other hand, is ''simply'' made by the transfer function of ([best calibrated product])/PCAL, where the [best calibrated product] can be whatever you like, as long as you understand the systematic error and/or extra steps you need to account for before displaying what you really want.

In most cases, the low-latency GDS pipeline product, H1:GDS-CALIB_STRAIN, is the [best calibrated product], with the least amount of systematic error in it. It corrects for the flaws in the front-end (super-Nyquist features, computational delays, etc.) and it corrects for ''known'' time dependence based on calibration-line informed, time-dependent correction factors or TDCFs (neither of which the real-time front-end product, CAL-DELTAL_EXTERNAL_DQ, does). So I want to start there, using the transfer function H1:GDS-CALIB_STRAIN / H1:CAL-DELTAL_REF_PCAL_DQ for my ([best calibrated product])/PCAL transfer function measurement.

HOWEVER, over the time periods when we had thermalization lines on, H1:GDS-CALIB_STRAIN had two major systematic errors in it itself that were *not* the thermalization. In short, those errors were:

(1) between 2023-04-26 and 2023-08-07, we neglected to include the model of the ETMX ESD driver's 3.2 kHz pole (see LHO:72043) and

(2) between 2023-07-20 and 2023-08-03, we installed a buggy bad MICH FF filter (LHO:71790, LHO:71937, and LHO:71946) that created excess noise as a spectral feature which polluted the 15.1 Hz, SUS-driven calibration line that's used to inform \kappa_UIM -- the time dependence of the relative actuation strength for the ETMX UIM stage. The front-end demodulates that frequency with a demod called SUS_LINE1, creating an estimate of the magnitude, phase, coherence, and uncertainty of that SUS line w.r.t. DARM_ERR.

When did we have thermalization lines on for 60W PSL input? Oh, y'know, from 2023-07-25 to 2023-08-09, exactly at the height of both of these errors. #facepalm

So -- I need to understand these systematic errors well in order to accurately remove them prior to my thermalization investigation.

Joe covers both of these flavors of error in LHO:72622.

However, after trying to digest the latter problem, (2), and his aLOG, I didn't understand why a spoiled \kappa_U alone had such an impact -- since we know that the UIM actuation strength is quite unimpactful to the response function.

INDEED, (2) is even worse than "we're not correcting for the change in UIM actuation strength" -- because

(3) Though the GDS pipeline (that finishes the calibration to form H1:GDS-CALIB_STRAIN) computes its own TDCFs from the calibration lines, GDS gates the value of its TDCFs with the front-end-, CALCS-, computed uncertainty. So, in that way, the GDS TDCFs are still influenced by the front-end, CALCS computation of TDCFs.

So -- let's walk through that for a second.

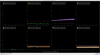

The CALCS-computed uncertainty for each TDCF is based on the coherence between the calibration lines and DARM_ERR -- but in a crude, lazy way that we thought would be good enough in 2018 -- see G1801594, page 13. I've captured a current screenshot of the now-times Simulink model (First Image Attachment) to confirm the algorithm is still the same as it was prior to O3.

In short, the uncertainty for the actuator strengths, \kappa_U, \kappa_P, and \kappa_T, is created by simply taking the larger of the two calibration line transfer functions' uncertainty that go in to computing that TDCF -- SUS_LINE[1,2,3] or PCAL_LINE1.

HOWEVER -- because the optical gain and cavity pole, \kappa_C and f_CC, calculation depends on subtracting out the live DARM actuator (see the appearance of "A(f,t)" in the definition of "S(f,t)" in Eq. 17 from ), their uncertainty is crafted from the largest of the \kappa_U, \kappa_P, and \kappa_T, AND PCAL_LINE2 uncertainties. It's the same uncertainty for both \kappa_C and f_CC, since they're both derived from the magnitude and phase of the same PCAL_LINE2.

That means the large SUS_LINE1 >> \kappa_U uncertainty propagates through this "greatest of" algorithm, and blows out the \kappa_C and f_CC uncertainty as well -- which triggered the GDS pipeline to gate its TDCFs for \kappa_U, \kappa_C, and f_CC, freezing them at their 2023-07-20 values from 2023-07-20 to 2023-08-07.
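To make the "greatest of" propagation concrete, here is a minimal Python sketch of the logic as described above. It is not the CALCS front-end code. The function name, the dictionary keys, and the example numbers are all made up for illustration. The pairing SUS_LINE1 -> \kappa_U is from the text above; pairing SUS_LINE2 with \kappa_P and SUS_LINE3 with \kappa_T is my assumption from the ordering.

```python
def calcs_like_tdcf_uncertainties(line_unc):
    """Propagate per-line uncertainties to per-TDCF uncertainties
    with a 'greatest of' rule (illustrative sketch, not the real model).

    line_unc: dict of relative uncertainties keyed by
              SUS_LINE1, SUS_LINE2, SUS_LINE3, PCAL_LINE1, PCAL_LINE2.
    """
    pcal1 = line_unc["PCAL_LINE1"]

    # Each actuator TDCF takes the larger of its SUS line and PCAL_LINE1.
    # SUS_LINE1 -> kappa_U is stated in the text; the other two pairings
    # are assumed from the ordering of the lines.
    kappa_u = max(line_unc["SUS_LINE1"], pcal1)
    kappa_p = max(line_unc["SUS_LINE2"], pcal1)
    kappa_t = max(line_unc["SUS_LINE3"], pcal1)

    # kappa_C and f_CC share one uncertainty: the largest of the three
    # actuator uncertainties and PCAL_LINE2.
    sensing = max(kappa_u, kappa_p, kappa_t, line_unc["PCAL_LINE2"])

    return {
        "kappa_U": kappa_u,
        "kappa_P": kappa_p,
        "kappa_T": kappa_t,
        "kappa_C": sensing,
        "f_CC": sensing,
    }


# Made-up numbers: only SUS_LINE1 is polluted, everything else is clean.
example = calcs_like_tdcf_uncertainties({
    "SUS_LINE1": 0.02,
    "SUS_LINE2": 0.001,
    "SUS_LINE3": 0.001,
    "PCAL_LINE1": 0.001,
    "PCAL_LINE2": 0.001,
})
print(example)
```

With only SUS_LINE1 polluted, \kappa_P and \kappa_T keep their small uncertainty, but \kappa_C and f_CC inherit the large \kappa_U value even though PCAL_LINE2 itself is clean.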

THAT means, that --for better or worse-- when \kappa_C and f_CC are influenced by thermalization for the first ~3 hours after power up, GDS did not correct for it. Thus, a third systematic error in GDS, (3).

*sigh*

OK, let's look at some plots.

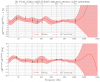

My Second Image Attachment shows a trend of all the front-end computed uncertainties involved around 2023-07-20 when the bad MICH FF is installed.

:: The first row and last row show that the UIM uncertainty -- and the CAV_POLE uncertainty (again, used for both \kappa_C and f_CC)

:: Remember GDS gates its TDCFs with a threshold of uncertainty = 0.005 (i.e. 0.5%), where the front-end gates with an uncertainty of 0.05 (i.e. 5%).
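The difference between those two thresholds is the crux, so here is a toy Python sketch of gating. The thresholds are the ones quoted above. Everything else is an assumption for illustration: the function name, the "hold the last accepted value" behavior (consistent with the TDCFs being "frozen", but the real pipelines may smooth or median-filter as well), the `<=` comparison, and the made-up sample values.

```python
GDS_THRESHOLD = 0.005        # 0.5%, from the text
FRONT_END_THRESHOLD = 0.05   # 5%, from the text


def gate_tdcf(values, uncertainties, threshold, initial=1.0):
    """Hold the last accepted TDCF value whenever the uncertainty
    exceeds the threshold (assumed behavior, for illustration only)."""
    held = initial
    gated = []
    for value, unc in zip(values, uncertainties):
        if unc <= threshold:
            held = value      # sample accepted: update the held value
        gated.append(held)    # sample rejected: repeat the held value
    return gated


# Made-up samples: the uncertainty jumps to ~1% after the first sample,
# which is above the GDS threshold but below the front-end threshold.
values = [0.978, 0.960, 0.965, 0.975]
uncertainties = [0.002, 0.010, 0.012, 0.011]

gds_like = gate_tdcf(values, uncertainties, GDS_THRESHOLD)
front_end_like = gate_tdcf(values, uncertainties, FRONT_END_THRESHOLD)

print("GDS-like:      ", gds_like)
print("front-end-like:", front_end_like)
```

In this toy case the GDS-like output stays stuck at the first value while the front-end-like output keeps tracking, which is the qualitative behavior described for \kappa_U, \kappa_C, and f_CC.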

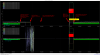

First PDF attachment shows in much clearer detail the *values* of both the CALCS and GDS TDCFs during a thermalization time that Joe chose in LHO:72622, 2023-07-26 01:10 UTC.

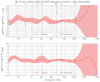

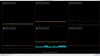

My Second PDF attachment breaks down Joe's LHO:72622 Second Image attachment in to its components:

:: ORANGE shows the correction to the "reference time" response function with the frozen, gated, GDS-computed TDCFs, by the ratio of the "nominal" response function (as computed from the 20230621T211522Z report's pydarm_H1.ini) to that same response function, but with the optical gain, cavity pole, and actuator strengths updated with the frozen GDS TDCF values,

\kappa_C = 0.97828 (frozen at the low, thermalized OM2 HOT value, reflecting the unaccounted-for change just one day prior at 2023-07-19; LHO:71484)

f_CC = 444.4 Hz (frozen)

\kappa_U = 1.05196 (frozen at a large, noisy value, right after the MICH FF filter is installed)

\kappa_P = 0.99952 (not frozen)

\kappa_T = 1.03184 (not frozen, large at 3% because of the TST actuation strength drift)

:: BLUE shows the correction to the "reference time" response function with the not-frozen, non-gated, CALCS-computed TDCFs, by the ratio of the "nominal" 20230621T211522Z response function to that same response function updated with the CALCS values,

\kappa_C = 0.95820 (even lower than OM2 HOT value because this time is during thermalization)

f_CC = 448.9 Hz (higher because IFO mode matching and loss are better before the IFO thermalizes)

\kappa_U = 0.98392 (arguably more accurate value, closer to the mean of a very noisy value)

\kappa_P = 0.99763 (the same as GDS, to within noise or uncertainty)

\kappa_T = 1.03073 (the same as GDS, to within noise or uncertainty)

:: GREEN is a ratio of BLUE / ORANGE -- and thus a repeat of what Joe shows in his LHO:72622 Second Image attachment.
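For a rough feel of how big the sensing-side difference between the two TDCF sets is, here is a small Python sketch that evaluates only the time-dependent part of a single-pole sensing model, \kappa_C / (1 + i f / f_CC), with the \kappa_C and f_CC values listed above. This is an assumption-laden toy, not pydarm: it ignores the actuator terms, the DARM loop, and anything beyond the single pole, and the function names are made up. Since R = (1+G)/C reduces to 1/C wherever |G| << 1, and reading the ORANGE and BLUE ratios as nominal/updated, the CALCS/GDS sensing ratio should roughly track the GREEN curve only well above the DARM unity gain frequency.

```python
import cmath
import math


def single_pole_sensing(f_hz, kappa_c, f_cc_hz):
    """Time-dependent part of a single-pole sensing model:
    kappa_C / (1 + i f / f_CC). The static part cancels in ratios."""
    return kappa_c / (1 + 1j * f_hz / f_cc_hz)


# TDCF values quoted in the lists above.
GDS_FROZEN = {"kappa_c": 0.97828, "f_cc_hz": 444.4}
CALCS_LIVE = {"kappa_c": 0.95820, "f_cc_hz": 448.9}


def sensing_ratio(f_hz):
    """Sensing model with CALCS TDCFs over sensing model with GDS TDCFs."""
    return (single_pole_sensing(f_hz, **CALCS_LIVE)
            / single_pole_sensing(f_hz, **GDS_FROZEN))


for f_hz in (20.0, 100.0, 400.0, 1000.0):
    r = sensing_ratio(f_hz)
    print(f"{f_hz:7.1f} Hz  |ratio| = {abs(r):.4f}  "
          f"phase = {math.degrees(cmath.phase(r)):+.3f} deg")
```

At low frequency the ratio is just the ratio of the two \kappa_C values (about 2% below unity); well above the cavity pole it tends toward the ratio of the \kappa_C * f_CC products. The actuator terms (\kappa_U, \kappa_P, \kappa_T) would need the full response function model to include properly.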

Joe was trying to motivate why (1) the missing ESD driver 3.2 kHz pole is a separable problem from (2) and (3), the bad MICH FF filter spoiling the uncertainty in \kappa_U, \kappa_C, and f_CC, so he glossed over this issue. Further, what he plotted in his second attachment, akin to my GREEN curve, is the *ratio* between corrections, not the actual corrections themselves (ORANGE and BLUE), so it kind of hid this difference.